Do AI Detectors Actually Work?

September 12, 2025

So, do AI detectors actually work?

It’s a simple question with a messy answer: sometimes. Some of these tools are surprisingly good at sniffing out AI-generated text. Others are so unreliable they’re basically guessing. It creates a ton of confusion for anyone just trying to get a straight answer.

The Million-Dollar Question: Do AI Detectors Work?

Think of the current AI detection world like a weather forecast. One app might nail the storm prediction down to the minute, giving you plenty of time to grab an umbrella. Another might promise clear skies right before a torrential downpour leaves you soaked. That’s the kind of variability we’re dealing with.

This performance gap isn't just a small bug; it’s a massive problem for students, teachers, and creators. Trusting a bad detector is like using a broken compass—it feels like you're getting guidance, but you could end up completely lost. The fallout ranges from false accusations of plagiarism to accidentally publishing content that sounds like a robot wrote it.

A Wildly Inconsistent Spectrum of Accuracy

This isn't just a hunch, either. The data backs it up. One recent study on AI detector sensitivity put ten popular tools to the test and found a jaw-dropping range in performance.

A detector's sensitivity—its ability to correctly flag AI text—swung from a perfect 100% all the way down to a useless 0%. That’s not a typo. Some tools couldn't spot AI content at all.

On the flip side, tools from companies like Copyleaks, QuillBot, and Sapling hit that 100% mark in the study, proving that accurate detection is actually possible.
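For readers who want to see what "sensitivity" means in numbers, here is a minimal sketch. The sample counts are made up for illustration and do not come from the study.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Share of AI-written samples that the detector correctly flagged."""
    total_ai_samples = true_positives + false_negatives
    if total_ai_samples == 0:
        raise ValueError("need at least one AI-written sample")
    return true_positives / total_ai_samples


# Hypothetical detector A flags all 20 AI-written samples.
perfect = sensitivity(true_positives=20, false_negatives=0)
# Hypothetical detector B misses every one of them.
blind = sensitivity(true_positives=0, false_negatives=20)

print(f"{perfect:.0%} vs {blind:.0%}")
```

Keep in mind that sensitivity only counts AI text that gets caught. A tool that flags everything as AI would also score 100% here, so the number says nothing about how often human writing is wrongly flagged.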

This huge difference in quality makes it crucial to know which tools you can lean on and which ones to avoid.

AI Detector Performance At a Glance

To give you a clearer picture, this table breaks down the performance of popular AI detectors based on what the latest research has found. You can see just how wide the accuracy gap really is.

| Detector Tier | Reported Accuracy Range | Example Tools |
|---|---|---|
| High Performers | 95% - 100% | Copyleaks, QuillBot, Sapling |
| Mid-Tier Performers | 60% - 90% | GPTZero, Writer |
| Low Performers | 0% - 50% | ZGPS, Check-plagiarism |

The takeaway here is pretty clear: not all detectors are created equal. Some are fantastic, while others fail spectacularly.

The bottom line: An AI detector's result should never be the final word. The best ones are reliable enough to be a great starting point for a human review, but even they aren't flawless. Treat them as an assistant, not a judge.

Now that we've established that this performance gap exists, let's dig into why. In the next sections, we'll pull back the curtain on the technology powering these tools and get into their real-world limitations.

How AI Detectors Try to Unmask AI Content

Think of an AI detector as a digital detective trying to spot a forgery. It’s not just reading the words on the page; it’s looking for the author’s unique “fingerprint”—those subtle, almost invisible patterns that make human writing so distinct.

These tools are programmed to hunt for the statistical clues that give away a text’s robotic origins. They’re built on machine learning models trained on enormous libraries of both human and AI-generated content, which teaches them to spot the difference.

The Concepts of Perplexity and Burstiness

Two of the biggest clues these detectors look for are perplexity and burstiness. They might sound technical, but the ideas behind them are pretty straightforward and tell you a lot about how these tools actually think.

Perplexity is really just a measure of predictability. Human writing is like a winding country road—full of unexpected turns, surprising word choices, and sentences that vary in length. You can’t always guess what’s coming next. That unpredictability gives it high perplexity.

AI-generated text, on the other hand, often feels like a perfectly straight highway. It’s efficient and grammatically clean, but it tends to favor the most statistically probable word to follow the last one. This makes the language smooth but also highly predictable, resulting in low perplexity.

An AI detector sees this low perplexity as a major red flag. When a text is too perfect and predictable, it’s often a sign that a machine, not a human, was behind the wheel.
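To make the idea concrete, here is a rough sketch of the standard perplexity formula: the exponential of the average negative log-probability per word. The probability lists are invented for illustration. A real detector would get them from a language model, and we are not claiming any particular tool computes it exactly this way.

```python
import math


def perplexity(token_probabilities: list[float]) -> float:
    """Exponential of the average negative log-probability per token."""
    if not token_probabilities:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probabilities) / len(token_probabilities)
    return math.exp(avg_neg_log)


# Hypothetical probabilities a language model assigned to each word it saw.
predictable = [0.9, 0.8, 0.9, 0.85]  # every word was an easy guess
surprising = [0.2, 0.05, 0.3, 0.1]   # several words were unexpected

print(perplexity(predictable), perplexity(surprising))
```

The predictable list produces the lower perplexity, which is the pattern a detector would treat as more machine-like.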

Burstiness is all about the natural rhythm of human writing. We tend to mix short, punchy sentences with longer, more descriptive ones. This creates a dynamic “burst” of information, followed by a pause. That's high burstiness.

AI models often have a hard time replicating this organic flow. Their output can be unnervingly uniform, with sentences that are all roughly the same length and structure. This lack of variation is a dead giveaway—another clue that alerts the detector. You can dive deeper into these technical mechanics with our detailed guide on how AI detectors work.
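Burstiness has no single agreed-upon formula, so treat this as one possible sketch. It measures how much sentence length varies, using the coefficient of variation (standard deviation divided by mean). The function names and sample texts are our own assumptions.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, splitting on ., ! and ?."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (0 means perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The storm rolled in faster than anyone on the boat had expected. We ran."

print(burstiness(uniform), burstiness(varied))
```

The uniform sample scores zero because every sentence has four words, while the varied sample scores well above it.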

Weaving the Clues Together

An AI detector never relies on just one clue. It combines its analysis of perplexity, burstiness, word choice, and sentence structure to build a complete case. It then calculates a probability score, essentially saying, "Based on all the evidence, there's a 95% chance this was written by an AI."

Here’s a quick look at how it pieces everything together:

- Text Analysis: The tool breaks the text you submit into smaller chunks.

- Pattern Recognition: It scans each chunk for the statistical fingerprints of perplexity and burstiness.

- Vocabulary Check: It looks for common AI phrases or an overly formal, stilted tone.

- Scoring: Finally, it assigns a probability score based on how many AI-like traits it found.
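The four steps above can be sketched as a toy pipeline. Everything here is an assumption made for illustration: the stock phrases, the weights, and the logistic scoring are invented, and real detectors rely on trained models rather than hand-picked numbers.

```python
import math
import re
import statistics

# Hypothetical stock phrases and weights, chosen only for illustration.
STOCK_PHRASES = ("delve into", "in conclusion", "it is important to note")
WEIGHTS = {"bias": -1.0, "uniformity": 2.0, "stock_phrases": 1.5}


def split_into_chunks(text: str, sentences_per_chunk: int = 3) -> list[str]:
    """Step 1: break the text into chunks of a few sentences each."""
    sentences = [s.strip() for s in re.findall(r"[^.!?]+[.!?]*", text) if s.strip()]
    return [" ".join(sentences[i:i + sentences_per_chunk])
            for i in range(0, len(sentences), sentences_per_chunk)]


def uniformity(chunk: str) -> float:
    """Step 2: 1.0 when all sentences are equally long, lower as they vary."""
    lengths = [len(s.split()) for s in re.split(r"[.!?]+", chunk) if s.strip()]
    if len(lengths) < 2:
        return 0.5  # too little text to judge either way
    variation = statistics.pstdev(lengths) / statistics.mean(lengths)
    return 1.0 / (1.0 + variation)


def stock_phrase_hits(chunk: str) -> int:
    """Step 3: count occurrences of the (hypothetical) stock phrases."""
    lowered = chunk.lower()
    return sum(lowered.count(phrase) for phrase in STOCK_PHRASES)


def chunk_score(chunk: str) -> float:
    """Step 4: squash the weighted traits into a 0-1 probability."""
    z = (WEIGHTS["bias"]
         + WEIGHTS["uniformity"] * uniformity(chunk)
         + WEIGHTS["stock_phrases"] * stock_phrase_hits(chunk))
    return 1.0 / (1.0 + math.exp(-z))


def ai_probability(text: str) -> float:
    """Average the chunk scores into one overall probability."""
    chunks = split_into_chunks(text)
    if not chunks:
        raise ValueError("no text to score")
    return statistics.mean(chunk_score(c) for c in chunks)


plain = "Stop. The storm rolled in faster than anyone on the boat had expected. We ran."
stilted = ("It is important to note the results. In conclusion the data is clear. "
           "We delve into the details now.")

print(round(ai_probability(plain), 2), round(ai_probability(stilted), 2))
```

Even in this toy version, the output is a confidence level built from a handful of surface traits, not a finding about who actually wrote the text.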

This process is why you get a percentage instead of a simple yes or no. The detector is just presenting its level of confidence based on the digital fingerprints it found. As AI tools get better at generating different kinds of content, like an AI business plan generator, understanding how their output is analyzed becomes even more important.

Real-World Accuracy: So, Do These Tools Actually Work?

Talking about how detectors work in theory is one thing. Seeing how they perform out in the wild is another. This is where the stakes get incredibly high, especially in academia, where a single percentage point can change a student's future.

The academic world has become the primary battleground for AI detection. With students under immense pressure, many are turning to AI for a helping hand. This has pushed universities to adopt detection tools as their first line of defense against academic dishonesty.

And one of the biggest names in this space is Turnitin. It's a tool used by thousands of universities worldwide, making it the de facto standard for checking plagiarism and, now, AI-generated content.

The Turnitin Case Study

Turnitin is the perfect example of how these tools are being deployed. When they launched their AI detection feature, they did so with a bold claim: 98% accuracy. For a university administrator, that number sounds like a silver bullet. Almost foolproof.

But what does a 2% error rate actually look like when you apply it at scale?

Let’s do the math. Suppose a large university processes 100,000 papers a semester. If that 2% error shows up as false positives, meaning human-written papers flagged as AI, as many as 2,000 students could be wrongly accused of cheating. That’s the core dilemma here. A high accuracy claim on paper can quickly translate into a huge number of false accusations in the real world.
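Here is the same arithmetic as a small sketch you can adjust. The `human_share` parameter is our own assumption, added because only human-written papers can be falsely accused. The 90% scenario is hypothetical.

```python
def expected_false_accusations(papers: int, human_share: float,
                               false_positive_rate: float) -> float:
    """Expected number of human-written papers wrongly flagged as AI."""
    return papers * human_share * false_positive_rate


# Worst case from the text: every paper is human-written.
all_human = expected_false_accusations(100_000, 1.0, 0.02)
# Hypothetical scenario: 90% of papers are fully human-written.
mostly_human = expected_false_accusations(100_000, 0.9, 0.02)

print(round(all_human), round(mostly_human))
```

Either way the count lands in the thousands, which is the point: a small error rate still affects a lot of real students.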

The pressure is immense. Turnitin has reported that over 10% of student papers submitted globally now contain at least 20% AI-generated text. This surge puts enormous strain on these systems to be both sharp and fair. You can dive deeper into Turnitin's AI detection data over at bestcolleges.com.
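One way to combine these numbers is to ask how often a flag is actually right. The sketch below applies Bayes' rule under assumptions that are ours, not Turnitin's: it reads the 98% claim as both a 98% catch rate and a 2% false positive rate, and it uses 10% as the share of papers containing AI text.

```python
def positive_predictive_value(prevalence: float, sensitivity: float,
                              false_positive_rate: float) -> float:
    """Probability that a flagged paper really contains AI text."""
    true_flags = prevalence * sensitivity
    false_flags = (1 - prevalence) * false_positive_rate
    if true_flags + false_flags == 0:
        raise ValueError("the detector never flags anything under these inputs")
    return true_flags / (true_flags + false_flags)


# Assumed inputs: 10% of papers contain AI text, 98% caught, 2% false positives.
ppv = positive_predictive_value(prevalence=0.10, sensitivity=0.98,
                                false_positive_rate=0.02)
print(f"{ppv:.1%}")
```

Under these assumed inputs, roughly 84% of flagged papers would really contain AI text, so about one flag in six would land on a student who did nothing wrong.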

The Problem with a Single Score

The real issue is our tendency to rely on a single, automated score. Whether it comes from Turnitin, GPTZero, or another tool, a high "AI percentage" is often treated as a final verdict. Guilty. This completely removes human judgment from the equation, and that’s a dangerous path to go down.

A high AI score should be the beginning of a conversation, not the end of one. It’s a data point—a reason to look closer—but it is not irrefutable proof.

Relying on a score alone ignores all the crucial context. For example, a non-native English speaker might naturally write in a more structured, predictable way that just so happens to mimic AI patterns. Or what about a writer who uses a grammar checker or paraphrasing tool to refine their own work? They could easily trigger a false positive, too.

How Other Tools Perform Beyond Academia

While the university campus provides a high-stakes drama, this accuracy problem affects everyone, from content marketers to editors. The performance across different tools is all over the map, which makes it incredibly difficult to know who to trust.

A quick look at the 12 best AI detectors shows just how much they can disagree. One tool might flag a text as 90% AI, while another scores the same text at just 30% AI. That inconsistency comes down to the simple fact that every detector uses a slightly different algorithm and was trained on different data.

Here are a few common themes we see when these tools are put to the test:

- Model Specialization: Some detectors are fantastic at sniffing out text from a specific model, like GPT-4, but fall flat when faced with content from another, like Claude.

- The "Mixed" Content Blind Spot: Most tools get confused by text that’s a mix of human and AI. A few AI-generated sentences sprinkled into an otherwise human-written article can fly right under the radar.

- Evasion via Paraphrasing: AI-generated text that’s been run through a "humanizer" or paraphrasing tool is the ultimate challenge. It's exceptionally hard for most detectors to catch, which tanks their real-world accuracy.

So, do AI detectors actually work? The answer is complicated. They work well enough to be a helpful signal, but their accuracy is fragile. They should never be the sole basis for any major decision.

Why AI Detectors Falter in the Evasion Game

Even the most sophisticated AI detectors have an Achilles' heel. They're locked in a constant cat-and-mouse game where AI models get better, and human users get smarter about side-stepping detection. The second a detector learns to spot one tell-tale pattern, a new technique pops up to erase it.

This dynamic is exactly why you can't ever fully trust a detection score. It's like a master art forger who doesn't just copy a painting but perfectly mimics the artist's unique brushstrokes and signature style. At a certain point, the forgery becomes almost impossible to distinguish from the real thing.

It's the same with text. Simple edits, paraphrasing tools, and new writing techniques can effectively scrub the digital "fingerprints" that detectors are trained to find. This constant evolution is the core reason why answering "do AI detectors actually work?" is so complicated.

The Paraphrasing Problem

One of the easiest and most effective ways to fool a detector is paraphrasing. Just running AI-generated text through a "humanizer" or a quality paraphrasing tool is often enough to sail past even top-tier detectors.

These tools are built to rewrite text by changing sentence structures, swapping common words for less predictable synonyms, and mixing up sentence lengths. In doing so, they directly attack the core metrics detectors rely on, like perplexity and burstiness. The reworked text becomes less predictable and more rhythmically varied, making it appear far more human to an algorithm.

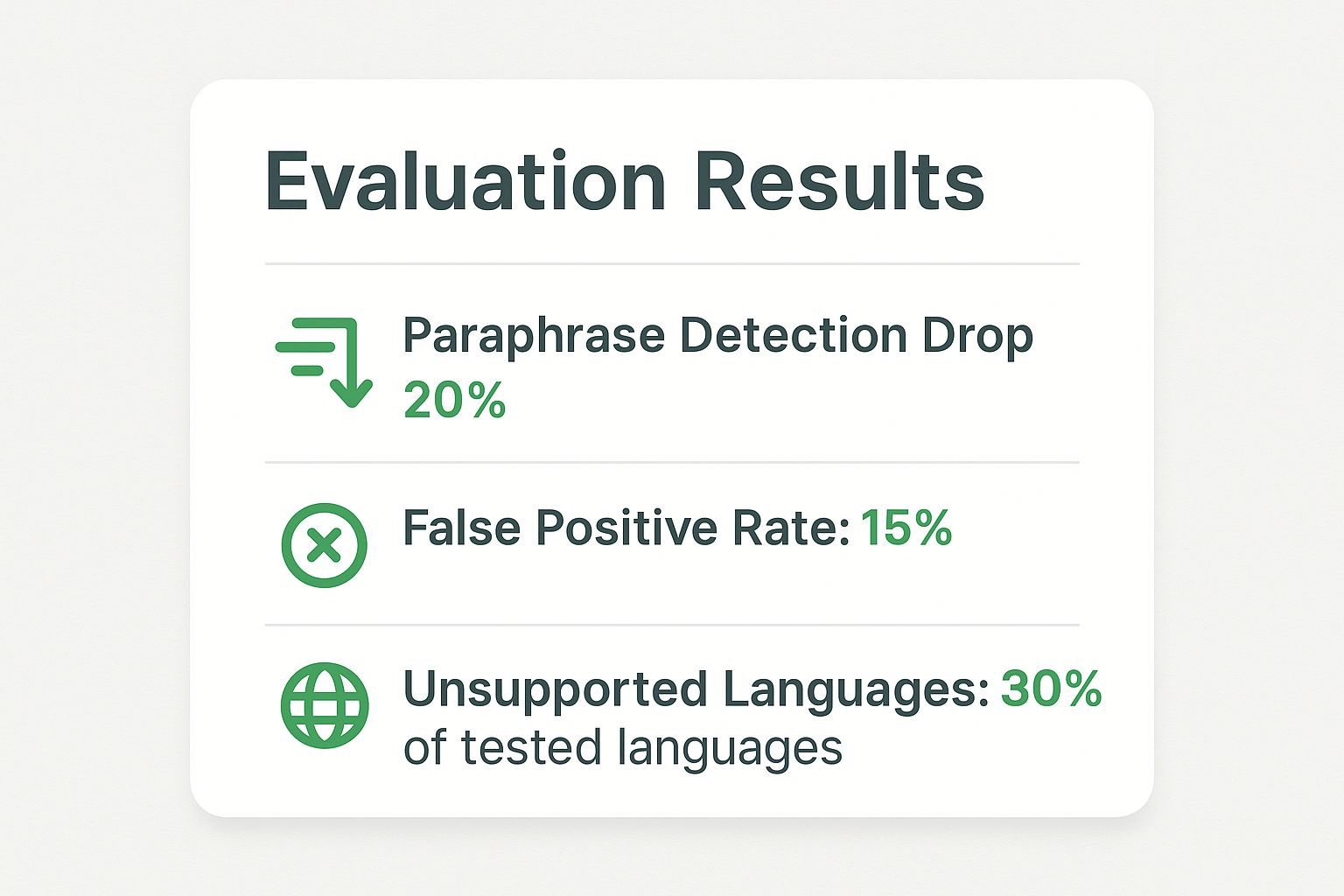

The impact here is huge. Research has shown time and again how running AI content through a paraphrasing model can completely tank detection accuracy. One study found this single step slashed the effectiveness of leading detectors by an average of 54.83%.

And as the world of AI-generated content moves forward, concepts like Generative Engine Optimisation introduce even more wrinkles, making the detection landscape that much harder to navigate.

The High Cost of False Positives

Maybe the most dangerous flaw in AI detection is the false positive—when a detector wrongly flags human-written text as AI-generated. This isn't some rare glitch; it's a persistent problem with serious, real-world consequences, especially in schools and workplaces.

A false positive can lead to a student being wrongly accused of cheating or a professional writer's credibility being unfairly damaged. This happens because some human writing styles just happen to mimic the patterns AI detectors look for.

A non-native English speaker, for instance, might naturally use simpler sentence structures and more common vocabulary. To an AI detector that lacks any real-world context, these perfectly normal writing habits can look like signs of AI generation.

This is a massive blind spot.

Between paraphrasing tricks, false positives, and language limitations, these tools are working with some significant disadvantages.

Common Reasons AI Detectors Fail

Beyond the big two—paraphrasing and false positives—several other issues chip away at accuracy. These weak spots are a good reminder of why a detector's score should be treated as a clue, not as definitive proof.

Here’s a quick breakdown of the most common reasons these tools get it wrong.

| Weakness | Description | Impact on Accuracy |
|---|---|---|
| Mixed Human-AI Content | Algorithms struggle to classify text that blends human and AI writing, like an AI-generated draft that's been heavily edited by a person. | High |
| Outdated Training Data | AI models evolve constantly. A detector trained on GPT-3.5 outputs will be less effective at spotting text from newer models like GPT-4o or Claude 3. | High |
| Lack of Context | Detectors analyze text in a vacuum. They don't understand the author's unique voice, intent, or the specific demands of the writing task. | High |

Ultimately, these vulnerabilities mean that while AI detectors can offer a signal, they are far from infallible. Their susceptibility to evasion and their tendency to misjudge human writing make them unreliable as a sole judge of authenticity.

Using AI Detection Tools Responsibly

The first step is accepting that AI detectors can—and do—fail. The next is learning how to use them without causing real-world harm. The core message is simple: an AI detector is a guide, not a judge. It’s just one data point, much like a single diagnostic test from a doctor's office.

Think of it this way: no doctor would make a life-altering diagnosis based on one blood test alone. They bring in their own expertise, consider the patient’s history, and look at the whole picture. A high AI score should be the start of a conversation, not the end of one.

Relying entirely on an automated score strips away critical human judgment and context. It steamrolls the nuances of different writing styles, ignores the very real possibility of false positives, and forgets these tools are deeply imperfect. So, how can educators, editors, and managers use them the right way?

A Framework for Ethical Use

The key is building a workflow that puts fairness and critical thinking first. Blindly trusting a percentage is where everything goes wrong. Instead, treat a flagged piece of content as a signal to look closer, not as proof of guilt.

This approach lets you maintain standards without making false accusations. When you see a high AI score, it’s time to put on your detective hat and gather more evidence.

Here’s a practical, step-by-step process:

- Cross-Reference with Multiple Tools: Never, ever trust a single detector. Run the text through two or three different tools. If one screams 98% AI and another shrugs with 20% AI, that glaring inconsistency tells you more about the tools' reliability than the text itself.

- Consider the Context: Ask the important questions. Is the writer a non-native English speaker whose prose might naturally be more structured? Did the assignment require a formal, fact-heavy tone that can easily mimic AI? Context is everything.

- Analyze the Content Manually: Just read the text. Does it have a genuine voice or a unique point of view? Are there weird phrases or clunky, repetitive sentences? Your own expertise is still the best detection tool you have.
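If you want to make the cross-referencing step a habit, a tiny helper like this can flag disagreement for you. The tool names, scores, and the 0.3 spread threshold are all hypothetical. Pick whatever cutoff suits your own review process.

```python
def compare_detectors(scores: dict[str, float], max_spread: float = 0.3) -> str:
    """Label a set of AI-probability scores (0.0 to 1.0) from different tools."""
    if len(scores) < 2:
        raise ValueError("cross-referencing needs at least two detectors")
    spread = max(scores.values()) - min(scores.values())
    if spread > max_spread:
        return "inconclusive: detectors disagree, rely on manual review"
    return "consistent: still review manually before acting"


# Hypothetical scores for the same text from two made-up tools.
print(compare_detectors({"tool_a": 0.98, "tool_b": 0.20}))
```

Notice that neither outcome skips the human review. Agreement between tools only tells you they share the same view, not that the view is correct.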

A high AI probability score isn't an accusation; it's an observation. It just means the text shares statistical patterns with AI-generated content. This should spark curiosity, not condemnation.

Prioritizing Human Judgment

Ultimately, the most important part of this process is you. No algorithm can replace human intuition, empathy, or contextual understanding. Before you make any decision based on a detector’s score, always put a human-led review front and center.

For a teacher, that might mean talking with the student and asking to see their outlines or draft history. For an editor, it could be a simple conversation with the writer about their process. This human-centric approach is the only fair way to navigate the messy reality of AI detection.

By treating these tools as assistants instead of arbiters, you can tap into their strengths without falling for their very significant weaknesses.

So, What's the Final Verdict on AI Detectors?

After digging into how these tools work, seeing how they perform in the real world, and pointing out their biggest flaws, we can finally tackle the big question: do AI detectors actually work?

The answer is a frustrating but honest sometimes.

While the technology is interesting and some tools are surprisingly good at sniffing out robotic text, the industry as a whole is a mess of inconsistency. This isn't a small problem—it creates a high-stakes environment where a tool's flawed judgment can have serious consequences for students, writers, and professionals.

The verdict isn't a simple "yes" or "no." It's a wake-up call to be smarter and more critical about how we use these tools. The only way forward is to understand what they can do and, more importantly, what they can't.

The Key Takeaways

The performance of AI detectors is a mixed bag, defined by a few core truths. Simple tricks can still fool them, false positives are a constant threat, and no score should ever be treated as gospel. For a deeper dive, our comprehensive guide on whether AI detectors actually work breaks it all down.

Here’s what you absolutely need to remember:

- Accuracy Is All Over the Place: As studies have shown, performance swings wildly. A tool might be nearly perfect one moment and completely useless the next, depending on what it’s analyzing.

- Evasion Is Way Too Easy: A quick run through a paraphrasing tool or a few manual tweaks is often all it takes to trick even the most "advanced" detectors.

- False Positives Are a Real Danger: Human writing gets flagged all the time. This is especially true for non-native English speakers, whose writing styles can be unfairly misidentified as AI-generated.

The most important thing to take away from all this is that human oversight is non-negotiable. An AI detector should only ever be a first-pass tool. Think of it as a signal to look closer, not as the final judge.

At the end of the day, these tools are not arbiters of truth. They're just probability calculators that look for statistical patterns. Their results mean nothing without human context and critical thinking.

A high AI score isn't an accusation; it's just an observation that requires a real person to investigate. Relying on an AI detector as the sole source of authority isn't just irresponsible—it's a recipe for disaster.

Common Questions (and Honest Answers) About AI Detectors

Even after you understand the tech, a lot of practical questions pop up. It’s one thing to know how they work, but what does that mean for you? Let's tackle the big ones.

Can AI Detectors Be 100 Percent Accurate?

The short answer? Nope.

Getting to 100% accuracy just isn't possible right now. The whole goal of an AI model is to sound human, and they're trained on mountains of human-written text to get there. They're designed to be indistinguishable.

Because of this, there's always going to be an overlap that makes a perfect call impossible. Plus, a few simple tweaks—like a quick paraphrase or some manual edits—can throw even the sharpest algorithms for a loop.

An AI detector score is just a probability, not a verdict. A 95% AI score doesn't mean it's proven to be AI. It just means the text has statistical patterns that look a lot like a machine's. Think of it as an educated guess, not a smoking gun.

Are Paid AI Detectors Better Than Free Ones?

You’d think so, but not really. It’s easy to assume that a paid tool must be better, but we’ve seen plenty of independent tests where free tools actually outperform the expensive ones.

The whole field is moving incredibly fast. A detector that was top-of-the-line six months ago could be completely obsolete today, easily fooled by the latest AI models.

Instead of letting price guide you, look for recent, unbiased reviews and run your own tests. Don’t fall into the trap of thinking a subscription guarantees better results.

What Should I Do If I'm Falsely Accused by an AI Detector?

First, don't panic. An AI detector score isn't hard evidence, and a growing number of schools and companies know these tools are flawed. If you find yourself in this situation, the best thing you can do is calmly show your work.

Here’s how you can prove your writing is yours:

- Show Your Edit History: Pop open the version history in Google Docs or Microsoft Word. It's the perfect way to show how your document grew from a blank page to a finished piece.

- Provide Your Research Trail: Share your outlines, messy first drafts, browser history, or the articles you used for research. This creates a clear paper trail of your effort.

- Talk About False Positives: Ask to see the report and start a conversation. You can politely bring up the well-known problem of false positives, especially for writers who aren't native English speakers or anyone with a more formal, academic style.

At Natural Write, we get how frustrating it is to have your authentic work flagged. Our tools are built to help you refine your writing so it sounds clear and natural, making sure your own voice comes through every time. Humanize your text and move past AI detectors with confidence at https://naturalwrite.com.