Are AI Detectors Accurate? The Real Answer

October 29, 2025

So, are AI detectors accurate? The honest answer is… it’s complicated.

It's not a simple yes or no. These tools can be pretty good at spotting text that's 100% AI-generated, but their reliability often takes a nosedive in real-world situations. Think of them like a weather forecast—they're great at predicting a major hurricane but might mistake a few dark clouds for a downpour, raising a false alarm.

The Complicated Truth About AI Detector Accuracy

When we ask if AI detectors are accurate, what we're really asking is if they can consistently tell the difference between something a human wrote and something a machine spit out. Many popular tools boast accuracy rates of 98% or higher, but that number usually comes from tests in a sterile, lab-like environment. In those tests, the software is only looking at pure, unedited AI text.

The real world is a lot messier. The moment a person touches that AI text, whether by fixing a sentence, adding a bit of personal flair, or moving a paragraph, the detector's job gets much harder. And that’s where those shiny accuracy claims start to fall apart.

To give you a clearer picture, here's a quick breakdown of what impacts their performance.

AI Detector Accuracy at a Glance

| Factor | Detector Reliability | General Performance Range |
|---|---|---|
| Pure AI Content | High | Often 95%-99% in ideal conditions |
| Edited AI Content | Moderate to Low | Highly variable; drops significantly with edits |
| Human Content | High (risk of false positives) | False positive rates can be 1%-10% or higher |
| Mixed Content | Low | Very difficult for detectors to parse |
| AI Model Used | Variable | More advanced models are harder to detect |

Essentially, the more human involvement, the less reliable the detector. Now let's dig into why that is.

Why Accuracy Is Not a Simple Metric

An AI detector’s performance isn't a single, fixed number. It's a moving target influenced by several factors that can completely change the result. To really get it, you have to understand the difference between false positives and false negatives.

- False Positives: This is when a detector wrongly flags human-written text as AI-generated. This is the most dangerous kind of mistake, because it can lead to unfair accusations against writers, students, and professionals.

- False Negatives: This happens when a detector misses AI-generated content, letting it slide by as human. While this undermines the tool’s purpose, it’s generally less harmful than a false positive.

The real challenge is striking a delicate balance between catching AI writing and not falsely accusing innocent people. Think about it: even a low false positive rate of 1% could mean thousands of incorrect flags at a large university processing hundreds of thousands of papers. This context is everything when you see a tool claiming near-perfect accuracy.
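To see why a single "accuracy" number can hide both kinds of error, here's a minimal Python sketch. The counts are made up for illustration (they don't come from any real detector), and the names `DetectorResults` and `rates` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DetectorResults:
    """Counts from running a detector on texts whose true origin is known."""
    ai_flagged: int      # AI text correctly flagged (true positives)
    ai_missed: int       # AI text passed as human (false negatives)
    human_flagged: int   # human text wrongly flagged (false positives)
    human_passed: int    # human text correctly passed (true negatives)


def rates(r: DetectorResults) -> dict[str, float]:
    """Return overall accuracy alongside the two error rates."""
    total = r.ai_flagged + r.ai_missed + r.human_flagged + r.human_passed
    return {
        "accuracy": (r.ai_flagged + r.human_passed) / total,
        "false_positive_rate": r.human_flagged / (r.human_flagged + r.human_passed),
        "false_negative_rate": r.ai_missed / (r.ai_flagged + r.ai_missed),
    }


# Made-up test set: 100 AI texts and 900 human texts.
example = DetectorResults(ai_flagged=70, ai_missed=30, human_flagged=9, human_passed=891)

for name, value in rates(example).items():
    print(f"{name}: {value:.1%}")
```

In this invented example the detector scores 96.1% accuracy while still missing 30% of the AI text, which is why the headline figure alone tells you very little.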

An AI detector's result should be treated as an initial data point, not as conclusive proof. Its primary value is in starting a conversation about content origin, not ending one with an accusation.

The Problem with Predictability

At their core, AI detectors work by spotting patterns. Large Language Models (LLMs) are trained to be predictable; they're designed to favor statistically likely next words. This creates text with low "perplexity," a measure of how surprising each word choice is to a language model.

Human writing is the opposite. It’s full of quirks, strange phrasing, and unexpected word choices. It has high perplexity.

Detectors are trained to sniff out that low-perplexity signature of AI. But as AI models get more sophisticated, they get much better at mimicking human creativity, making their patterns harder to spot. Even early on, the tools struggled: OpenAI's own text classifier correctly identified only 26% of AI-written text, and the company withdrew it in mid-2023.

It’s a constant cat-and-mouse game. What's detectable today might be invisible tomorrow. Ultimately, relying on these tools without understanding their limits is a recipe for flawed judgments and misplaced trust.

How AI Detectors Actually Work

To really answer the question "are AI detectors accurate," we need to pop the hood and see what they’re actually looking for. At its core, AI-generated text is built on a foundation of predictability. Large Language Models (LLMs) are trained to pick the most statistically likely next word, which creates sentences that are smooth and logical but often miss that certain human spark.

Think of it like this: human writing is a lot like a jazz improvisation. It’s filled with unique phrasing, unexpected rhythms, and personal quirks that make it feel alive. AI writing, on the other hand, is more like a perfectly rehearsed classical piece—technically flawless, but it follows a predictable, structured pattern. The AI detector, then, is like a "music critic" trained to spot the difference between the spontaneous solo and the rehearsed concerto.

Spotting Statistical Fingerprints

The main trick these detectors use is analyzing perplexity and burstiness. These sound more complicated than they are.

Perplexity measures how unpredictable a text is to a language model: the more often the next word comes as a surprise, the higher the score. Human writing tends to have high perplexity because our word choices can be surprising and varied. AI writing, which is designed for coherence, naturally has low perplexity.

Burstiness is all about the rhythm of your sentences. Humans tend to write in bursts, mixing long, winding sentences with short, punchy ones. AI models often produce text with a more uniform sentence length, creating a rhythm that feels just a little too even.

So, an AI detector scans text looking for these statistical fingerprints:

- Low Perplexity: The words follow highly predictable patterns.

- Low Burstiness: The sentence lengths are too consistent and lack natural variation.

Essentially, the detector is asking, "Does this text feel too perfect? Too predictable?" If the answer is yes, it gets flagged. You can dive deeper into these mechanics in our guide on how an AI detector works.
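To make those two signals concrete, here's a rough Python sketch. Treat it as a toy, not as how any commercial detector works: real tools estimate perplexity with a large language model, while this version assumes a simple word-frequency (unigram) model built from a reference text you supply. The function names `burstiness` and `unigram_perplexity` are made up for illustration.

```python
import math
import re
import statistics
from collections import Counter


def words(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths, measured in words."""
    sentences = re.split(r"[.!?]+", text)
    lengths = [n for n in (len(words(s)) for s in sentences) if n > 0]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a unigram model fit on `reference`.

    Add-one smoothing reserves one extra slot for unseen words so that
    they never get zero probability.
    """
    counts = Counter(words(reference))
    vocab_size = len(counts) + 1
    total = sum(counts.values())
    tokens = words(text)
    if not tokens:
        raise ValueError("text has no words")
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab_size)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


even = "The cat sat on the mat. The dog sat on the rug. The bird sat on the branch."
varied = "Stop. The cat, having considered every option available to it that morning, sat on the mat. Why?"
print("burstiness (even):  ", round(burstiness(even), 2))
print("burstiness (varied):", round(burstiness(varied), 2))

reference = "the cat sat on the mat"
print("perplexity (familiar words):  ", round(unigram_perplexity("the cat sat", reference), 2))
print("perplexity (unfamiliar words):", round(unigram_perplexity("quantum zebra waltz", reference), 2))
```

The evenly paced sample scores zero on burstiness because every sentence has six words, while the varied sample scores well above it. Likewise, words the reference model has already seen produce a lower perplexity than words it has never seen.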

The Challenge of Real-World Performance

While these methods sound solid in theory, real-world performance is a whole different story. The explosion of AI writing tools led to a gold rush of detection software, but the results have been shaky at best.

Take Turnitin, for example. Between 2023 and 2024, they reviewed around 200 million papers and found that 11% contained significant AI content. They claimed a false positive rate of less than 1%, but independent analysis suggested it was actually closer to 4% at the sentence level. That’s a big difference when a student’s grade is on the line.

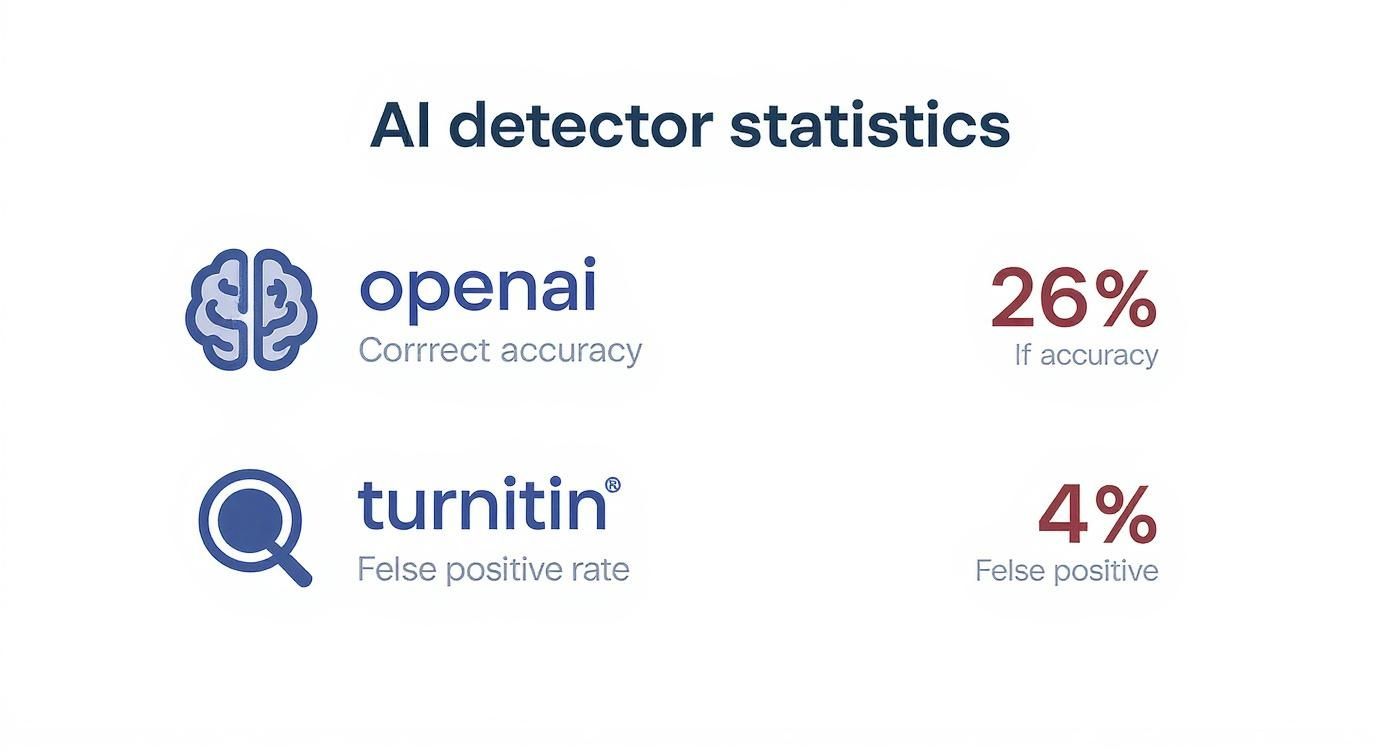

Even the creators of the AI models have stumbled. OpenAI’s own detector was a perfect example—it only identified 26% of AI-written text correctly, while wrongly flagging 9% of human writing as AI. Its performance was so underwhelming that they pulled the plug on the tool in mid-2023.

Here's a look at the data from OpenAI’s original blog post, showing just how often their classifier got it wrong.

The data shows that even when the tool was "very confident," it still misidentified a huge chunk of both AI and human writing. It really highlights how tough this whole detection game is.

Linguistic Analysis and Watermarking

Beyond just looking at stats, some detectors use linguistic analysis to search for other clues. This involves spotting overly formal tones, a lack of personal stories or idioms, and the repetitive use of certain "AI-favorite" words and phrases. It's a more nuanced approach, but it still has a hard time with advanced AI models that are getting much better at mimicking human style.

The key takeaway here is that no single detection method is foolproof. The best systems try to combine multiple techniques, but even they can be tricked by a few simple human edits or a more advanced AI.

Looking at other fields can give us some perspective. For instance, the process of distinguishing between monitoring and listening approaches in social media analytics is a useful parallel. Monitoring simply tracks keywords (like a basic detector), while listening analyzes the deeper context and sentiment (like a more advanced linguistic analysis). Both aim to interpret text, but their depth and accuracy are miles apart.

Finally, researchers are looking into watermarking. This is a method where AI models would embed a subtle, invisible signal into the text they create. It would make detection far more reliable, but it requires all AI developers to get on board, and that just hasn't happened yet. Until it does, detectors will be stuck playing catch-up, trying to spot the clues left behind in the writing itself.

Putting Detector Accuracy Claims to the Test

AI detector companies love to advertise 98% or 99% accuracy. It sounds great on a sales page, but those numbers almost always come from sterile lab environments, testing pure, unedited AI-generated text. That’s a scenario you’ll almost never find in the real world.

The second a human touches that text—to edit, rephrase, or add their own ideas—the game completely changes. When we ask, "are AI detectors accurate," we're really asking how they handle two major errors: false positives (flagging human work as AI) and false negatives (letting AI work slide by). The real answer lies far beyond the advertised claims.

False Positives: The Real Danger

Of the two errors, the false positive is by far the more dangerous. An incorrect accusation of AI use can have serious fallout, especially for students and professional writers. A student might face an academic integrity board, or a writer could lose a client’s trust over one flawed scan.

Even a "low" false positive rate becomes a huge problem at scale. Imagine a university that runs 100,000 papers through a detector with a 1% false positive rate. That’s 1,000 students wrongly flagged. This not only creates an enormous headache for faculty who have to investigate each case but also causes incredible stress for the people who are wrongly accused.
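The arithmetic behind that example is easy to check, and a short Python sketch makes it simple to try other scenarios. The function name `flag_breakdown` and its parameters are hypothetical. The first call reproduces the 100,000-paper example and assumes every paper is human-written; the second borrows the 11% AI share and 15% miss rate quoted elsewhere in this article purely as illustrative inputs.

```python
def flag_breakdown(papers: int, ai_share: float, fpr: float, miss_rate: float) -> dict[str, float]:
    """Estimate how many flags are wrong for a given mix of papers.

    papers    -- total papers scanned
    ai_share  -- fraction of papers that really contain AI text
    fpr       -- false positive rate on human-written papers
    miss_rate -- false negative rate on AI-written papers
    """
    ai_papers = papers * ai_share
    human_papers = papers - ai_papers
    false_flags = human_papers * fpr
    true_flags = ai_papers * (1 - miss_rate)
    total_flags = false_flags + true_flags
    return {
        "false_flags": false_flags,
        "true_flags": true_flags,
        "share_of_flags_that_are_wrong": false_flags / total_flags if total_flags else 0.0,
    }


# Scenario from the text: 100,000 human-written papers, 1% false positive rate.
all_human = flag_breakdown(papers=100_000, ai_share=0.0, fpr=0.01, miss_rate=0.0)
print(round(all_human["false_flags"]))  # 1000 students wrongly flagged

# Illustrative mix: 11% of papers contain AI text, 15% of those are missed.
mixed = flag_breakdown(papers=100_000, ai_share=0.11, fpr=0.01, miss_rate=0.15)
print(f"{mixed['share_of_flags_that_are_wrong']:.1%} of flags point at human work")
```

Under those assumed inputs, a meaningful slice of every batch of flags lands on people who did nothing wrong, which is why each flag still needs a human review.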

A detector's score should never be the final verdict. Think of it as a single clue in a much larger investigation, not a definitive judgment on who wrote something.

Claimed vs. Observed Accuracy of Popular AI Detectors

The gap between what AI detectors claim and what they actually deliver is often massive. Independent studies consistently find that the near-perfect accuracy from marketing materials falls apart under real-world stress, especially with edited text or content from newer AI models.

The table below compares the advertised accuracy rates of some popular tools with their observed performance in independent testing, highlighting the disconnect.

| AI Detector | Advertised Accuracy | Observed False Positive/Negative Rates | Best Use Case |
|---|---|---|---|
| Turnitin | 98% accuracy | Reports of up to a 15% miss rate (false negatives); historical issues with false positives. | Academic integrity checks, as a preliminary indicator. |
| Originality.AI | 99% on GPT-4 | Independent tests show a higher false positive rate, especially on human-edited content. | SEO content agencies checking for unedited AI drafts. |
| GPTZero | Claims to be the "most accurate" | Can be effective but struggles with heavily paraphrased or mixed human-AI text. | Quick, initial scans for educators or casual users. |
| OpenAI's Classifier (discontinued) | N/A | Correctly identified only 26% of AI-written text; wrongly flagged 9% of human writing as AI. | Discontinued due to poor performance. |

This data shows that even the biggest names in the industry struggle to deliver consistent, reliable results. You just can't take their marketing claims at face value.

Let's dig into one of the biggest players. Turnitin, a staple in academic integrity, launched its AI detector with a stated 98% accuracy. Yet, the tool has a documented 15% miss rate, meaning roughly one in seven AI-generated papers could slip through. It has also famously struggled with false positives, once even flagging the U.S. Constitution as AI-generated. You can read more about the challenges universities face with these tools in this deep dive into AI detection accuracy.

This infographic gives a clear visual of the problem, comparing the claims of Turnitin and OpenAI's original (and now defunct) detector to their actual performance.

The data is pretty clear: even the industry leaders have a long way to go. OpenAI’s tool was so unreliable they pulled it from the market entirely.

Why Do Free Detectors Often Fall Short?

The internet is flooded with free tools promising to spot AI-written content. While they can be handy for a quick check, they often come with an even higher risk of being wrong.

Here's why:

- Simpler Algorithms: Free detectors usually run on less sophisticated models, making them much easier for modern AI to fool.

- Slower Updates: They often lag behind in keeping up with the newest AI models like GPT-4 and its successors.

- Higher Error Rates: Study after study shows free tools produce more false positives and false negatives than paid options.

For casual use, a free tool is probably fine. But if a decision has real stakes, relying on one is a bad idea. If you want to see how different options stack up, you can check out our guide on free online AI detection tools.

The main takeaway is simple: no AI detector is perfect. Always treat their results with a healthy dose of skepticism.

The Unfair Disadvantage for Non-Native English Writers

One of the biggest and most troubling blind spots in AI detection is the bias against non-native English speakers. These tools learn what "human writing" looks like by analyzing massive datasets, but that data is almost always dominated by native English speakers.

This creates a very specific—and very flawed—model of what sounds natural. The algorithm learns a baseline for sentence structure, complexity, and word choice that can unintentionally penalize anyone who writes differently.

This problem circles right back to the idea of perplexity. Remember, AI detectors flag text with low perplexity, meaning it's predictable. Unfortunately, someone learning English often uses simpler sentences or more common phrases. It’s a natural part of the learning process, not a sign of a robot.

The result? Their completely human work gets tagged as AI-generated, creating a wave of false positives for an entire group of people. This isn’t just a technical hiccup; it’s a serious ethical problem with real consequences.

Why Linguistic Background Matters So Much

The way we build sentences is deeply shaped by our native tongue's grammar and rhythm. For a non-native writer, these patterns can carry over into their English, creating a unique style that an algorithm—trained only on standard English—might see as strange or too simple.

Think about some common traits you might see:

- More structured sentences: Following grammar rules more strictly than a native speaker might.

- Common vocabulary: Sticking to familiar words instead of obscure synonyms.

- Simpler phrasing: Avoiding complex idioms or slang that could cause confusion.

An AI detector doesn't get this context. All it sees is text that strays from its "normal" human baseline and shows less randomness than it expects. That's enough to trigger a red flag. This can lead to unfair accusations in school or at work, punishing people for their background instead of celebrating their effort.

The core issue is that many AI detectors equate "different" with "artificial." For a non-native English writer, their unique linguistic fingerprint can be misinterpreted as the predictable signature of a machine, leading to profoundly unfair outcomes.

The Data Shows a Clear Bias

The numbers paint a pretty stark picture. While some tools are getting better, many still show a major bias. One important study looked at how AI detectors handled texts from non-native English writers and found a disturbing trend. Some detectors had a false positive rate as high as 5.04%. In a large university, that could mean thousands of students being wrongly accused of using AI every year.

But the same research also showed that better performance is possible. For example, Copyleaks' AI Detector managed a combined accuracy of 99.84% across three different non-native English datasets, flagging only 12 texts incorrectly out of 7,482. This pushed its false positive rate below 1.0%, proving that with the right training, this bias can be seriously reduced. You can check out the full study about AI detection accuracy for non-native English speakers on copyleaks.com.
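Those two figures line up, and the check takes a few lines of Python. This sketch assumes all 7,482 texts were human-written, so every incorrect flag counts as a false positive; the variable names are just for illustration.

```python
misclassified = 12
total_texts = 7_482

error_rate = misclassified / total_texts
accuracy = 1 - error_rate

print(f"error rate: {error_rate:.2%}")  # 0.16%
print(f"accuracy:   {accuracy:.2%}")    # 99.84%
```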

This gap highlights a critical point: not all detectors are the same. When you ask, "are AI detectors accurate," the answer really depends on who is writing. For non-native English speakers, the risk of being falsely accused is still unacceptably high with many tools out there. It’s a systemic problem that demands more honesty from developers and a much more careful, human-first approach from anyone using these tools to make important decisions.

How to Use AI Detection Tools Responsibly

https://www.youtube.com/embed/LDEBs9Qw1aU

Given their clear limitations, you might be wondering if AI detectors are even worth the trouble. The answer is yes—but only if you approach them with a healthy dose of skepticism and a clear understanding of what they can and can’t do.

Thinking of these tools as foolproof lie detectors is a recipe for disaster.

The single most important rule is to treat a detector's score as an initial data point, not as the final word. Its job is to start a conversation about where the content came from, not to end one with a definitive accusation. When a tool flags a piece of text, it's just a signal to look closer. Nothing more.

Leaning too heavily on a single score can lead to false accusations, destroy trust, and create a ton of stress for writers and students. The goal is to use these tools smartly, as one small piece of a much larger puzzle.

A Framework for Responsible Use

Before you take any action based on an AI detector's score, you need a better approach. A high "AI probability" score doesn't automatically mean someone cheated or tried to deceive you. It just means the writing contains patterns that the tool’s algorithm happens to associate with machine-generated text.

Here’s a practical framework to guide your investigation and keep things fair:

- Never Rely on a Single Detector: This is a big one. Different tools use different algorithms, and they can spit out wildly different results for the exact same text. Always run the content through at least two or three reputable detectors to see if you get a consensus. A flag from one tool is just noise; a flag from several is a signal worth a second look.

- Consider the Author's Context: Is the writer a non-native English speaker? Are they new to the subject and using simpler language? As we’ve seen, factors like these can send the risk of a false positive through the roof. Always think about the writer’s background before jumping to conclusions.

This simple cross-check helps filter out the noise and gives you a much more balanced perspective.
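Here's one way that cross-check could look in Python. It's only a sketch: the detector names, the 0.8 threshold, and the `consensus` function are all hypothetical, and real tools report scores on different scales that you'd need to normalize first. Notice that the function never returns a verdict, only a suggestion about whether to look closer.

```python
def consensus(scores: dict[str, float], threshold: float = 0.8, min_agree: int = 2) -> str:
    """Summarize AI-probability scores (0.0 to 1.0) from several detectors."""
    if len(scores) < 2:
        return "inconclusive: run at least two detectors"
    flagged = sorted(name for name, score in scores.items() if score >= threshold)
    if len(flagged) >= min_agree:
        return "look closer: " + ", ".join(flagged) + " agree"
    if flagged:
        return "treat as noise: only " + flagged[0] + " flagged it"
    return "no flag"


# Hypothetical scores for the same text from three made-up detectors.
print(consensus({"detector_a": 0.95, "detector_b": 0.10, "detector_c": 0.20}))
print(consensus({"detector_a": 0.95, "detector_b": 0.90, "detector_c": 0.20}))
```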

Beyond the Score

Once you have the initial data, the real work begins. The score itself is just a starting point. A responsible evaluation means looking for the human indicators of content origin—the stuff a machine can't easily fake.

The ultimate goal is to empower users to use these tools intelligently—as a way to start a conversation about content origin, not to end one with an accusation. This nuanced approach helps mitigate the risks while still leveraging the potential benefits of detection technology.

Here are a few actionable steps to take after getting a high AI score:

- Look for Other Telltale Signs: AI-generated text often lacks personal anecdotes, unique opinions, or specific, niche examples. Does the writing feel generic? Is it missing a distinct voice or personality? The absence of a human touch can be a more useful indicator than a percentage score.

- Check for Factual Inaccuracies: AI models are notorious for "hallucinating," which means making up facts, sources, and even quotes. A quick fact-check on a few of the claims can often reveal if the content was created without a human in the loop.

- Initiate a Conversation: If you're an educator or a manager, the best next step is to simply talk to the writer. Ask them about their writing process, their sources, or how they came to understand the topic. A five-minute conversation will usually clarify the situation more effectively than any piece of software could.

By following this framework, you can use AI detectors as they were intended: as assistive tools that support, rather than replace, human judgment. The question isn't just "are AI detectors accurate?" but "how accurately and responsibly are we interpreting their results?"

Got Questions About AI Detector Accuracy?

Even after a deep dive, you probably still have some specific questions. Let's tackle the most common ones people ask when they're trying to figure out if these tools are legit and what the results actually mean.

Which AI Detector Is the Most Accurate?

Here’s the thing: there isn’t one single “best” detector that outperforms all others, all the time. A tool's real-world accuracy is tied directly to what kind of text you’re feeding it and what data it was trained on.

Some detectors are great at sniffing out lazy, unedited text from older models like GPT-3.5. Others are tuned more finely to catch the subtle fingerprints of sophisticated new AIs. The smartest move? Don't trust just one tool. Run your text through two or three different reputable detectors. If they all say the same thing, that’s a much stronger signal than one isolated flag.

Can AI Detectors Spot Human-Edited Text?

This is where it gets messy. Whether a detector can catch human-edited AI text really depends on how much editing we're talking about.

- Light Edits: If you just fix a few typos or swap a handful of words, a good detector will probably still catch it. The underlying statistical patterns—the AI's "signature"—are still there.

- Heavy Edits: But if you do a major rewrite—restructuring sentences, changing the flow, and injecting your own voice—you can absolutely fool a detector. You're reintroducing the natural randomness (what experts call perplexity) that human writing has, effectively smudging the AI's original fingerprints.

When you heavily edit an AI-generated draft, it becomes a hybrid. It's not machine writing anymore, but it's not purely human either, making it a nightmare for any detector to classify with confidence.

Are Free AI Detectors Any Good?

Free tools are fine for a quick, casual check. But if the stakes are high, they usually don't cut it. Their algorithms are often simpler and aren't updated as religiously to keep up with the newest AI writers.

For anything important, like academic papers or professional publishing, relying solely on a free detector is a gamble. They tend to have more false positives (flagging your work as AI) and false negatives (missing obvious AI content). Think of them as a first-pass screener, not the final word.
