How Does an AI Detector Work? Insights Into the Technology

August 10, 2025

So, you've probably wondered: how does an AI detector actually work? It's less about magic and more about being a highly trained digital detective. At its heart, an AI detector uses sophisticated machine learning to spot the tell-tale patterns that separate human writing from text generated by a machine. These tools are designed to find the subtle "fingerprints" that AI models leave behind.

The Digital Detective: How AI Detectors Spot AI Writing

Think of a large language model like ChatGPT as an incredibly smart student, trained to predict the most logical next word in a sentence. While this makes it powerful, it often produces text that is almost too perfect and predictable. AI detectors are built specifically to notice this unnatural consistency.

The whole process hinges on machine learning and natural language processing (NLP) to tell the difference between human and AI content. A core part of this operation is the use of classifiers—algorithms trained on massive, labeled datasets of both human and AI-written text.

These classifiers study features like word frequencies, sentence length, and structural complexity to find the patterns that give the AI away. For example, a detector might flag a piece of writing for having unusually uniform sentence lengths, a common giveaway of machine-generated content.
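To make the idea of "features" concrete, here is a minimal sketch of the kind of measurements a detector might take. The function name `extract_features` and the specific features are assumptions chosen for illustration; real detectors use far richer signals, and the sentence splitting here is a rough heuristic.

```python
import re
from collections import Counter
from statistics import mean

def extract_features(text: str) -> dict:
    """Compute a few simple, illustrative features for a piece of text."""
    # Rough sentence split on ., ! and ? (a heuristic, not a real tokenizer).
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    # Number of words in each sentence.
    lengths = [len(re.findall(r"[a-z']+", s.lower())) for s in sentences]
    counts = Counter(words)
    return {
        "sentence_lengths": lengths,
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        # Share of distinct words: a crude measure of vocabulary variety.
        "type_token_ratio": len(counts) / len(words) if words else 0.0,
        "top_words": counts.most_common(3),
    }

features = extract_features("The cat sat. The dog ran. The bird flew.")
print(features["sentence_lengths"])
```

In this toy input every sentence has the same number of words, which is the kind of uniformity the paragraph above describes.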

Core Components of an AI Detector

To make a call, these digital detectives rely on a few key components working together. Let's break down the fundamental building blocks that enable these tools to analyze and classify text.

| Component | Function |
|---|---|
| Training Data | A massive library of text, with millions of examples of both human and AI-generated content. The quality and diversity of this data directly shape the detector's accuracy. |
| Feature Extraction | The detector breaks down a submitted text into measurable characteristics, analyzing things like sentence structure, word choice, and rhythmic patterns. |
| Classification Model | This is the engine. It compares the extracted features against the patterns it learned from the training data, ultimately calculating a probability score. |

When you think about it, this analytical approach isn't just for spotting AI writing. It’s similar to methods used in other online contexts, like identifying fake Instagram followers.

The goal isn't to find a single "gotcha" word but to analyze the entire tapestry of the text. A human writer's weave is often beautifully imperfect, while an AI's is typically flawless—and that very flawlessness is the clue.

This system of pattern recognition is why a detector provides a likelihood, such as "95% AI-generated," rather than a simple yes or no. It's all about probabilities.
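One common way to turn measurements into a likelihood is a logistic function, which squeezes any weighted sum into a value between 0 and 1. The sketch below assumes two hypothetical features and made-up weights; a real detector would learn its weights from training data and would use many more inputs.

```python
import math

# Hypothetical weights, invented for illustration only.
# Negative weights mean "more of this feature looks more human".
WEIGHTS = {"sentence_length_stdev": -0.4, "rare_word_ratio": -10.0}
BIAS = 2.0

def ai_probability(features: dict) -> float:
    """Map feature values to a probability between 0 and 1."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))

uniform_text = {"sentence_length_stdev": 1.0, "rare_word_ratio": 0.02}
varied_text = {"sentence_length_stdev": 8.0, "rare_word_ratio": 0.15}
print(f"uniform: {ai_probability(uniform_text):.0%}")
print(f"varied:  {ai_probability(varied_text):.0%}")
```

The output is a score rather than a label, which is why the same text can land anywhere between "almost certainly human" and "almost certainly AI".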

Training the AI: What Detectors Learn and Why It Matters

An AI detector’s real power comes from its training. This isn’t just a quick study session; it's a massive, ongoing effort where the model is fed millions of documents, each one clearly labeled "human" or "AI." The quality and diversity of this training data are everything.

Think of it like training a world-class sommelier. You wouldn't just have them taste Merlot from California. To build an expert palate, they need to sample a huge library of wines—different grapes, regions, and vintages from all over the world. That’s what allows them to spot the subtle notes that distinguish a French Bordeaux from an Italian Chianti.

It’s the same with an AI detector. It learns its craft by analyzing a vast collection of labeled texts. The more diverse and high-quality these texts are, the better the detector gets at picking up on the faint "flavors" of machine-generated content versus authentic human writing.
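As a rough illustration of learning from labeled examples, here is a tiny Naive Bayes classifier written from scratch. Naive Bayes is a stand-in chosen for brevity, not a claim about what any particular detector uses, and the four training sentences are invented; a real training set would contain millions of documents.

```python
import math
from collections import Counter

def train(samples):
    """Count words per label from (text, label) pairs."""
    word_counts = {}        # label -> Counter of words seen under that label
    doc_counts = Counter()  # label -> number of training documents
    for text, label in samples:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = {w for counter in word_counts.values() for w in counter}
    return word_counts, doc_counts, vocab

def predict_proba(model, text):
    """Return P(label | text) for each label, using add-one smoothing."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    log_scores = {}
    for label in doc_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)
        for w in text.lower().split():
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        log_scores[label] = score
    # Convert log scores to probabilities, subtracting the max for stability.
    top = max(log_scores.values())
    exp_scores = {k: math.exp(v - top) for k, v in log_scores.items()}
    norm = sum(exp_scores.values())
    return {k: v / norm for k, v in exp_scores.items()}

# Toy, hand-written training data (hypothetical).
SAMPLES = [
    ("honestly i kinda loved that weird little movie", "human"),
    ("ugh my train was late again so annoying", "human"),
    ("in conclusion it is important to note the key benefits", "ai"),
    ("furthermore it is important to consider the overall impact", "ai"),
]
model = train(SAMPLES)
print(predict_proba(model, "it is important to note the impact"))
```

With so little data the model only knows a handful of phrases, which is exactly why diverse training data matters.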

The Importance of Diverse Training Data

A detector trained only on content from an older model like GPT-3 would be completely lost trying to identify text from today's more advanced AIs. The patterns it was taught to spot are already outdated. This is why the best AI detectors are in a constant state of learning and retraining.

Their datasets have to include content from a wide range of sources:

- Various AI Models: Text generated by many different large language models (LLMs), because each one has its own unique style and quirks.

- Diverse Human Writing: Examples from every corner of the writing world, from technical manuals and academic papers to creative stories and casual blog posts.

This diversity is what stops the detector from developing a narrow "taste" and helps it avoid bias. It ensures the tool can recognize AI fingerprints across different contexts and writing styles, making it more reliable and less likely to make mistakes like false positives.

The core principle is simple: to catch an AI, you have to understand all AIs. A detector’s knowledge must be as broad and current as the technology it’s designed to monitor.

Building a Robust Detection Model

The training process is more than just collecting data; it's about building a solid statistical understanding. The effectiveness of AI detectors hinges on the quality and sheer size of their training datasets. Detectors trained on massive datasets—containing millions of samples from both AI and human sources—achieve higher accuracy rates, sometimes hitting over 90% in controlled tests. These datasets include text from different AI models and human writing styles to capture a broad spectrum of nuances. You can learn more about how these datasets improve AI detection accuracy on Coursera.

This continuous training cycle is what makes or breaks a detector. As AI writers evolve, so must the tools that spot them. It's a perpetual cat-and-mouse game where the detector's education never really ends. This commitment to ongoing learning is what separates a mediocre tool from a highly effective one that can actually keep up.

Decoding the Signals That Give AI Away

So, when an AI detector scans a piece of writing, what’s it actually looking for? It’s not some single, glaring mistake. Instead, it’s hunting for a whole constellation of subtle linguistic clues and statistical oddities that tend to separate machine-generated text from human writing. The system is essentially asking: does this feel natural, or is it a little too perfect?

Two of the most telling signals are perplexity and burstiness. They might sound technical, but they’re actually pretty intuitive ideas about how we communicate. Getting a feel for them is the key to understanding what’s really happening under the hood of an AI detector.

The Predictability Test: Perplexity

Imagine you're reading a story. If you can guess the next word almost every time, the text is predictable. That’s the core idea behind perplexity—it’s just a way to measure how surprised a language model is by the words in a sentence.

AI-generated text tends to have low perplexity. That’s because language models are trained to predict likely next words, and typical generation settings favor high-probability choices. Their writing follows common, logical, and often unsurprising paths. It's clean and usually grammatically perfect, but it can also feel a bit sterile and soulless.

On the other hand, human writing is full of wonderful little surprises. We use creative turns of phrase, odd metaphors, and unexpected word choices that an AI would never see coming. This creates a higher perplexity score—a strong hint that a human mind was behind the words.
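Perplexity can be computed as the exponential of the average negative log-probability a model assigns to each word. The sketch below uses a simple word-frequency (unigram) model as the scoring model, which is an assumption made to keep the example short; real detectors score text with a neural language model instead.

```python
import math
from collections import Counter

def unigram_model(corpus: str):
    """Build add-one-smoothed word probabilities from a reference corpus."""
    counts = Counter(corpus.lower().split())
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # the extra slot reserves mass for unseen words
    return lambda word: (counts[word] + 1) / (total + vocab_size)

def perplexity(text: str, prob) -> float:
    """exp of the average negative log-probability per word."""
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    avg_neg_log = -sum(math.log(prob(w)) for w in words) / len(words)
    return math.exp(avg_neg_log)

prob = unigram_model("the cat sat on the mat the dog sat on the rug")
print(perplexity("the cat sat on the mat", prob))                  # lower
print(perplexity("purple zebras juggle quantum marmalade", prob))  # higher
```

Text made of words the model has seen often scores low; text full of words it has never seen scores high.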

The Rhythm of Writing: Burstiness

Next up is burstiness, which is all about the rhythm and variety of your sentences. Think about how you talk or write an email. You probably mix short, punchy statements with longer, more descriptive sentences that weave together complex ideas.

This creates a dynamic, varied rhythm—a “bursty” pattern of sentence lengths and structures. AI models, at least for now, really struggle with this. They tend to generate text with a monotonous, almost robotic uniformity, where every sentence is roughly the same length and complexity. This lack of natural variation is another major red flag for detection tools.
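There is no single agreed formula for burstiness. One simple proxy, assumed here for illustration, is the coefficient of variation of sentence lengths: the standard deviation divided by the mean. The sentence splitting is a rough heuristic.

```python
import re
from statistics import mean, pstdev

def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths, measured in words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if not lengths:
        return 0.0
    return pstdev(lengths) / mean(lengths)

uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = ("Stop. I never expected the old lighthouse keeper to answer "
          "the door at midnight. Why?")
print(burstiness(uniform), burstiness(varied))
```

A value near zero means every sentence is about the same length; larger values mean a more varied rhythm.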

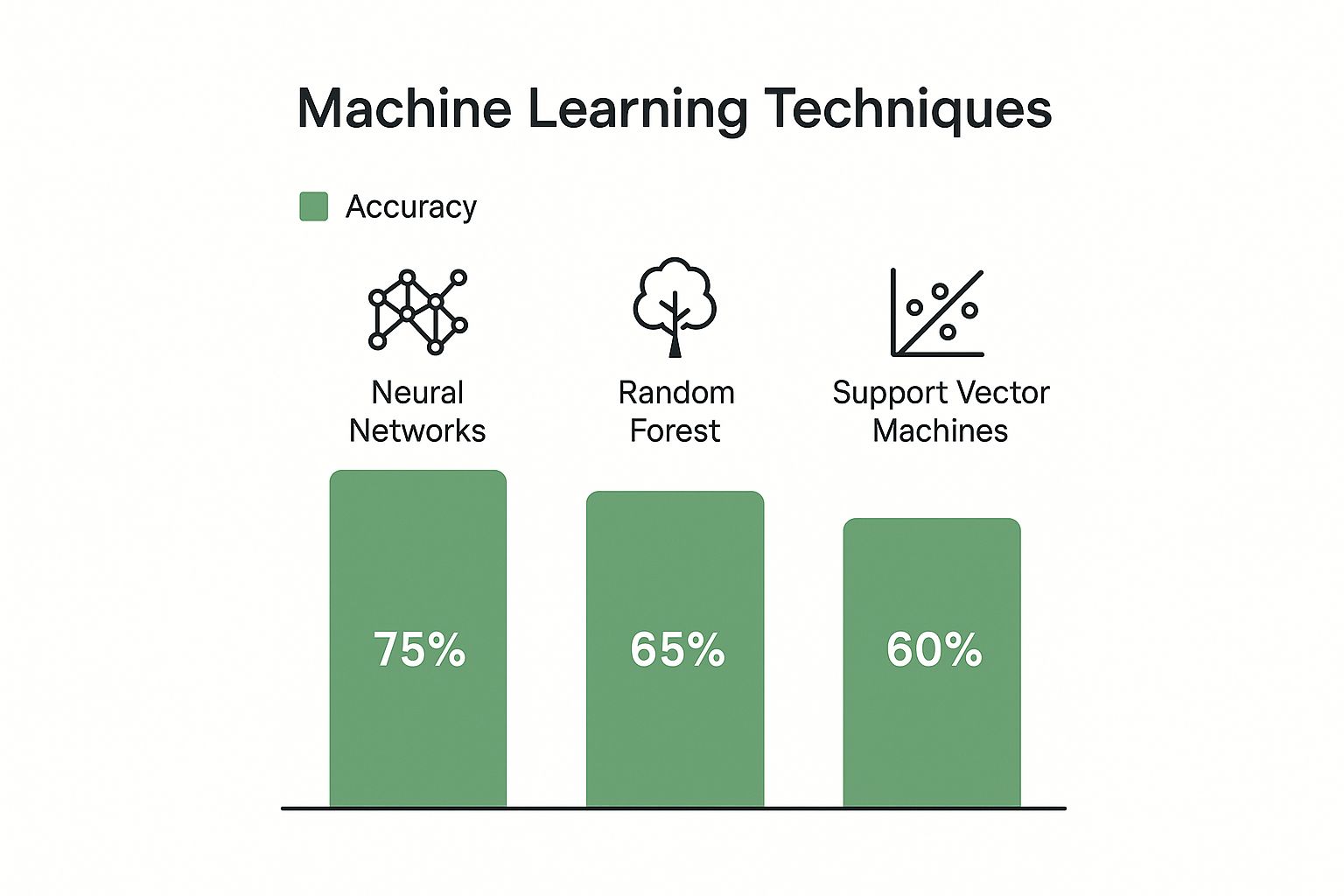

These aren't just abstract concepts; they are measurable signals. AI detectors use different machine learning models to spot these complex patterns, with some being better at it than others.

As you can see, neural networks are particularly effective at this, hitting around 75% effectiveness. By analyzing signals like perplexity and burstiness together, a detector builds its case, ultimately calculating a probability score that shows how closely the text matches the known fingerprints of AI.

Human vs AI Writing Signals

To put it all together, these detectors are essentially running a comparison against a checklist of known human and AI writing traits. Here’s a simplified look at what they're weighing.

| Linguistic Signal | Typical Human Writing | Typical AI Writing |
|---|---|---|
| Sentence Length | Varied and dynamic; a mix of short and long sentences. | Often uniform; sentences tend to have similar lengths. |
| Word Choice | Uses unique, specific, or sometimes quirky vocabulary. | Leans on common, safe, and frequently used words. |
| Perplexity | High; less predictable due to creative or unusual phrasing. | Low; highly predictable because it chooses the most probable words. |
| Burstiness | High; natural rhythm with bursts of complex and simple sentences. | Low; smooth and consistent, lacking natural peaks and valleys. |
| Tone | Often includes personality, opinion, or subtle emotion. | Tends to be neutral, objective, and overly formal. |
| Errors | May contain typos, occasional grammar mistakes, or idioms. | Usually grammatically perfect and free of errors. |

Ultimately, no single signal is a dead giveaway. But when several of these AI-like patterns appear together, it creates a strong statistical case that the text wasn’t written by a person.

The Tech Behind Modern AI Detection

To really get what makes an AI detector tick, you have to look past simple word choice and dive into the serious tech running under the hood. It’s a fascinating cat-and-mouse game, where modern detectors often use the same kind of deep learning that powers the AI writers they’re trying to catch. This is where things get interesting.

These tools have come a long way from the old, rule-based systems of the past. Over time, detectors evolved to use sophisticated deep learning, leaning on neural networks and transformer architectures. In fact, modern detectors are built on models inspired by the same transformer tech that underpins the GPT series, which is what lets them spot complex patterns that simpler analysis would just completely miss.

As of early 2025, these tools don't just give a "yes" or "no" answer. They provide a probability score, showing you the statistical likelihood that a piece of text was AI-generated. For more on this evolution, see autogpt.net.

From Words to Numbers with Embeddings

So, how does a machine actually “read” and make sense of text? The secret lies in word embeddings.

Think of embeddings as a way to translate words into a highly detailed numerical map. Each word gets converted into a list of numbers—a vector—that captures its meaning, its context, and how it relates to all the other words around it. It's pretty clever.

For example, on this map, the words "king" and "queen" would be plotted very close together. Same for "walking" and "running." This numerical approach allows the detector to analyze the very fabric of the text, looking not just at what is being said, but how it's being said. It's a much deeper level of analysis than just counting words.
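"Close together" is usually measured with cosine similarity, which compares the direction of two vectors. The three-dimensional vectors below are invented for illustration; real embeddings are learned from data and typically have hundreds of dimensions.

```python
import math

# Toy vectors, made up for this example (not real embeddings).
EMBEDDINGS = {
    "king":    [0.90, 0.80, 0.10],
    "queen":   [0.88, 0.82, 0.15],
    "walking": [0.10, 0.20, 0.90],
    "running": [0.12, 0.25, 0.95],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["walking"]))
```

The first pair scores much higher than the second, which is how the "map" encodes that two words are related.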

The Power of Transformer Models

Many of today's best AI detectors rely on transformer models. This is a type of neural network architecture that is exceptionally good at understanding context in sequential data, which is exactly what text is.

Below is a simplified look at how a transformer model is structured. This architecture is central to both creating and detecting sophisticated AI writing.

This diagram shows how a transformer pushes inputs through layers of “attention” mechanisms. This lets it weigh the importance of different words when analyzing a sentence, giving it a powerful sense of context. As AI continues to reshape how we create content, understanding related concepts like content automation that leverages AI helps paint a fuller picture of the landscape.
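The attention step can be sketched in a few lines. This is a bare-bones version of scaled dot-product attention, written in plain Python with toy two-dimensional token vectors that are assumptions for the example. A real transformer adds learned projections, multiple heads, and many stacked layers.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token "looks at" every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy token vectors
outputs, weights = attention(tokens, tokens, tokens)
print(weights[0])
```

Each row of `weights` shows how much one token draws on every other token, which is the "weighing the importance of different words" described above.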

This complex analysis is precisely why detectors give you a probability score (like "98% AI") instead of a flat "yes" or "no." That score reflects a deep and intricate mathematical process happening behind the scenes, where the model weighs dozens of signals to make its most educated guess.

By using these advanced techniques, detectors can often spot the subtle, almost invisible fingerprints of AI writing that might easily fool a human reader. This technical depth is absolutely essential for keeping pace with generative AI that gets better by the day.

If you're interested in the foundational principles behind all this, check out our complete guide on how AI detectors work.

Where AI Detectors Falter and Why 100% Accuracy Is a Myth

While the technology behind AI detectors is impressive, let’s be direct: no AI detector is perfect. The promise of 100% accuracy is a myth, and understanding their limits is the only way to use these tools responsibly. The reality is a world of frustrating errors that can have real consequences.

These tools make two main types of mistakes:

- False Positives: This is when a detector incorrectly flags human-written content as being generated by AI. It’s the digital equivalent of an innocent person being picked out of a lineup.

- False Negatives: This happens when AI-generated text slips right past a detector, completely fooling it.

Both errors undermine the very purpose of detection. False positives can lead to unfair accusations, while false negatives allow a flood of low-quality AI content to go unchecked. The reasons for these mistakes are tied to the breakneck speed of AI development itself.
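Both error types can be measured once you have a set of texts whose true origin is known. The sketch below computes the false positive rate and false negative rate; the function name `error_rates` and the sample labels are hypothetical.

```python
def error_rates(labels, predictions):
    """Return false positive and false negative rates for a detector.

    Both lists use True for "AI-generated" and False for "human".
    """
    pairs = list(zip(labels, predictions))
    false_pos = sum(1 for actual, flagged in pairs if not actual and flagged)
    false_neg = sum(1 for actual, flagged in pairs if actual and not flagged)
    humans = sum(1 for actual in labels if not actual)
    ais = sum(1 for actual in labels if actual)
    return {
        # Share of human texts wrongly flagged as AI.
        "false_positive_rate": false_pos / humans if humans else 0.0,
        # Share of AI texts that slipped through as human.
        "false_negative_rate": false_neg / ais if ais else 0.0,
    }

# Made-up results: four human texts followed by four AI texts.
labels = [False, False, False, False, True, True, True, True]
predictions = [False, False, False, True, True, True, False, False]
print(error_rates(labels, predictions))
```

Reporting the two rates separately matters because lowering one usually raises the other.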

The Constant Arms Race

The biggest reason detectors struggle is that they are locked in a relentless arms race with the very AI models they’re built to catch. Generative AI is advancing at a dizzying pace, with each new version producing text that’s more nuanced, creative, and human-like than the last.

As soon as detectors are trained to spot the "fingerprints" of one AI model, a more advanced one is released with entirely new tells. This puts detectors in a constant state of playing catch-up, making absolute certainty an impossible target.

This dynamic explains why a detector can seem to work perfectly one day and feel completely outdated the next.

Nuance and Hybrid Content

Another massive hurdle is how people actually write and edit today. It’s not always a clean split between human and machine. AI detectors are often tripped up by a few key scenarios that blur the lines.

- Hybrid Content: What happens when a human takes an AI-generated draft and heavily edits it? The final text becomes a blend of both styles. This "hybrid" content can easily fool a detector because the human touch adds the very creativity and unpredictability the tool was looking for. If you want to see how this works, our guide on how to bypass AI detection offers more context.

- Non-Native Writing: Detectors are typically trained on enormous datasets of text written by native English speakers. This can bake in a serious bias. The writing patterns of non-native speakers, which might be more formal or structured, are often mistakenly flagged as AI-generated as a result.

- Advanced AI Models: The best AIs can now mimic human error, adopt specific personas, and write with a level of creativity that was once a clear giveaway of human authorship.

At the end of the day, these tools provide a probability, not a verdict. They offer a statistical guess based on learned patterns, which is why their results should always be treated as a starting point for investigation, not a final judgment.

The Real-World Impact and Ethics of AI Detection

Beyond all the technical jargon, it's worth asking a simple question: why does any of this matter? The answer is that these tools are no longer just fascinating experiments. They're being used right now to make decisions that have real consequences for real people.

In classrooms, they’re used to catch plagiarism and uphold academic integrity. For publishers and anyone working in SEO, they’ve become the first line of defense against the flood of cheap, automated content created to fool search engines or push a narrative.

But with that power comes a serious weight of responsibility.

Navigating the Ethical Gray Area

The cost of a mistake can be huge. A false positive—where a detector wrongly flags human writing as AI-generated—could lead to a student being accused of cheating or a professional writer being unfairly penalized. That risk creates a massive ethical headache for any organization that puts too much faith in an automated score.

There’s also the growing problem of algorithmic bias. Many detectors are trained on very specific types of writing, which means they can inadvertently penalize styles that don't fit the mold. For example, the formal, structured English often used by non-native speakers can sometimes look a lot like AI-generated text to a machine, leading to unfair classifications.

At its core, an AI detector gives you a probability, not proof. A high AI score just means the text shares patterns with known AI writing. It’s not a guilty verdict.

This brings us to the big debate about where to draw the line. When does a helpful AI assistant become a tool for academic dishonesty? And how do we make sure these detectors support human work instead of punishing it?

These tools offer powerful data, but that data needs human judgment to be useful. It requires a clear-eyed understanding of what they can and can't do. For anyone looking to use AI as a starting point, learning how to humanize AI text has become an essential skill for navigating this new reality.

Got Questions About AI Detectors? We've Got Answers.

As AI detection tools pop up in more classrooms and offices, a lot of questions are coming up with them. It makes sense. When a tool has this much influence, you want to know how it actually works. Let’s break down some of the most common questions people have.

How Do AI Detectors Actually Work?

At their core, AI content detectors are pattern-matching machines. They use machine learning to scan a piece of text, looking for the kinds of statistical fingerprints that AI models tend to leave behind.

Think of it this way: the detector is trained on enormous datasets of human and AI-generated text. This training teaches it to recognize common AI habits, like predictable sentence structures, a lack of personal voice, and certain word choices. When you feed it a document, it analyzes those characteristics and spits out a probability score based on what it finds.

Why Do These Tools Sometimes Flag Human Writing?

This is probably the biggest headache with AI detectors, and it’s known as a false positive. It happens because the tools aren't truly understanding the text; they're just checking for statistical patterns.

If your own writing is very formal, follows a rigid structure, or just doesn't have a strong personal flair, it can accidentally mimic the very patterns the detector is built to flag. It's a machine looking for machine-like traits, and sometimes we humans write a bit like machines.

Writers who learned English as a second language can also get flagged more often, simply because their sentence structures might not match the typical patterns found in the detector's training data. This is a huge reason why a high AI score should never be taken as proof.

A high AI score doesn't mean a machine wrote it. It just means the text shares qualities with common AI writing. Human judgment is still the most important part of the equation.

So, Are AI Detectors 100% Accurate?

Nope. Not even close. No AI detector is 100% accurate. They provide a probability, not a definite verdict. Think of them as a guide, not a judge.

Their reliability depends on a few things:

- How long is the text? Short snippets are notoriously difficult to analyze correctly. There just isn't enough data for the tool to make a confident call.

- Which AI model wrote it? The latest AI models produce text that's much more human-like, making it a lot harder for detectors to spot.

- Was it edited by a human? A little bit of human editing on an AI-generated draft can easily fool most detectors.

For all these reasons, you should treat these tools as one data point among many, not as the final word.
