How Do AI Detectors Work and Are They Accurate?

July 28, 2025

So, how do AI detectors actually work? At their core, they’re specialized systems trained to spot the statistical "tells" and non-human patterns in writing. Think of them as digital forensic experts examining a document not for fingerprints, but for linguistic giveaways that signal an AI's involvement.

Unmasking the Digital Ghost in the Machine

AI detectors function a bit like a counterfeit bill detector, which spots tiny inconsistencies invisible to the naked eye. Instead of checking for watermarks or security threads, these tools scrutinize text for tells that humans don't naturally produce. They’re built to answer one critical question: does this text have the statistical fingerprint of a machine?

The process boils down to two main approaches: good old-fashioned statistical analysis and more advanced machine learning classifiers. A third technique, watermarking, works differently by planting a signal at the moment the text is generated. None of these systems "read" for meaning in the human sense. They analyze mathematical patterns.

To make this clearer, here’s a quick rundown of the most common techniques these tools use to sniff out AI-generated content.

Core AI Detection Methods at a Glance

| Detection Method | What It Looks For | Simple Analogy |
|---|---|---|
| Statistical Analysis | Predictable sentence length, low word variety, and lack of randomness. | It's like listening to a song where every beat is perfectly timed and has the exact same volume. It’s too perfect to be human. |
| ML Classifiers | Deep, subtle patterns learned from millions of human vs. AI text examples. | Like an art expert who has seen so many forgeries they can spot one instantly, even if they can't explain every single reason why. |
| Watermarking | A hidden, invisible signal or pattern embedded directly into the text by the AI model. | Think of it like a secret code woven into the fabric of the text itself, only visible to a special decoder. |

Now, let's dig into what those first two methods really mean.

The Statistical Investigation

The first layer of detection involves looking at linguistic metrics that are surprisingly hard for AI to replicate authentically. Human writing has a certain rhythm and chaos to it—a mix of long, flowing sentences and short, punchy ones. This natural variation is called "burstiness."

In contrast, AI-generated text often has unnaturally consistent sentence length and structure. This creates a monotonous, almost robotic cadence that detectors are built to flag.

Another key metric is "perplexity," which measures how predictable a piece of text is to a language model. Human language is full of surprises, weird idioms, and unexpected word choices. AI models, on the other hand, are trained to predict the most statistically probable next word, and their usual settings favor those likely choices. As a result, they often produce highly predictable text that lacks our creative randomness.

A high perplexity score suggests human authorship, full of twists and turns. A low perplexity score often points to the predictable, straight road of an AI model.
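If you want the math behind the metric, here is the standard textbook definition of perplexity. It assumes a scoring language model $p$ that assigns a probability to each word (token) $w_i$ given the words before it. Which model a particular detector uses, and whether it applies exactly this formula, is not something this article specifies.

```latex
\mathrm{PPL}(w_1,\dots,w_N) = \exp\!\left(-\frac{1}{N}\sum_{i=1}^{N}\log p\!\left(w_i \mid w_1,\dots,w_{i-1}\right)\right)
```

In words: average the log-probabilities of the words that actually appear, flip the sign, and exponentiate. If the model found every word easy to guess, each log-probability is close to zero and perplexity approaches its minimum of 1.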

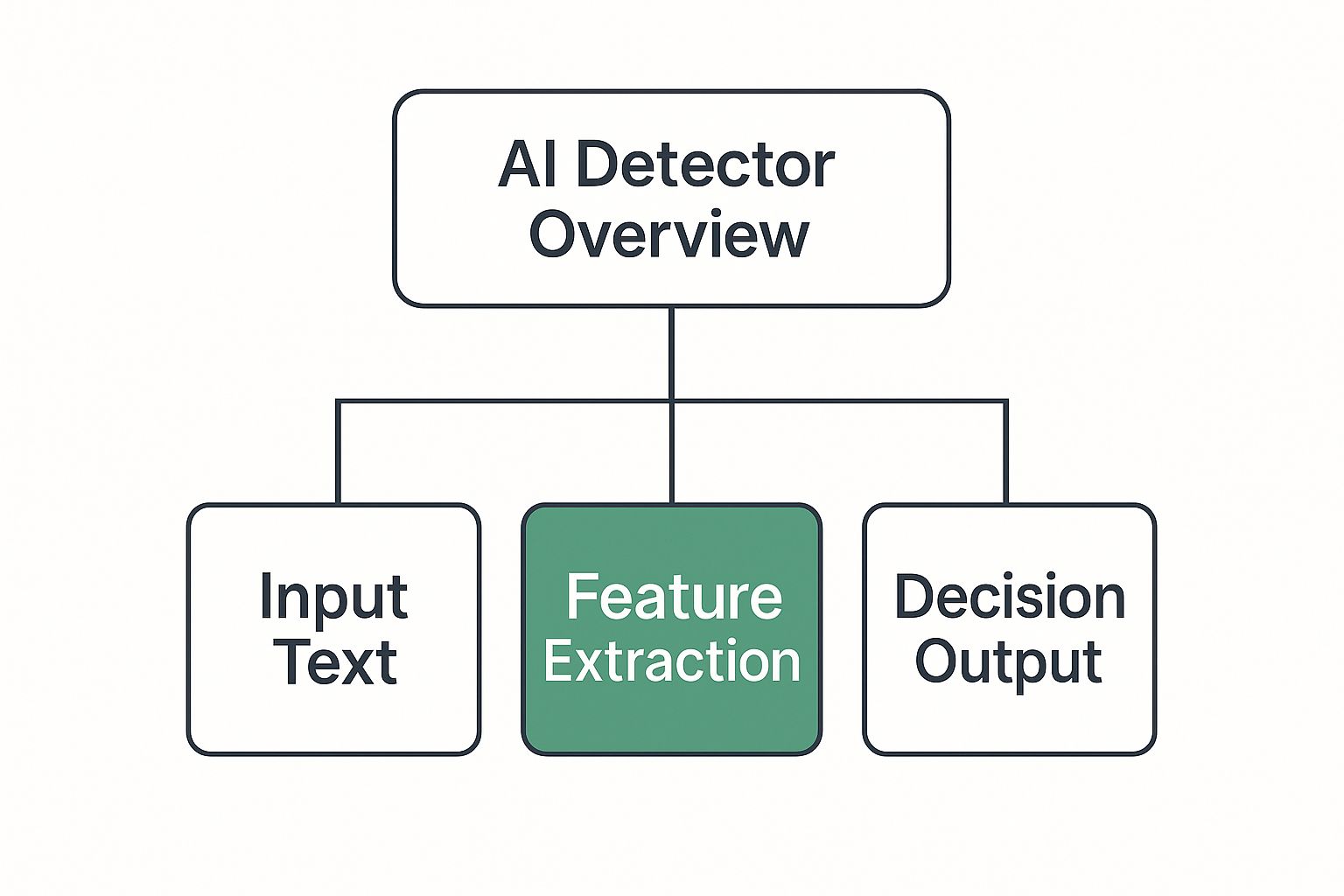

The image above gives a simple visual of how these systems break down text to analyze it.

As you can see, the raw text is broken down into analyzable features before a final judgment is made.

The Rise of AI-Powered Classifiers

The second, more powerful method uses AI to catch AI. These are machine learning classifiers, and they're trained on massive datasets containing millions of examples of both human and AI-generated writing. Over time, the model learns the subtle, deep patterns that distinguish one from the other.

Because these detectors work by identifying patterns unique to machine-generated content, exploring LLM monitoring tools can give you a peek under the hood at the very text characteristics these detectors are built to find.

The technology has improved dramatically. One recent study showed that top tools can now detect GPT-4o content with over 85% accuracy. That is a huge leap from just a couple of years ago, when those rates struggled to even pass 20%.

For a deeper understanding of this cat-and-mouse game between AI writers and AI detectors, you might be interested in our guide on how to detect AI writing effectively.

Spotting the Digital Fingerprints in AI Writing

To really get how AI detectors work, you have to look for the statistical clues they’re trained to find. Think of them as digital detectives, searching for the fingerprints that large language models (LLMs) almost always leave behind.

Two of the most important clues they hunt for are perplexity and burstiness.

Perplexity vs. Burstiness

Let's start with perplexity. You can think of it as a measure of how predictable a text is. Human writing is often delightfully messy and unpredictable. We use weird idioms, make unexpected connections, and choose words that can surprise a reader.

AI models, on the other hand, are built on probability. Their whole job is to pick the most likely next word, which makes their writing statistically very predictable. An AI detector sees this low perplexity score as a major red flag because it’s missing the creative chaos of real human expression.
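To make that concrete, here's a minimal Python sketch. Real detectors score text with a large language model. This example swaps that model for a toy word-frequency (unigram) model built from a small reference text. The function name, the reference sentence, and the add-one smoothing choice are illustrative assumptions, not any tool's actual method.

```python
import math
from collections import Counter


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a toy add-one-smoothed unigram model.

    Real detectors use a large language model's next-word probabilities;
    word frequencies from `reference` are only a stand-in here.
    """
    ref_tokens = reference.lower().split()
    counts = Counter(ref_tokens)
    # Add-one smoothing: each known word gets count + 1, and all unseen
    # words share a single extra "<unk>" slot, hence the final + 1.
    denom = len(ref_tokens) + len(counts) + 1
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one word")
    avg_log_prob = sum(math.log((counts[t] + 1) / denom) for t in tokens) / len(tokens)
    return math.exp(-avg_log_prob)


reference = "the cat sat on the mat the dog sat on the rug"
print(unigram_perplexity("the cat sat on the mat", reference))                # familiar words
print(unigram_perplexity("purple quasars hum beneath tangerine", reference))  # surprising words
```

The sentence built from familiar words scores a much lower perplexity than the one full of words the model has never seen. That is the same direction a detector reads as "predictable" versus "surprising."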

Now, what about burstiness? This is all about the rhythm and flow of the writing. A human writer naturally mixes things up, varying their sentence structure to create a dynamic pace.

Think about a good conversation. It has short, punchy statements and longer, more descriptive thoughts. That natural variation gives it a high burstiness. AI-generated text often lacks this. It tends to spit out sentences of a similar length and structure, creating a monotonous, uniform flow. This low burstiness is another key fingerprint detectors are designed to spot.
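One common way to put a number on burstiness is the coefficient of variation of sentence lengths: the standard deviation divided by the mean. The sketch below assumes that definition and a naive sentence split on `.`, `!` and `?`. Real detectors may measure rhythm quite differently.

```python
import re
import statistics


def burstiness(text: str) -> float:
    """Coefficient of variation (stdev / mean) of sentence lengths in words.

    Higher values mean a more varied, "bursty" rhythm; 0.0 means every
    sentence has the same length.
    """
    # Naive sentence split on runs of ., ! or ?; drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "Stop. The storm rolled in across the valley before anyone noticed it coming. Then silence."
print(burstiness(uniform))  # identical sentence lengths
print(burstiness(varied))   # a 1-word, a 12-word and a 2-word sentence
```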

In short, perplexity measures what is said (the predictability of words), while burstiness measures how it's said (the rhythm of sentences). Both are critical clues for telling a human from a machine.

When a detector analyzes a piece of text, it puts these two factors together. A piece that’s highly predictable (low perplexity) and has a flat, uniform rhythm (low burstiness) is a strong signal of AI-generated content.
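Here's a deliberately simple sketch of that combination as a rule of thumb. The two cut-off values are hypothetical placeholders chosen for illustration. Production detectors learn or calibrate their decision boundaries from data rather than hard-coding two thresholds.

```python
from dataclasses import dataclass

# Hypothetical cut-offs for illustration only; real tools calibrate on data.
PERPLEXITY_CUTOFF = 30.0
BURSTINESS_CUTOFF = 0.35


@dataclass
class TextSignals:
    perplexity: float  # lower = more predictable word choices
    burstiness: float  # lower = more uniform sentence rhythm


def rough_verdict(signals: TextSignals) -> str:
    predictable = signals.perplexity < PERPLEXITY_CUTOFF
    uniform = signals.burstiness < BURSTINESS_CUTOFF
    if predictable and uniform:
        return "likely AI"      # both fingerprints present
    if predictable or uniform:
        return "inconclusive"   # only one fingerprint present
    return "likely human"


print(rough_verdict(TextSignals(perplexity=12.0, burstiness=0.1)))
print(rough_verdict(TextSignals(perplexity=85.0, burstiness=0.9)))
```

The middle "inconclusive" outcome is the important design choice here. One weak signal on its own is exactly the kind of evidence that produces false positives on formal human writing.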

Other Telltale Linguistic Clues

Beyond those two core metrics, detectors scan for other giveaways. These patterns are more subtle, but when they appear together, they paint a pretty clear picture. It’s like a detective collecting multiple pieces of evidence at a crime scene.

Here are a few common patterns that raise suspicion:

- Repetitive Sentence Starters: AI models often get stuck in a rut, starting sentences with the same transition words over and over (e.g., "In addition," "Furthermore," "It is important to note that...").

- Overly Safe Vocabulary: To avoid making mistakes, AIs tend to stick to a bland, generic vocabulary. They shy away from the niche terms or colorful slang a human expert might use.

- Flawless but Soulless Grammar: AI text is usually grammatically perfect, but it often lacks a distinct voice. The writing feels sterile, missing the personality, tone, and even the intentional imperfections that make human writing feel real.

When you see these elements combined—say, a text that leans on common transition words, uses a simple vocabulary, and has a perfectly even sentence structure—you’re looking at a prime candidate for AI detection.
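Here is a minimal sketch of how those three clues could be turned into numbers. It measures the share of sentences that open with a stock transition, how often the single most common opening word repeats, and the type-token ratio (unique words divided by total words) as a rough proxy for vocabulary variety. The `STOCK_OPENERS` list and the function name are hypothetical. Real tools learn such cues from data rather than from a hand-written list.

```python
import re
from collections import Counter

# Hypothetical list of stock transitions, for illustration only.
STOCK_OPENERS = ("in addition", "furthermore", "moreover", "it is important to note")


def linguistic_clues(text: str) -> dict:
    # Split into sentences, then into lowercase word tokens per sentence.
    sentences = [s.strip().lower() for s in re.split(r"[.!?]+", text) if s.strip()]
    tokenized = [re.findall(r"[a-z']+", s) for s in sentences]
    tokenized = [t for t in tokenized if t]  # drop fragments with no words
    if not tokenized:
        raise ValueError("text contains no words")
    n = len(tokenized)
    words = [w for sent in tokenized for w in sent]
    openers = Counter(sent[0] for sent in tokenized)
    stock = sum(1 for sent in tokenized if " ".join(sent).startswith(STOCK_OPENERS))
    return {
        "stock_opener_share": stock / n,
        "top_opener_share": openers.most_common(1)[0][1] / n,
        "type_token_ratio": len(set(words)) / len(words),
    }


print(linguistic_clues("Furthermore, the tool is fast. In addition, the tool is cheap. Moreover, the tool is simple."))
print(linguistic_clues("Honestly, I loved it. The battery died twice though! My kid still calls it the toaster."))
```

The first sample opens every sentence with a stock transition and reuses "the tool is" three times, so it scores high on openers and lower on vocabulary variety. No single number is decisive; they only become meaningful when several point the same way.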

Ultimately, these tools aren't trying to understand what the text means. They’re just deconstructing its mathematical and structural properties. Every piece of writing has these underlying characteristics, and the secret to how AI detectors work is their ability to see and interpret these subtle, yet revealing, digital fingerprints.

Using AI to Catch AI with Classifiers

While statistical quirks can give us some good clues, the real heavy lifting in AI detection comes from fighting fire with fire. To reliably spot AI-generated content, we need to use AI to catch AI. This is where machine learning classifiers enter the picture, and they represent a huge leap in how these tools actually work.

Think of a classifier as a highly trained K9 unit. But instead of sniffing for contraband, this digital bloodhound is trained to pick up on the subtle, almost imperceptible scent of machine-generated text. It’s the training that makes all the difference.

Developers feed these classifier models massive datasets filled with millions of text samples. Half of the data is purely human-written—full of all the weirdness, creativity, and occasional mistakes that make us human. The other half comes from a whole range of AI models. By sifting through this enormous library, the classifier learns the deep structural patterns, contextual tics, and other artifacts that separate human writing from machine output.
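The core idea of learning from labeled examples fits in a few dozen lines. The sketch below uses multinomial naive Bayes, a far simpler technique than the deep neural classifiers described here, swapped in only to show the train-then-predict loop. The class name, the labels, and the four training sentences are all hypothetical. A real detector would train on millions of samples, not four.

```python
import math
import re
from collections import Counter


class NaiveBayesDetector:
    """Toy multinomial naive Bayes over word counts, labels 'human' and 'ai'."""

    LABELS = ("human", "ai")

    def __init__(self):
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()

    @staticmethod
    def tokens(text: str) -> list:
        return re.findall(r"[a-z']+", text.lower())

    def train(self, examples):
        """examples: iterable of (text, label) pairs."""
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(self.tokens(text))

    def log_score(self, text: str, label: str) -> float:
        vocab = set(self.word_counts["human"]) | set(self.word_counts["ai"])
        denom = sum(self.word_counts[label].values()) + len(vocab) + 1
        # Start from the log prior: how common this label was in training.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for tok in self.tokens(text):
            # Add-one smoothing so unseen words don't zero out the score.
            score += math.log((self.word_counts[label][tok] + 1) / denom)
        return score

    def predict(self, text: str) -> str:
        return max(self.LABELS, key=lambda label: self.log_score(text, label))


# Hypothetical toy training data.
TRAINING = [
    ("furthermore it is important to note that the results are significant", "ai"),
    ("in addition it is important to note the key benefits", "ai"),
    ("lol my cat knocked the coffee over again this morning", "human"),
    ("honestly the bus was late and i nearly missed my flight", "human"),
]

detector = NaiveBayesDetector()
detector.train(TRAINING)
print(detector.predict("it is important to note the benefits"))
print(detector.predict("my cat was late this morning"))
```

A model like RoBERTa replaces these word counts with learned representations of context, but the workflow is the same: label examples, fit, then score new text.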

Beyond Just Counting Words

Simpler statistical methods might get hung up on surface-level stuff like sentence length or word repetition. Advanced classifiers, on the other hand, go much, much deeper. They’re built to analyze the complex relationships between words, the natural flow of ideas, and the underlying mathematical properties of the text itself.

Models like RoBERTa (A Robustly Optimized BERT Pretraining Approach) are a popular choice for this job. They don’t just count words; they model how each word relates to its surrounding context. This lets them spot patterns that are far too complex for a person or a simple script to notice.

For instance, a classifier can learn to recognize that a piece of text uses grammatically correct but contextually awkward phrasing. It can also spot when a text lacks a coherent, long-range narrative, which is a classic tell of an AI that’s just stringing together statistically probable sentences instead of weaving a genuine story.

Tools like Originality.ai surface this kind of analysis as a clear score based on these deep patterns.

The interface gives a straightforward percentage score and highlights the specific sentences that read as most AI-like. This kind of visual feedback is useful because it moves beyond a simple "yes" or "no" and shows you where the AI patterns are strongest.
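How any particular product computes its overall score isn't public here. One plausible way a sentence-level view could roll up into a document percentage is to weight each sentence's AI probability by its length and flag sentences above a cut-off. In this sketch, `toy_score` is a hypothetical stand-in for a real per-sentence classifier, and the 0.5 threshold is an assumption.

```python
import re
from typing import Callable


def highlight(text: str, sentence_score: Callable[[str], float], threshold: float = 0.5):
    """Return (document AI %, sentences whose score meets the threshold)."""
    # Naive split after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return 0.0, []
    scores = [sentence_score(s) for s in sentences]
    weights = [len(s.split()) for s in sentences]  # longer sentences count more
    doc_pct = 100 * sum(w * p for w, p in zip(weights, scores)) / sum(weights)
    flagged = [s for s, p in zip(sentences, scores) if p >= threshold]
    return round(doc_pct, 1), flagged


def toy_score(sentence: str) -> float:
    """Hypothetical stand-in for a trained per-sentence classifier."""
    return 0.9 if sentence.lower().startswith(("furthermore", "in addition")) else 0.1


print(highlight("Furthermore, the data is clear. I hated the ending, honestly.", toy_score))
```

Weighting by length is a design choice: it stops a single short, AI-looking sentence from dominating the overall percentage.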

The Never-Ending Detection Arms Race

The rise of these powerful classifiers has kicked off an ongoing "arms race" between AI content generators and AI detectors. Every time a new model like GPT-4o or Claude 3 gets better at sounding human, it makes the detector's job harder. In response, the detection models have to get smarter, too.

This constant back-and-forth is what pushes detection technology forward. Every time a more advanced AI writer is released, developers have to scramble to retrain their classifiers on its output just to keep up.

This is exactly why the best AI detectors are updated so frequently. Their entire effectiveness hinges on being able to recognize the unique "fingerprints" of the latest AI models out in the wild.

The cycle pretty much looks like this:

- A new, more natural-sounding AI writer hits the market.

- Detection accuracy takes a temporary hit because the old classifiers haven't seen these new patterns before.

- Developers get their hands on a ton of text generated by the new model.

- They retrain their classifiers on this new data, teaching the detector to spot the latest AI signatures.

- Accuracy bounces back, stronger than before, and the tool is ready for the next wave.

This loop isn't a bug; it's a feature. Modern AI detectors aren't static tools. They're dynamic systems that are constantly learning and adapting.

And that’s why classifiers are so much more effective against sophisticated AI writers. A human editor might get fooled by a polished piece of AI content, but a well-trained classifier can still pick up on the subtle mathematical patterns that give it away. It's this deep analytical power that makes them a must-have for anyone who needs to know if a text is the real deal.

Embedding Hidden Signals with Watermarking

So far, we’ve looked at methods that analyze text after it's been written, like digital detectives hunting for clues left behind. But what if we could build proof of origin directly into the content from the very start?

This proactive approach is called digital watermarking, and it completely changes the game.

Think of it like the subtle watermark on a banknote that proves it’s genuine. In the world of AI-generated text, this means the model itself is built to embed an invisible signature into everything it writes. It’s not a visible mark, but a specific, patterned way of choosing words that a special detector can spot later.

This gives us a powerful tool for proving where a piece of text came from. It moves us away from making educated guesses based on statistical weirdness and toward verifiable statistical evidence baked right into the text.

How Invisible Signatures Work

So how does an AI hide a signal in plain sight? It all comes down to gently nudging the probabilities of word choices during generation. Normally, a language model just tries to pick the most likely next word. A watermarked model, however, operates with a clever little twist.

Before it generates each word, the model uses a secret key (in one well-known published design, combined with the previous word) to quietly split its vocabulary into two buckets: a “green list” and a “red list.” It then steers its choices toward words from the green list a bit more often than it normally would. Over enough words, this creates a faint but statistically detectable pattern.

To you and me, this is completely invisible. The sentences read perfectly fine, and the word choices seem natural. But for a detector that has the same secret key, the slight overuse of "green list" words is a dead giveaway—a clear signal that the text was generated by a specific, watermarked AI.

What’s so smart about this method is that it doesn’t rely on clunky phrasing or weird, repetitive sentences. The signature is spread across the statistics of many word choices, so it can survive light touch-ups. Reliably removing it takes substantial rewriting, as the problems below show.
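Here is a toy sketch in the spirit of the publicly described green-list scheme from Kirchenbauer et al. (2023). The "language model" is just a uniform random choice over a made-up 1,000-word vocabulary. `VOCAB`, `GAMMA`, `SECRET_KEY`, and the function names are illustrative assumptions. Generation multiplies each green word's weight by e^delta. Detection recounts green words using the same key and reports a z-score: how many standard deviations the count sits above the `GAMMA` share you'd expect by chance.

```python
import hashlib
import math
import random

# Illustrative assumptions: a made-up vocabulary, green share, and key.
VOCAB = [f"w{i}" for i in range(1000)]
GAMMA = 0.25            # share of the vocabulary placed on the green list
SECRET_KEY = "demo-key"


def green_list(prev_token: str) -> set:
    """Split the vocabulary pseudo-randomly, seeded by the key + previous token."""
    digest = hashlib.sha256(f"{SECRET_KEY}:{prev_token}".encode()).hexdigest()
    rng = random.Random(int(digest, 16))
    return set(rng.sample(VOCAB, int(GAMMA * len(VOCAB))))


def generate(n_tokens: int, delta: float, seed: int = 0) -> list:
    """Toy generator: uniform over VOCAB, green words' weight scaled by e**delta."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(n_tokens):
        green = green_list(tokens[-1])
        weights = [math.exp(delta) if w in green else 1.0 for w in VOCAB]
        tokens.append(rng.choices(VOCAB, weights=weights, k=1)[0])
    return tokens[1:]  # drop the "<start>" marker


def z_score(tokens: list) -> float:
    """Standard deviations by which the green-word count exceeds chance."""
    hits, prev = 0, "<start>"
    for tok in tokens:
        if tok in green_list(prev):
            hits += 1
        prev = tok
    n = len(tokens)
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


print(round(z_score(generate(200, delta=2.0)), 1))  # watermarked
print(round(z_score(generate(200, delta=0.0)), 1))  # no watermark
```

With delta = 2, each generated word has roughly a 70% chance of being green instead of the 25% chance rate. The watermarked run therefore lands far above a detection threshold such as z = 4, while the unwatermarked run stays near zero. Without the key, a reader can't tell which words are green.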

The Potential and the Problems

The potential here is huge. Watermarking could be the key to fighting misinformation, allowing platforms to instantly verify the source of viral content. For publishers and creators, it offers a concrete way to identify and manage AI-generated submissions.

But this approach isn’t a silver bullet. For one, it depends on industry-wide adoption. To be truly effective, all the major developers of large language models would need to agree to build this feature into their systems. If they don’t cooperate, it just remains a niche solution.

Another big hurdle is how fragile the watermark can be when faced with simple edits.

- Heavy Paraphrasing: If someone takes the watermarked text and rephrases it heavily, they could disrupt the statistical pattern enough to completely erase the signal.

- Translation: Translating the content to another language and back would almost certainly destroy the watermark.

- Simple Edits: Even just swapping out a few key words could degrade the signature, making it much harder to detect.
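A back-of-the-envelope model shows why edits erode the signal. It assumes a green-list scheme in which each word's list is seeded by the word before it, as in the Kirchenbauer et al. (2023) design. Each swapped word is then green only by chance, and so is the word right after it, because that word's green list was derived from the word that got replaced. The function name and the 70% green rate are hypothetical, and the approximation ignores adjacent edits.

```python
import math


def expected_z(n_tokens: int, p_green: float, gamma: float, edit_fraction: float) -> float:
    """Approximate expected watermark z-score after randomly swapping words.

    Simplifying assumptions: swaps are spread out (no two adjacent); each
    swapped word and the word after it fall back to the chance rate gamma.
    """
    affected = min(1.0, 2 * edit_fraction)
    green_share = (1 - affected) * p_green + affected * gamma
    return (green_share - gamma) * math.sqrt(n_tokens) / math.sqrt(gamma * (1 - gamma))


for frac in (0.0, 0.1, 0.2, 0.4):
    print(frac, round(expected_z(200, p_green=0.7, gamma=0.25, edit_fraction=frac), 1))
```

For a 200-word passage, the expected z-score falls from about 14.7 with no edits to about 2.9 when 40% of words are swapped. That is below a typical detection threshold, even though most of the original text survives.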

Ultimately, watermarking gives us a fascinating glimpse into the future of AI detection. It shifts the focus from reactive analysis to proactive proof. It could become one of the most dependable methods for content authentication we have, if we can solve the logistical and technical challenges above.

Why AI Detection Matters in the Real World

It’s one thing to understand the tech behind AI detectors, but their true worth snaps into focus when you see them at work in the real world. This isn’t some niche academic exercise. AI detection is quickly becoming a vital tool for anyone trying to maintain trust and authenticity online.

From college classrooms to company blogs, these tools are the new front line for originality. They’re essential for anyone who needs to know if the words they’re reading were written with genuine human thought and intention.

Protecting Academic Integrity

In schools and universities, the stakes are sky-high. The explosion of powerful AI writers has thrown a wrench into the long-held principles of academic honesty, and AI detectors are now the first line of defense.

Educators are using these tools to check everything from essays to research papers, making sure the work reflects a student's own thinking. It’s not about playing "gotcha." It's about protecting the value of education itself and ensuring degrees are earned, not generated.

The AI detection market is surging, mostly because of these academic pressures. Forecasts predict it will top $1 billion by 2028. Turnitin, a major player in academia, found that 11% of the 200 million papers it scanned in a year (roughly 22 million papers) had at least 20% AI-written text. That stat alone shows the scale of the problem and helps explain why 68% of high school teachers are now using these tools.

Safeguarding Digital Content and SEO

For publishers, marketers, and SEO pros, original content is everything. It’s what builds an audience, earns trust, and gets you noticed by search engines. Unchecked AI-generated content puts that entire model at risk.

A marketing agency, for example, can use an AI detector to make sure the freelance writers they hire are delivering human creativity, not just a polished-up AI script. In the same way, publishers can screen submissions to protect their brand and sidestep search engine penalties for low-value content. A solid AI content strategy now has to account for what these detectors are looking for.

Combating Digital Deception

Beyond content quality, AI detection is becoming a key player in cybersecurity. Scammers are already using AI to write more convincing phishing emails, churn out fake product reviews, and spread misinformation with articles that sound frighteningly real.

AI detectors act as a digital filter in these scenarios. They help security systems flag AI-crafted phishing attacks that might otherwise slip past an employee. E-commerce sites can use them to weed out bogus reviews that mislead customers and poison brand trust. A newsroom might even use a detector to add another layer of scrutiny when vetting an anonymous tip, helping in the fight against disinformation.

Of course, the goal isn't always to just catch AI text. For those who use AI as a writing partner, it's about refinement. There are great tools available to help you humanize AI text and infuse your own unique voice into the draft.

The Limits of AI Detectors and False Positives


While the technology behind AI detection is impressive, no tool is perfect. Understanding how these detectors work also means being honest about their limits. It’s critical to see them as powerful assistants, not as infallible judges—especially when a wrong call can have serious consequences.

One of the biggest headaches is the false positive. This is when perfectly human-written text gets mistakenly flagged as AI-generated. This is a real concern for anyone whose writing style is naturally very structured or formal, as those patterns can sometimes mimic a machine.

For instance, non-native English speakers often learn the language through rigid rules and textbook examples. This can lead to writing that lacks the messy, creative "burstiness" an AI detector expects from a human. The same goes for technical or scientific writing, where clarity and precision are valued far more than stylistic flair, sometimes triggering a false alarm.

The Problem of Inaccuracy

On the flip side, you have the false negative, where AI-generated content slips right past the guards, completely undetected. This happens all the time, especially when AI text is heavily edited or “humanized” by a sharp writer. By simply changing up sentence structures, tossing in personal stories, and breaking that uniform robotic rhythm, you can often scrub away the most obvious digital fingerprints.

This puts us in a tricky spot. Even with sophisticated tools, there’s always a margin of error. Some of the most advanced AI detectors on the market claim accuracy rates approaching 99%, but even they aren't foolproof. A skilled user can almost always find a way to rework AI text to get past automated checks, which is why human review is still the gold standard for reliability.

This is exactly why a detector's score should never be taken as the final word.

An AI detection score is a probability, not a verdict. It’s a valuable data point that signals the need for a closer look, but it should always be paired with human judgment and context.
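A high accuracy figure also doesn't mean a flagged text is that likely to be AI. How often a flag is right depends on how common AI text is in the pile being checked. The sketch below applies Bayes' rule with hypothetical numbers. Note that "accuracy" as marketed may not map neatly onto the sensitivity and specificity used here.

```python
def flag_precision(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Bayes' rule: probability that a flagged text really is AI-generated.

    prevalence  -- share of submitted texts that actually are AI-written
    sensitivity -- share of AI texts the detector flags (true positive rate)
    specificity -- share of human texts it correctly clears (true negative rate)
    """
    true_flags = prevalence * sensitivity
    false_flags = (1 - prevalence) * (1 - specificity)
    return true_flags / (true_flags + false_flags)


# Hypothetical detector that is 99% sensitive and 99% specific.
for prevalence in (0.10, 0.02):
    print(prevalence, round(flag_precision(prevalence, 0.99, 0.99), 3))
```

With these assumed numbers, about 92% of flags are correct when 10% of essays are AI-written, but only about 67% when just 2% are. In that second case, roughly one flagged essay in three was written by a human.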

Relying only on a percentage can lead to unfair accusations in school or bad content decisions at work. For those using AI as a writing partner, it's just as important to understand these limits. If you need some tips on how to refine AI-assisted drafts so they truly reflect your own voice, check out our guide on how to pass AI detection.

Comparing Popular AI Detection Tools

To give you a better sense of the current landscape, here’s a quick comparison of some of the leading AI detection tools. Each uses slightly different methods and comes with its own set of strengths and weaknesses.

| Tool Name | Primary Technology Used | Best For | Noted Limitation |
|---|---|---|---|
| Originality.AI | Machine Learning, NLP | Content marketers and publishers | Can be overly sensitive to highly structured writing |
| GPTZero | Perplexity & Burstiness Analysis | Educators and students | Less effective on heavily edited or "humanized" text |
| Turnitin | Deep Learning, Integrated Plagiarism Check | Academic institutions | Accuracy can drop with short or mixed-source text |
| Sapling | Transformer-based Models | Business and customer support communications | Primarily focused on sentence-level, not holistic text |

This table isn't exhaustive, but it shows that there’s no single "best" tool for every situation. The right choice often depends on what kind of text you're analyzing and how much risk you're willing to accept.

Why Context Is King

Ultimately, an AI detector's accuracy is only as good as the context it's given. A few factors can easily throw off even the best tools:

- Short Text Samples: It's way harder to find reliable patterns in a single paragraph than in a 2,000-word essay.

- Creative Writing: Poetry, fiction, and song lyrics love to break the rules of grammar and structure, which can easily confuse detectors.

- Mixed-Authorship: Documents that blend human writing with pasted AI snippets are notoriously difficult to assess with any real accuracy.
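Why do short samples hurt so much? Many statistical detectors effectively average a per-word score, and averages over a few words are noisy. This small simulation makes a hypothetical assumption: each word's log-probability is an independent draw, slightly higher on average for AI text. It then counts how often genuinely human text crosses the midpoint threshold. All the constants are made up for illustration.

```python
import random
import statistics

# Hypothetical per-token scores (log-probabilities, in nats) for human vs. AI
# text. Real values depend on the scoring model, and tokens aren't independent.
HUMAN_MEAN, AI_MEAN, PER_TOKEN_STD = -5.0, -4.0, 2.5
THRESHOLD = (HUMAN_MEAN + AI_MEAN) / 2  # average above this => "looks AI"


def false_positive_rate(n_tokens: int, trials: int = 400, seed: int = 0) -> float:
    """Share of simulated human texts of length n_tokens that get flagged."""
    rng = random.Random(seed)
    flagged = 0
    for _ in range(trials):
        avg = statistics.fmean(rng.gauss(HUMAN_MEAN, PER_TOKEN_STD) for _ in range(n_tokens))
        if avg > THRESHOLD:
            flagged += 1
    return flagged / trials


for n in (30, 300, 2000):
    print(n, false_positive_rate(n))
```

With these numbers, a normal approximation puts the false-positive rate for 30-word samples near 14%. For 2,000-word samples it is effectively zero, even though the "author" is identical. Mixed-authorship documents make things harder still, because averaging blends the human and AI portions into one muddy number.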

Because of these variables, the only responsible way to use an AI detector is to treat its output as just one piece of a much larger puzzle.

A Few Common Questions About AI Detectors

Alright, we’ve covered the mechanics of how these tools tick. Now, let's get into the practical side of things and answer some of the questions that come up most often. Getting these details right is the key to using AI detectors responsibly.

Can You Really Trust Free AI Detectors?

Free AI detectors are fine for a quick, informal check, but they usually don't have the muscle of their paid counterparts. Many run on older detection models, which means lower accuracy, and they often cap how much text you can check at once.

If you’re using a detector for something important, like academic integrity or a serious content audit, a premium tool is almost always the better bet. They’re updated far more often to keep up with the latest language models, which is a must for getting reliable results.

Does Editing AI Text Make It Undetectable?

Yes and no. If you heavily edit and “humanize” AI-generated text, you can definitely fool a more basic detector. This usually involves rewriting sentences, adding personal stories, and even tossing in a few quirks to mess with the predictable AI rhythm.

But it’s a constant cat-and-mouse game. The best classifiers are trained to find deep statistical patterns that are incredibly tough to erase completely. While a good human touch can dramatically lower the AI score, it’s no guarantee that the text will fly under the radar of every advanced tool out there.

Why Does My Human Writing Get Flagged as AI?

This is one of the biggest headaches with AI detection—the dreaded "false positive." It's a critical limitation you have to keep in mind. It happens most often with writing that’s really structured, formal, or technical because that style can lack the messy, natural variation that detectors expect from a human.

Text from non-native English speakers can also sometimes trigger a false alarm, since it’s often learned from more formal, textbook-style examples. If you want to go deeper on this, there’s a great article that explores the reliability of AI detectors and other accuracy concerns.

This is exactly why a detector's score should be seen as a signal, not a verdict. A high AI score is a reason to look closer using your own judgment, not a conclusion in itself.

At the end of the day, context is everything. A single percentage can't tell you the whole story behind a piece of writing. When you combine these powerful tools with your own critical eye, you can sort out content authenticity with a lot more confidence.

Ready to move beyond detection and start refining your AI drafts? Natural Write instantly humanizes your text, making it clear, engaging, and ready for your audience. Try our free one-click humanizer at https://naturalwrite.com and see the difference.