Do AI Detectors Work? An In-Depth Accuracy Guide

August 6, 2025

So, do AI detectors actually work?

Yes, they're getting better all the time, but they are far from perfect. The top AI detectors can spot machine-generated text with impressive accuracy, but their performance is never guaranteed. They can, and do, make mistakes.

The Real Answer to How Well AI Detectors Work

When you run a piece of text through an AI detector, you’re basically asking a digital detective to look for clues. Think of it like a high-tech security system. It’s great at spotting obvious intruders but can sometimes be fooled by a clever disguise. Or worse, it might sound the alarm on a completely innocent person.

The real question isn't just whether they work, but how well they perform under different conditions.

The effectiveness of these tools comes down to how well they handle two critical types of errors:

- False Positives: This is when the detector wrongly flags human-written text as AI-generated. This is the biggest concern because it can lead to unfair accusations and serious consequences.

- False Negatives: This happens when the detector completely misses AI-generated content, letting it pass as human.

Finding the right balance between these two is the central challenge. A detector that's too strict will flag far too much, creating a lot of false positives. One that's too relaxed will let AI-generated text slip through as false negatives.
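To make that trade-off concrete, here is a minimal Python sketch. The scores and labels are made up for illustration, and `error_rates` is a hypothetical helper, not part of any real detector. It simply counts both error types at a few different cutoffs.

```python
def error_rates(scores, labels, threshold):
    """Return (false_positive_rate, false_negative_rate) at a given cutoff.

    scores: detector outputs in [0, 1]; higher means "more likely AI"
    labels: True if the text really is AI-generated, False if human-written
    """
    pairs = list(zip(scores, labels))
    # Human text scored at or above the cutoff is a false positive.
    false_pos = sum(1 for s, is_ai in pairs if s >= threshold and not is_ai)
    # AI text scored below the cutoff is a false negative.
    false_neg = sum(1 for s, is_ai in pairs if s < threshold and is_ai)
    humans = sum(1 for is_ai in labels if not is_ai)
    ais = sum(1 for is_ai in labels if is_ai)
    return false_pos / humans, false_neg / ais


# Made-up scores for illustration only: four human texts, four AI texts.
scores = [0.10, 0.30, 0.55, 0.20, 0.60, 0.45, 0.80, 0.95]
labels = [False, False, False, False, True, True, True, True]

for threshold in (0.25, 0.50, 0.75):
    fpr, fnr = error_rates(scores, labels, threshold)
    print(f"cutoff={threshold:.2f}  false positives={fpr:.0%}  false negatives={fnr:.0%}")
```

With this toy data, the strictest cutoff (0.25) wrongly flags half of the human samples and misses no AI text, while the most relaxed one (0.75) flags no humans but misses half of the AI samples.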

Understanding Detector Accuracy and Its Limits

The tech behind these tools is in a constant cat-and-mouse game with AI writers. Early detectors were easy to fool, but modern versions are much more sophisticated. Comparative studies show a massive leap in performance, with some tools boasting high detection rates.

For instance, some detectors caught text from advanced models like GPT-4o over 85% of the time, and a few specialized tools even claimed near-perfect accuracy on purely AI-generated drafts.

This back-and-forth is a genuine "arms race" between content generators and detectors. Detection tools keep improving, but the problem of false positives won't go away. Even the best tools can misclassify human writing up to 5% of the time, which works out to as many as 1 in 20 human-written pieces being wrongly flagged. That is a huge deal, especially in schools or workplaces where the stakes are incredibly high.
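A quick back-of-the-envelope calculation shows why a 5% false positive rate matters so much. The sketch below uses the 85% detection rate and 5% false positive rate mentioned above. The 10% share of AI-written submissions is purely an assumption for illustration, and `share_of_flags_that_are_human` is a hypothetical helper name.

```python
def share_of_flags_that_are_human(ai_share, detection_rate, false_positive_rate):
    """Of all texts the detector flags, what fraction was written by a human?"""
    flagged_ai = ai_share * detection_rate
    flagged_human = (1 - ai_share) * false_positive_rate
    return flagged_human / (flagged_ai + flagged_human)


# Assumption: 10% of submissions are AI-written (illustrative, not from a study).
wrong_share = share_of_flags_that_are_human(
    ai_share=0.10,
    detection_rate=0.85,
    false_positive_rate=0.05,
)
print(f"{wrong_share:.1%} of flagged texts are actually human-written")
```

Under these assumptions, roughly a third of all flagged texts would actually be human-written. When most submissions are genuine, even a small error rate produces a lot of wrongful flags.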

To give you a clearer picture, here’s a quick breakdown of the factors at play.

Quick Look: AI Detector Effectiveness

This table summarizes the key factors influencing the performance and reliability of AI content detection tools.

| Factor | Impact on Accuracy | Key Takeaway |
|---|---|---|
| AI Model Used | High | Newer, more advanced models (like GPT-4o) are harder to detect than older ones. |
| Human Editing | High | Even light human editing can significantly lower the chances of detection. |
| Content Complexity | Medium | Simple, factual text is often harder to classify than creative or nuanced writing. |
| Detector Quality | High | Not all detectors are created equal; some are far more reliable than others. |
| Language | Medium | Most detectors are optimized for English and may be less accurate for other languages. |

Ultimately, these tools are powerful, but they aren't flawless judges.

Key Takeaway: AI detectors are powerful guides, not absolute judges. A high AI score is a strong signal that warrants further investigation, but it should never be treated as undeniable proof on its own.

Understanding how AI detectors work is the first step toward using them the right way. Once you know what they look for—and where their blind spots are—you can make much more informed decisions. Their real value isn't in a single score, but in being one of several tools you use to ensure your content has integrity.

How AI Detectors Actually See Your Text

When you paste text into an AI detector, what’s really happening behind the curtain? It’s not just scanning for typos. Think of it more like a digital detective, hunting for the subtle fingerprints that machines leave behind.

These tools mainly use two clever methods to make an educated guess about whether a human or a machine wrote something. Once you get how they work, it's a lot clearer why they’re sometimes spot-on—and other times, completely miss the mark. A good starting point is understanding generative AI and how it constructs text in the first place.

The Linguistic Analysis Method

The first approach, linguistic analysis, is like having a literary critic on staff who has devoured millions of books. This critic has an almost uncanny knack for spotting tell-tale patterns in sentence structure, word choice, and the overall flow of the text.

AI models learn to write by training on massive datasets of human writing. In the process, they get really good at picking up on the most common, statistically probable ways to string words together. The result? Text that’s often grammatically flawless but feels a little too perfect, a little too predictable.

This method sniffs out specific clues:

- Uniform Sentence Length: AI often spits out paragraphs where every sentence is roughly the same length. It lacks the natural, varied rhythm of human writing.

- Repetitive Phrasing: You might notice a model overusing certain transitions or sentence starters simply because they’re statistically common.

- Predictable Word Choice: An AI will almost always choose the most expected word, whereas a human writer might grab a more creative or offbeat synonym to make a point.

A person might follow a long, winding sentence with a short, punchy one. That’s a natural cadence AI struggles to replicate, leading to text that feels polished but soulless.
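Two of these clues are easy to approximate in a few lines of Python. This is only a rough sketch, not how any particular detector works: `opener_counts` and `type_token_ratio` are hypothetical helpers, the sentence splitter is deliberately naive, and the sample text is invented.

```python
import re
from collections import Counter


def split_sentences(text):
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def opener_counts(text):
    """Count how often each first word is used to start a sentence."""
    openers = [s.split()[0].lower().strip(",;:") for s in split_sentences(text)]
    return Counter(openers)


def type_token_ratio(text):
    """Unique words divided by total words; lower means more repetition."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0


sample = (
    "Additionally, the tool is fast. Additionally, the tool is cheap. "
    "Additionally, the tool is simple."
)
print(opener_counts(sample).most_common(1))
print(round(type_token_ratio(sample), 2))
```

In the invented sample, every sentence opens with the same transition and fewer than half of the words are unique. That is the kind of repetitive pattern this method looks for.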

The Perplexity and Burstiness Model

The second method sounds a bit technical, but the idea behind it is surprisingly simple. It all comes down to measuring two things: perplexity and burstiness.

Imagine reading a story where you can easily guess the next word in every single sentence. That’s a sign of low perplexity—it's predictable. AI-generated text often has very low perplexity because it’s built on statistical models that are always playing the odds and picking the most likely next word.

Key Insight: Human writing is wonderfully messy. We use slang, bend grammar rules for effect, and make surprising connections. This unpredictability gives our writing high perplexity, a trait AI finds incredibly difficult to fake.
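For the curious, perplexity has a simple formula: the exponential of the average negative log-probability a language model assigns to each token. The sketch below assumes you already have those per-token probabilities. The numbers here are invented, and a real detector would get them from a language model.

```python
import math


def perplexity(token_probs):
    """Exponential of the average negative log-probability per token."""
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Invented probabilities: how likely the model thought each next word was.
predictable = [0.90, 0.80, 0.90, 0.85]  # the model saw every word coming
surprising = [0.20, 0.05, 0.30, 0.10]   # the model was caught off guard

print(f"predictable text: {perplexity(predictable):.2f}")
print(f"surprising text:  {perplexity(surprising):.2f}")
```

The predictable sequence scores much lower than the surprising one, which is the pattern described above: the easier each next word is to guess, the lower the perplexity.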

Burstiness, on the other hand, is all about the rhythm of the text.

Think of it like a heartbeat on a hospital monitor. Human writing has peaks and valleys—a jumble of long, complex sentences mixed with short, simple ones. This creates a "bursty" pattern. In contrast, AI writing often has a much flatter, more consistent rhythm, like a steady, monotonous hum.
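Burstiness has no single agreed-upon formula. One simple proxy, used here purely as an assumption for illustration, is how much sentence lengths vary relative to their average (the coefficient of variation). The `burstiness` helper and both sample texts are made up.

```python
import re
import statistics


def sentence_lengths(text):
    """Word count of each sentence, using a naive split on ., ! and ?."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Standard deviation of sentence length divided by the mean length."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


flat = "The tool is fast. The tool is cheap. The tool is simple."
varied = (
    "Stop. The detector looked at the essay for a long time "
    "before it finally gave up. Why?"
)

print(sentence_lengths(flat), round(burstiness(flat), 2))
print(sentence_lengths(varied), round(burstiness(varied), 2))
```

The flat sample has three sentences of identical length, so its score is zero. The varied sample mixes one-word sentences with a fifteen-word one and scores far higher.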

Here’s a quick breakdown:

| Characteristic | Human Writing | AI-Generated Writing |
|---|---|---|
| Perplexity | High (Unpredictable) | Low (Predictable) |
| Burstiness | High (Varied rhythm) | Low (Uniform rhythm) |

So when a detector scans your document, it's doing more than just reading. It's measuring these statistical qualities. If the text is too predictable (low perplexity) and has a flat, even rhythm (low burstiness), the tool is going to flag it as likely AI-generated. This is a big part of why these tools work so well on unedited AI drafts: they're spotting a machine's mathematical precision.
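As a toy illustration, the decision described above might look something like this in Python. The function name and both cutoff values are hypothetical. Real detectors use trained models rather than two hand-picked thresholds.

```python
def looks_ai_generated(perplexity, burstiness, max_perplexity=20.0, max_burstiness=0.3):
    """Flag text only when it is both predictable and evenly paced.

    The default cutoffs are arbitrary placeholders, not values from any real tool.
    """
    return perplexity < max_perplexity and burstiness < max_burstiness


print(looks_ai_generated(perplexity=12.0, burstiness=0.15))  # predictable and flat
print(looks_ai_generated(perplexity=45.0, burstiness=0.80))  # surprising and varied
print(looks_ai_generated(perplexity=12.0, burstiness=0.80))  # predictable but varied
```

Only the first case gets flagged. Requiring both signals at once is one way to avoid flagging human text that happens to be low on just one of them.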

Putting Modern AI Detector Accuracy to the Test

So we know how these tools are supposed to work in theory. But how well do they actually perform out in the wild? The answer isn't a simple yes or no.

The truth is, accuracy exists on a surprisingly wide spectrum. It all depends on the sophistication of the detector, which AI model generated the text, and—most importantly—how much a human has tweaked the content. When you put these tools to the test, the results can be all over the map. Some detectors are brilliant against certain AI models, while others fall flat.

This isn't about finding one "best" detector. It's about getting a realistic sense of what you can expect. The numbers tell a fascinating and complicated story.

A Look at Real-World Performance Data

There’s a constant "arms race" between AI content generators and the tools designed to spot them. This means performance benchmarks are always shifting. Still, recent studies give us a pretty clear snapshot of where things stand today, and the top-tier detectors have become remarkably good.

In fact, some of the best AI detectors now claim near-perfect accuracy on raw, machine-generated text. One study found that a leading tool, Copyleaks, hit an average detection accuracy of 99.52%. In that study, it correctly flagged almost all AI-written content thrown at it while producing zero false positives on the human writing tested.

On the other end, tools like Sapling have shown less reliability, hovering around 72% accuracy, which means roughly one sample in four was misclassified. You can dig into more of these performance metrics in this analysis of AI detection in 2025.

It just goes to show you: not all detectors are created equal. Their effectiveness really hinges on what they were trained on and the specific algorithms they use to spot those AI fingerprints.

Why Accuracy Varies So Drastically

So why does one tool hit nearly 100% while another barely scrapes past 70%? The huge swings in accuracy come down to a few key factors that make consistent detection a real challenge.

If you really want to know if these tools work, you have to understand these variables.

- The AI Model Being Tested: A detector trained to spot patterns from an older model like GPT-3 might get easily fooled by the more sophisticated, human-like text from GPT-4o or Claude 3. The goalposts are always moving.

- The Detector's Training Data: A detector's "education" is everything. If it learned from a massive, diverse library of both human and AI text across tons of styles and topics, it's going to be way more robust and reliable.

- The "Human-in-the-Loop" Factor: This is the big one. Pure, unedited AI text is the easiest to catch. But the moment a human editor steps in, tweaking sentences, fixing clunky phrases, and injecting a personal voice, the trail goes cold and detection gets much harder.

Key Insight: A detector's score is a probability, not a certainty. Think of it less like a final verdict and more like an educated guess based on the available evidence. A high AI score on raw AI text is a strong signal, but a low score on heavily edited text doesn't guarantee it's 100% human.

Ultimately, the data shows us that while the best detectors are incredibly powerful, you have to interpret their results with a healthy dose of context. They're just one piece of the puzzle, not the whole picture.

Why Human Expertise Still Beats Any Algorithm

For all the talk about complex technology, the single best tool for spotting AI-generated text might be the one you already have: your own judgment. AI detectors are a decent first pass, sure, but they’re no substitute for the nuanced understanding of an experienced writer, editor, or even just a sharp reader.

Algorithms are built to find mathematical patterns—things like predictability and repetition. But humans are uniquely equipped to sense something far more subtle and important: a lack of soul. We can feel it when writing has no distinct voice, when the phrasing is technically correct but emotionally hollow, or when an argument flows logically but completely misses the point.

The Irreplaceable Human Touch

An AI detector sees text as a spreadsheet of data points. A human reader sees a conversation. That fundamental difference is why our intuition is so powerful. We pick up on the tiny imperfections and surprising quirks that make writing feel real.

Think of it like this: an algorithm can tell you if a song hits all the right notes with perfect timing. But only a human can tell you if that song has any feeling.

This isn't just a gut feeling, either. A recent study found that experts who use AI regularly can identify AI-generated text with individual accuracy ranging from 59.3% to 97.3%. When their judgments were combined, these experts correctly classified 299 out of 300 articles, a feat almost no commercial detector could match. You can dig into the full findings to see just how effective the human-in-the-loop approach is.

It shows that as people get more familiar with AI’s writing style, their ability to spot it gets dramatically better. That makes human insight a critical layer of verification.

Key Insight: Don't dismiss your gut feeling. If a piece of writing feels "off" or lacks a clear voice, it's worth investigating further, no matter what a detector says. Trusting your instincts is a valid part of the evaluation process.

Where Humans Outshine the Machines

So, what are we looking for that an algorithm might miss? An experienced eye can spot flaws that go way beyond statistical analysis.

Here are the key areas where human judgment still wins:

- Detecting a Lack of Voice: AI really struggles to create a unique, consistent authorial voice. A human reader can easily tell when writing feels generic, like it was written by a committee with zero personality.

- Spotting Logical Gaps: AI can spit out sentences that are grammatically perfect but make no real-world sense together. It might present a string of facts without building a coherent argument, a flaw a critical reader will notice immediately.

- Recognizing Unnatural Phrasing: While AI is improving, it still produces clunky or awkward phrases a native speaker would never use. These are the "tells" that often slip right past automated checks.

- Judging Context and Nuance: This is a big one. AI has no true understanding. It can't grasp irony, sarcasm, or the subtle cultural context that gives writing its depth and meaning.

Ultimately, this isn't about humans versus machines. The smartest approach is a partnership. Use AI detectors as a helpful assistant to flag potential issues, but always let an expert human eye make the final call.

Common AI Detector Myths and Costly Mistakes

As AI detectors pop up everywhere, so do a lot of myths and bad assumptions. Believing these can lead to some pretty serious mistakes, from wrongly accusing a student of cheating to publishing content that just isn't ready. Let's clear the air and bust the most dangerous myths so you can use these tools the right way.

The biggest mistake people make is treating a detector's score as the absolute truth. Seeing a "100% Human" or "98% AI" score feels definitive, but it's not. These scores are just probabilities—an educated guess based on statistical patterns. A high AI score doesn't automatically prove cheating, and a perfect human score doesn't guarantee the text is original.

The Myth of Infallible Accuracy

One of the most persistent myths is that if a tool says text is AI-generated, it must be true. This leads directly to the serious problem of false positives, where perfectly good human writing gets flagged. And it happens more often than you'd think, especially with certain writing styles.

For example, writers who aren't native English speakers often use more formal, structured language that can accidentally mimic AI patterns. The same goes for highly technical or academic writing, which naturally has less "burstiness" and more uniform sentence structures. It's just the nature of the content.

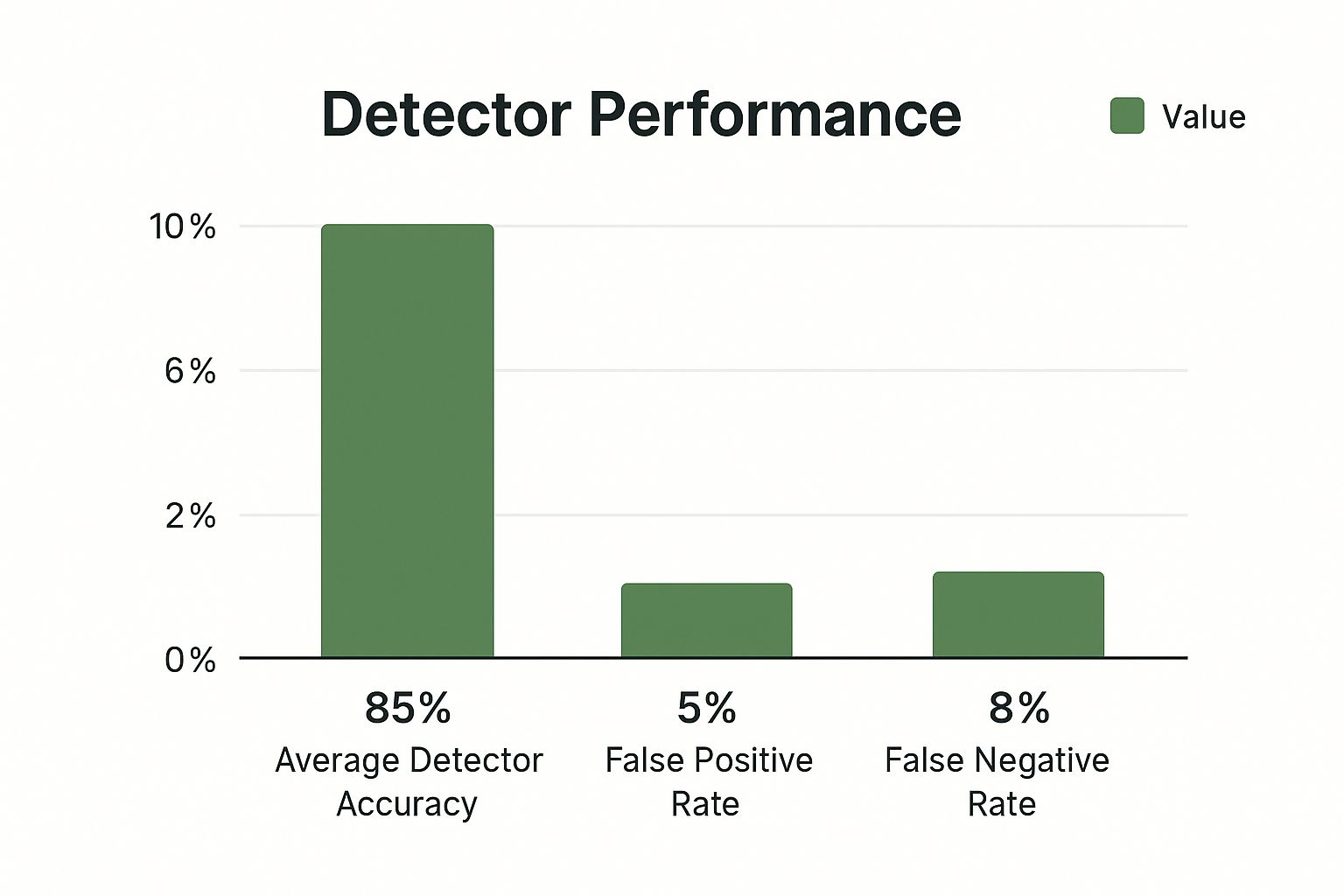

The image below gives a snapshot of typical AI detector performance, showing the constant tug-of-war between getting it right and making errors.

While the average accuracy might look high, that lingering false positive rate means some human-written content will always be misidentified. It’s unavoidable.

Key Takeaway: An AI detector score is a signal to investigate further, not a final verdict. Never use a single score to make a serious decision about academic integrity or professional conduct without finding more evidence.

Another common pitfall is relying on just one tool. As we’ve seen, accuracy can swing wildly between detectors. A piece of text might get a 90% AI score on one platform and a 20% AI score on another. Running your text through multiple tools helps create a more balanced and reliable signal, protecting you from the quirks or weak spots of any single algorithm.

The Messy Middle Ground of Mixed Content

A lot of people think text is either 100% human or 100% AI, but the reality is much messier. Today, many of us use AI as a writing assistant—for brainstorming ideas, sketching out an outline, or untangling a clumsy sentence. This creates a hybrid text that simply doesn't fit into a neat binary box.

This "human-in-the-loop" workflow is a massive blind spot for many detectors. Even simple paraphrasing or a light edit of an AI-generated paragraph can be enough to fool a tool into giving a "human" score. If you're curious about the specifics, our guide on how to bypass AI detection digs into the techniques that make text fly under the radar.

This whole situation creates a huge gray area. Where do you draw the line? If a writer uses AI for ideas but writes the content themselves, is it AI-written? Most would say no. But if they generate a draft and then edit it heavily, the answer gets a lot fuzzier.

To help you navigate this, let's look at some common myths versus the reality of how these tools work.

AI Detector Myths vs Reality

| Common Myth | The Reality |
|---|---|
| A "100% Human" score proves no AI was used. | It just means the final text doesn't match known AI patterns. AI could have been used for brainstorming, outlining, or early drafts. |
| AI detectors are lie detectors for text. | They are simply pattern-matching tools. They compare text against statistical models; they don't determine intent or "truth." |
| False positives are a rare, minor issue. | They are a significant and persistent problem, especially for non-native English speakers and in technical or academic writing. |
| If you find a good detector, you only need one. | Accuracy varies widely. Cross-checking with 2-3 tools provides a much more reliable and nuanced picture. |

Ultimately, understanding AI detectors means accepting their limits. They're useful for flagging content that needs a closer look, but they are not—and may never be—perfect judges of where a text came from. They should always be used to supplement human judgment, not replace it.

A Practical Framework for Using AI Detectors

Knowing AI detectors have their limits is one thing, but actually using them effectively is a whole different ballgame. To get any real value out of these tools, you need a smart approach that goes beyond just scanning a document and blindly trusting the score. A good workflow treats the detector as a guide, not a final verdict.

This mindset is key for everyone involved—from teachers and editors trying to ensure integrity to writers wanting to protect their original work. The idea is to use these tools strategically, letting their data inform your decisions instead of making them for you. It's less about a single "gotcha" moment and more about building a complete picture of where the content came from.

Adopt the Rule of Three

First things first: never rely on a single AI detector. As we’ve seen, accuracy can be all over the place. One tool might flag a text as 95% AI, while another calls it 30% AI. Using just one is like getting a single opinion on a major life decision—it's just too risky.

A much better way is to run your text through at least three different, reputable detectors. Then, look for a consensus.

- If all three agree that the content is very likely AI-generated, you’ve got a strong signal that it’s time to take a closer look.

- If the results are all over the map, that’s your sign that the tools alone can't give you a definitive answer.

This triangulation method helps cancel out the individual quirks and weak spots of any single algorithm, giving you a far more reliable read.
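If you check content regularly, the consensus rule is easy to write down. Here is a minimal Python sketch. The `triangulate` function, the 0.8 "high score" cutoff, and the 0.3 disagreement cutoff are all hypothetical choices, and the scores are assumed to be AI-likelihood values between 0 and 1 that you copied from each tool.

```python
def triangulate(scores, high=0.8, max_spread=0.3):
    """Summarize AI-likelihood scores (0 to 1) from several detectors."""
    if len(scores) < 3:
        raise ValueError("use at least three detectors")
    # Every tool gives a high score: a strong signal worth a closer look.
    if all(score >= high for score in scores):
        return "strong signal: take a closer look"
    # The tools are far apart: they cannot settle the question alone.
    if max(scores) - min(scores) > max_spread:
        return "inconclusive: the tools disagree"
    return "no strong signal"


print(triangulate([0.95, 0.91, 0.88]))
print(triangulate([0.95, 0.30, 0.60]))
```

The first set of scores agrees, so it comes back as a strong signal. The second set, with one tool at 95% and another at 30%, comes back as inconclusive, which is your cue to rely on human review instead.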

From Accusation to Conversation

For editors and educators, the biggest mental shift is changing why you use a detector. It shouldn't be a weapon for punishment. It should be a tool to start a conversation about quality and integrity. A high AI score isn't proof of cheating; it's a reason to ask questions.

A high AI score should prompt a discussion, not an accusation. Use it as an opportunity to discuss the writing process, review drafts, and understand how the content was created. This educational approach fosters trust and reinforces the importance of authentic work.

Understanding the fine points of AI detection can really make a difference in your digital efforts, including your overall content marketing strategies.

Strategies for Creators and Writers

If you're a writer, your goal is to build a distinctive voice that doesn't accidentally trigger false positives. AI-generated text often feels flat because it lacks "burstiness"—a natural, varied rhythm. You can fight this by focusing on what makes writing human.

- Vary Sentence Structure: Make a conscious effort to mix long, winding sentences with short, punchy ones. This creates a natural rhythm that machines find difficult to mimic.

- Inject Personality: Use unique analogies, tell personal stories, and maintain a consistent authorial tone. The more your writing sounds like you, the less it will sound like an algorithm.

- Refine Word Choice: Step away from safe, overly formal language. Go for more specific, interesting, and sometimes even quirky words that show off your personal style.

For creators using AI as a starting point, learning how to make AI writing undetectable comes down to mastering these same techniques. The key is to make sure the final piece is a true reflection of your own ideas and voice, not just a clean copy of the machine’s first draft.

Common Questions About AI Detectors

When you start using AI detectors, a lot of questions pop up. It's smart to be curious. Getting straight answers is the only way to use these tools the right way, so let's clear up some of the most common ones.

Can an AI Detector Ever Be 100% Accurate?

Nope. Not a single one.

While the best tools are impressively sharp, 100% accuracy is just not possible. Detectors make two kinds of mistakes: flagging human writing as AI-generated (false positives) or completely missing text that was written by AI (false negatives).

It's best to think of a score from a detector as a strong suggestion, not a final verdict. How accurate it is in the real world depends on a lot of things: the AI that wrote the text, how complex the subject is, and how much a human edited it afterward. For any decision that matters, combine the tool's result with your own judgment.

Will I Get Flagged for Using AI to Brainstorm or Outline?

That's highly unlikely.

AI detectors aren't looking at your brainstorming notes. They analyze the finished product—the words, the sentences, the rhythm, and the patterns that make up the final text. They're built to spot the statistical fingerprints that a machine leaves behind.

If you use an AI to spitball ideas or map out a structure, those "fingerprints" won't be in your writing. As long as you’re the one crafting the actual paragraphs, the detector is analyzing your work, not the prep work.

Do These Tools Work for Languages Other Than English?

It's a mixed bag, and effectiveness varies wildly.

Most of the big-name AI detectors were trained on enormous English-language datasets. That’s their comfort zone, and it’s where they’re most reliable.

Many are starting to add other languages, but their accuracy is often much lower and hasn't been tested nearly as much. If you're checking content in another language, you really need to find a tool specifically built and proven to work for that language. Otherwise, the results aren't very dependable.

How Do AI Detectors Affect My SEO?

Directly, they don't. Google doesn't use third-party AI detectors to rank pages. Simple as that.

Google's main goal has always been to reward high-quality, helpful content made for people. The tool used to create it doesn't matter as much as the quality of the result.

However, detectors are incredibly useful for SEOs and content managers. Think of them as a quality control step. You can use one to catch low-effort, spammy AI content before it gets published, protecting your site from the kind of stuff that would hurt your search performance. Good SEO still comes down to creating great content that people actually want to read.
