What Do AI Detectors Look For in Your Writing

January 29, 2026

Think of an AI detector as a digital detective. It doesn't read your content for meaning or understand the ideas you're trying to convey. Instead, it acts like a forensic analyst, dusting for the statistical fingerprints that AI models often leave behind.

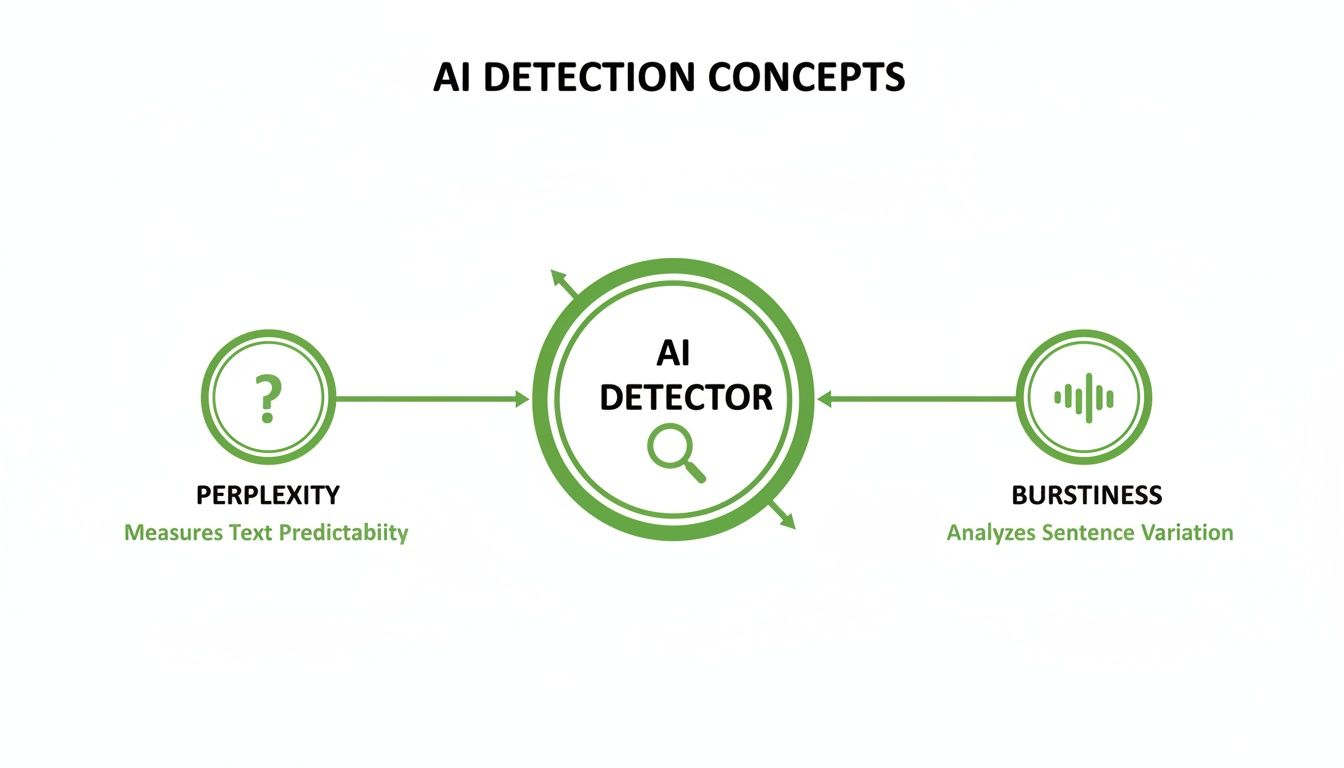

The two biggest clues it hunts for are low perplexity (word choices that are highly predictable) and low burstiness (sentence lengths and structures that are highly uniform).

The Hidden Patterns AI Detectors Look For

So, what are these detectors actually looking for? It's less about creative genius and more about math. AI models are trained to predict which word is most likely to come next, and when they generate text they tend to choose high-probability words, one after another. This process creates text that is often unnaturally smooth and even-keeled.

Human writing, on the other hand, is gloriously messy. We write in bursts—a long, winding sentence full of clauses, followed by a short, punchy one. We make surprising word choices. This natural, uneven rhythm is what detectors call burstiness, and its absence is a huge red flag.

Together, these two core signals, perplexity and burstiness, form the backbone of AI detection.

These metrics create a statistical baseline. Think of a human writer as a jazz musician improvising a solo, full of unexpected notes and rhythm changes. An AI, in contrast, is more like a metronome, producing a steady, predictable beat that a machine can easily spot.

Predictability as a Red Flag

This predictability is the Achilles' heel of most AI-generated content. Large language models (LLMs) are, at their core, incredibly sophisticated prediction machines. Their goal is to generate text that makes sense, and they do that by choosing the safest, most probable words.

A key insight from text analysis is that AI writing often has a lower perplexity score, meaning its word sequences are more common and expected than those found in human writing. This statistical uniformity is one of the easiest patterns for a detector to spot.

Simply put, AI tools are designed to play it safe. They string together words and phrases that have a high probability of appearing together based on the mountains of data they were trained on. The result is content that might be grammatically perfect but often feels bland, generic, and lacking a distinct voice—a dead giveaway for a detection algorithm.

To give you a clearer picture, here’s a quick summary of the main signals these tools are trained to find.

Key Signals Used by AI Detection Tools

This table breaks down the primary characteristics and patterns that AI detectors analyze to distinguish between human and machine-written content.

| Signal | What It Measures | Why It Matters for Detection |
|---|---|---|
| Perplexity | The randomness or unpredictability of word choices in a text. | Humans often use unexpected words. AI sticks to common, high-probability words, leading to low perplexity scores that are easy to flag. |
| Burstiness | The variation in sentence length and structure. | Human writing has a natural rhythm of long and short sentences. AI often produces sentences of similar length, creating a robotic, monotonous flow. |
| Token Distribution | The frequency and pattern of specific words or phrases. | LLMs can overuse certain "AI-sounding" words (e.g., "delve," "leverage," "unveil") or common transition phrases, creating a detectable pattern. |
| Stylometry | The unique stylistic "fingerprint" of a writer, including vocabulary richness and sentence complexity. | AI-generated text often lacks a consistent, unique style and tends to regress to a generic, formal tone that detectors can identify. |
| Punctuation Patterns | The usage of commas, periods, and other punctuation marks. | AI models often follow rigid grammatical rules for punctuation, whereas humans use it more flexibly and sometimes unconventionally for emphasis or style. |
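To make the Token Distribution row concrete, here is a minimal Python sketch that measures how often a text uses "AI-sounding" words. The stem list (`MARKER_STEMS`) holds only the three examples from the table and is a hypothetical stand-in; real detectors learn which tokens matter, and how much, from large training sets rather than from a hand-picked list.

```python
import re

# Hypothetical marker stems built from the table's examples ("delve", "leverage",
# "unveil"). Matching on stems lets "delves" or "leveraging" count too.
MARKER_STEMS = ("delv", "leverag", "unveil")


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower())


def marker_rate(text: str, stems: tuple[str, ...] = MARKER_STEMS) -> float:
    """Return marker-word hits per 100 words (0.0 for empty text)."""
    words = tokenize(text)
    if not words:
        return 0.0
    # str.startswith accepts a tuple, so one call checks every stem.
    hits = sum(1 for word in words if word.startswith(stems))
    return 100.0 * hits / len(words)


ai_like = "Let us delve into the data. We leverage insights to unveil trends."
human_like = "I dug through the numbers over coffee and found a weird spike."
print(marker_rate(ai_like))     # 25.0 (3 hits in 12 words)
print(marker_rate(human_like))  # 0.0
```

A single rate like this proves nothing on its own, since a human can legitimately write "delve." Detectors combine many such signals before reaching a verdict.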

Understanding these signals is the first step in learning not only how detectors work but also how you can ensure your own writing—whether AI-assisted or not—retains an authentic, human touch.

Understanding Perplexity and Burstiness: The Telltale Signs

To really get what AI detectors are looking for, we need to pull back the curtain on two core ideas: perplexity and burstiness. These aren't just fancy tech terms; they're the statistical fingerprints that can distinguish human writing from something a machine cooked up.

Think of perplexity as a measure of predictability. If I start a sentence, "The cat sat on the...," your brain almost instantly jumps to "mat." That’s low perplexity. The next word is obvious, expected, and doesn't surprise anyone.

AI models, at their core, are massive prediction engines. They're trained to pick the most statistically likely word to follow the last one. This makes their writing incredibly smooth and grammatically perfect, but it can also make it feel a bit… bland. There’s no spark of the unexpected.

Human writing, on the other hand, is full of surprises. We choose weird words, craft unique phrases, and sometimes go in directions an algorithm would never see coming. That unpredictability, that higher perplexity, is a hallmark of human creativity.
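Perplexity has a precise definition: it is the exponential of the average negative log-probability a model assigned to the words that actually appeared. The sketch below computes it from per-word probabilities. The probabilities themselves are made up for illustration; a real detector would obtain them by running the text through a language model.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the mean negative log-probability of the observed tokens.

    Each entry is the probability the model gave to the word that actually
    came next. Lower perplexity means the text was easier to predict.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Hypothetical probabilities for "sat", "on", "the", and the final word,
# each given the words before it.
predictable = [0.9, 0.8, 0.9, 0.85]  # "The cat sat on the mat."
surprising = [0.9, 0.8, 0.9, 0.02]   # "The cat sat on the theorem."

print(round(perplexity(predictable), 2))  # 1.16
print(round(perplexity(surprising), 2))   # 2.96
```

One unexpected word is enough to more than double the score, which is exactly the kind of surprise human writing is full of.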

The Rhythm and Flow of Your Writing

Now, let's talk about burstiness. This is all about the rhythm and variation in your sentences. When you write naturally, you don't use sentences that are all the same length. You mix it up. A short, punchy sentence lands with impact, followed by a longer one that meanders and adds detail.

A human writer might create a passage like this:

- "The results were in. After months of grueling research, cross-referencing hundreds of data points, and more than a few late nights fueled by coffee, the team finally concluded that their initial hypothesis was, against all odds, completely right."

See that? A quick jab followed by a long, descriptive follow-up. That’s burstiness in action.

AI-generated text often misses this natural cadence. Since it's optimizing for clarity on a sentence-by-sentence basis, it can fall into a monotonous pattern. The sentences end up being roughly the same length and structure, which feels robotic to a human reader and is a massive red flag for a detector.

An AI detector is basically a pattern-finder. When it sees that sentence lengths are too consistent and word choices are too predictable, it's more likely to flag the text as machine-generated.

For instance, an AI might phrase that same idea like this:

- "The team analyzed all the extensive data. They worked diligently on the project for many months. The initial hypothesis was eventually proven correct. Their conclusion was based on the accumulated evidence."

The information is there, but the rhythm is gone. It’s flat, uniform, and a classic AI giveaway.
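There is no single standard formula for burstiness, and detectors differ in how they measure it. One simple proxy, used here as an assumption, is the coefficient of variation of sentence length: the standard deviation of words per sentence divided by the mean. Applied to the two example passages above, it separates them clearly.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Words per sentence, splitting after ., !, or ? followed by whitespace."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [len(s.split()) for s in sentences if s]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (population std / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # a single sentence has no rhythm to vary
    return statistics.pstdev(lengths) / statistics.mean(lengths)


human = ("The results were in. After months of grueling research, cross-referencing "
         "hundreds of data points, and more than a few late nights fueled by coffee, "
         "the team finally concluded that their initial hypothesis was, against all "
         "odds, completely right.")
ai = ("The team analyzed all the extensive data. They worked diligently on the "
      "project for many months. The initial hypothesis was eventually proven "
      "correct. Their conclusion was based on the accumulated evidence.")

print(sentence_lengths(human), round(burstiness(human), 2))  # [4, 34] 0.79
print(sentence_lengths(ai), round(burstiness(ai), 2))        # [7, 9, 7, 8] 0.11
```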

These two signals—the predictability of your words (perplexity) and the rhythm of your sentences (burstiness)—are what detectors use to build a statistical case for who, or what, wrote the text. To dive deeper into the nuts and bolts, check out our complete guide on how AI checkers work.

How an AI Detector Learns to Spot a Robot

So, how does a tool actually learn to tell the difference between human and AI writing? It's not magic, but it does involve a ton of data and some pretty smart training. Think of it like sending a detective to a specialized academy.

To train an art expert to spot a forgery, you wouldn't just give them a textbook on Picasso. You’d make them study thousands of genuine masterpieces right alongside thousands of convincing fakes. Over time, they develop an instinct for the subtle brushstrokes, the texture of the canvas, and the specific shades of paint that separate the real deal from an imitation.

AI detectors get the same kind of education, but with words. They’re fed millions of documents—a massive library of verified human writing from books, news articles, and academic papers, plus an equally huge collection of text generated by models like GPT-4.

The Two Main Training Philosophies

This training process usually takes one of two directions.

The first is a feature-based approach. This is like teaching the art expert to analyze the chemical composition of the paint and measure the spacing between brushstrokes. The detector is trained to look for specific, quantifiable statistical clues that tend to give AI away.

- Word Frequency: Are certain words and phrases popping up a little too often?

- Sentence Complexity: Is the structure too uniform, or does it lack variety?

- Vocabulary Distribution: How diverse and rich is the vocabulary?

This method breaks writing down into cold, hard numbers, hunting for the mathematical fingerprints that point to a machine.
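The three clues in the list above map naturally onto numbers. This is a minimal sketch assuming simple regex tokenization; the function name `extract_features` and the exact features are illustrative, not taken from any particular detector.

```python
import re
import statistics
from collections import Counter


def extract_features(text: str, top_n: int = 3) -> dict:
    """Turn a text into a few numeric features a feature-based detector might use."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[a-z']+", text.lower())
    lengths = [len(s.split()) for s in sentences]
    counts = Counter(words)
    return {
        # Word frequency: the most repeated words and how often they appear.
        "top_words": counts.most_common(top_n),
        # Sentence complexity: average length and how much it varies.
        "mean_sentence_len": statistics.mean(lengths) if lengths else 0.0,
        "sentence_len_stdev": statistics.pstdev(lengths) if lengths else 0.0,
        # Vocabulary distribution: distinct words divided by total words.
        "type_token_ratio": len(counts) / len(words) if words else 0.0,
    }


features = extract_features("The cat sat. The cat ran.")
print(features["top_words"])         # [('the', 2), ('cat', 2), ('sat', 1)]
print(features["type_token_ratio"])  # 0.6666666666666666
```

A real feature-based detector would feed a vector of such numbers, often far more of them, into a trained classifier.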

The second path is a model-based approach. Instead of just picking apart individual features, this type of detector learns the overall "vibe" of AI text. It's much closer to the art expert who gets an intuitive feeling—a sense that something just feels off about a fake, even if they can't immediately say why.

This kind of model compares a new piece of text against its entire library of human and AI examples to see which one it fits best. For a deeper dive into the technical side, the guide Machine Learning for Businesses: A Practical Guide To AI Integration gives a good overview of the concepts that power these systems.
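Modern model-based detectors are typically large neural networks, far too big to show here. As a deliberately tiny stand-in for the idea of "which library does this text fit best," the sketch below trains one add-one-smoothed unigram language model on hypothetical human examples and another on hypothetical AI examples, then labels new text by whichever model finds it more probable. This is closer to a naive Bayes classifier than to a production detector, and every name and training sentence in it is illustrative.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class UnigramModel:
    """Add-one-smoothed unigram language model trained on one class of text."""

    def __init__(self, documents: list[str], vocab: set[str]):
        self.counts = Counter(w for doc in documents for w in tokenize(doc))
        self.total = sum(self.counts.values())
        self.vocab_size = len(vocab) + 1  # +1 reserves probability for unseen words

    def log_likelihood(self, text: str) -> float:
        return sum(
            math.log((self.counts[w] + 1) / (self.total + self.vocab_size))
            for w in tokenize(text)
        )


def train(human_docs: list[str], ai_docs: list[str]):
    vocab = {w for doc in human_docs + ai_docs for w in tokenize(doc)}
    return UnigramModel(human_docs, vocab), UnigramModel(ai_docs, vocab)


def classify(text: str, human_model: UnigramModel, ai_model: UnigramModel) -> str:
    """Label text by whichever model makes it more probable."""
    if ai_model.log_likelihood(text) > human_model.log_likelihood(text):
        return "ai"
    return "human"


# Hypothetical, tiny training "libraries".
human_docs = ["honestly the results floored us", "we argued over coffee until midnight"]
ai_docs = ["it is important to note the results",
           "furthermore it is important to leverage insights"]
human_model, ai_model = train(human_docs, ai_docs)

print(classify("it is important to leverage data", human_model, ai_model))         # ai
print(classify("we argued about the results over coffee", human_model, ai_model))  # human
```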

A Never-Ending Arms Race with Shaky Results

The thing is, the training never really stops. As AI writing tools get better at sounding human, the detectors have to be constantly retrained with new examples just to keep up. It's a perpetual cat-and-mouse game.

And the process is far from perfect. In fact, these tools can be notoriously unreliable. Even OpenAI shut down its own AI text classifier in 2023 because of its low accuracy. It correctly identified a measly 26% of AI-written text and, worse, it wrongly labeled human-written text as AI-generated 9% of the time.
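Those two percentages matter more than they first appear, because how trustworthy a flag is depends on how much AI text is in the pile to begin with. The sketch below applies Bayes' rule to OpenAI's published figures (26% of AI text caught, 9% of human text wrongly flagged). The 10% share of AI-written documents is a hypothetical assumption, not a measured rate.

```python
def flag_precision(tpr: float, fpr: float, ai_share: float) -> float:
    """Probability that a flagged document really is AI-written (Bayes' rule).

    tpr: share of AI-written documents the detector flags.
    fpr: share of human-written documents it wrongly flags.
    ai_share: share of all documents that are AI-written (the base rate).
    """
    true_flags = tpr * ai_share
    false_flags = fpr * (1 - ai_share)
    return true_flags / (true_flags + false_flags)


# Hypothetical pile of 1,000 documents, 10% of them AI-written:
# 0.26 * 100 = 26 correct flags, 0.09 * 900 = 81 false accusations.
print(round(flag_precision(0.26, 0.09, 0.10), 2))  # 0.24
```

In this scenario, roughly three out of every four flagged documents were actually written by a human.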

This constant back-and-forth reveals a fundamental weakness: detectors are always playing defense. They're trained on the AI of yesterday, which makes them less effective against the more sophisticated models of tomorrow.

Ultimately, knowing how these detectors are trained shows their strengths and their very real limitations. They are powerful pattern-recognition tools, but their accuracy is a moving target in a field that's changing by the day. To learn more, read our guide on how an AI detector works.

Digging Deeper: The Statistical Fingerprints of AI Writing

While robotic rhythm and predictable phrasing are dead giveaways, the really good detectors go much further. They start looking at the statistical guts of the text, analyzing things that aren't immediately obvious to the human eye. These tools are trained to spot the subtle, almost invisible clues that scream "machine."

The Vocabulary Problem: Token Distribution

One of the biggest tells is something called token distribution. A "token" is just a fancy word for a chunk of text the AI processes—it could be a whole word like "apple" or a part of a word like "un-" or "-ing." AI models, at their core, are prediction engines. When they build a sentence, they're constantly choosing the next most statistically likely token.

Think of it like this: if you ask someone to finish the sentence, "The sky is...", most people will say "blue." An AI does the same thing, but for every single word. It tends to pick the most common, safest, most predictable option.

A human writer might say, "The sky was a bruised, melancholy grey," or "The sky blazed a fiery orange." We make interesting, unexpected choices. AI, on the other hand, tends to stick to the statistical middle of the road. The result is writing that's grammatically flawless but feels bland and lacks a rich, diverse vocabulary. It’s like a painter who only uses primary colors because they’re the most popular.
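The "safest choice" behavior comes from how text is decoded. The sketch below uses a made-up probability distribution for the word after "The sky is" to contrast greedy decoding, which always takes the top word, with temperature sampling, which many text generators use in some form. Lower temperatures push the model even harder toward "blue"; higher ones give rarer words a better chance. The probabilities and function names are illustrative assumptions.

```python
import math
import random

# Hypothetical next-word probabilities after "The sky is".
NEXT_WORD = {"blue": 0.70, "clear": 0.15, "grey": 0.10, "blazing": 0.05}


def greedy(dist: dict[str, float]) -> str:
    """Always return the single most probable word."""
    return max(dist, key=dist.get)


def apply_temperature(dist: dict[str, float], temperature: float) -> dict[str, float]:
    """Rescale probabilities: T < 1 sharpens toward the top word, T > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    weights = {w: math.exp(math.log(p) / temperature) for w, p in dist.items()}
    total = sum(weights.values())
    return {w: v / total for w, v in weights.items()}


def sample(dist: dict[str, float], rng: random.Random) -> str:
    """Draw one word in proportion to its probability."""
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=1)[0]


print(greedy(NEXT_WORD))  # blue
cool = apply_temperature(NEXT_WORD, 0.5)
warm = apply_temperature(NEXT_WORD, 2.0)
print(round(cool["blue"], 2), round(warm["blue"], 2))  # 0.93 0.47
rng = random.Random(42)
print([sample(warm, rng) for _ in range(5)])
```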

The Missing "You": Stylometry and the Author's Voice

This is where it gets really interesting. The most advanced detectors use a technique called stylometry, which is basically the science of identifying an author's unique "voice." Think of it as a literary fingerprint.

Every single one of us has writing quirks. Maybe you love using em dashes for dramatic effect. Perhaps you have a habit of starting sentences with "And" or "But." You might use certain words far more often than others. All these little habits—your sentence lengths, your punctuation choices, even how often you use common words like "the" and "of"—combine to create a distinct, measurable style.

Stylometry software is trained to look for these patterns. It analyzes dozens of features to build a profile of the author, including:

- Vocabulary Richness: How broad is the vocabulary? Is there a lot of word repetition?

- Punctuation Habits: What's the ratio of commas to periods? Are semicolons or colons used?

- Function Word Usage: The frequency of small, connective words ("it," "is," "on," "with") is a surprisingly stable marker of a person's writing style.

- Sentence Complexity: Are sentences long and winding, or short and punchy?
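Here is a minimal sketch of a stylometric profile covering the four features in the list above. The short function-word list is a hypothetical sample (stylometry studies typically track much longer lists), and comparing function-word rates with cosine similarity is one common, simple choice, not the method any particular detector is known to use.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately short function-word list.
FUNCTION_WORDS = ("the", "of", "and", "to", "a", "in", "it", "is", "on", "with", "but")


def style_profile(text: str) -> dict:
    """Summarize vocabulary, punctuation, function words, and sentence length."""
    words = re.findall(r"[a-z']+", text.lower())
    n_words = len(words) or 1                       # avoid dividing by zero
    n_sentences = len(re.findall(r"[.!?]+", text)) or 1
    counts = Counter(words)
    return {
        "type_token_ratio": len(counts) / n_words,               # vocabulary richness
        "commas_per_sentence": text.count(",") / n_sentences,    # punctuation habit
        "function_word_rates": [counts[w] / n_words for w in FUNCTION_WORDS],
        "words_per_sentence": len(words) / n_sentences,          # sentence complexity
    }


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """1.0 means the same proportions; 0.0 means no overlap (or an empty vector)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


known = style_profile("But it is on the shelf, and the key is with it. I think.")
candidate = style_profile("The report is on the desk, and it is late.")
print(cosine_similarity(known["function_word_rates"], candidate["function_word_rates"]))
```

The numeric features sit on very different scales, so a real system would typically standardize them against a reference corpus before combining them.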

AI-generated text often fails this test. Because it was trained on a massive, diverse soup of internet text from millions of different people, it doesn't have a single voice. It has a smoothed-out, averaged, generic style that belongs to everyone and no one.

AI detection isn't just about finding robotic patterns; it's also about noticing the absence of human ones. The lack of a unique style, personal idioms, and even the occasional beautiful imperfection can be as telling as a predictable word choice.

When a stylometry tool analyzes AI text, it's looking for a consistent authorial fingerprint. When it finds a surface that’s perfectly smooth and featureless, it raises a big red flag. This combination of vocabulary analysis and the search for a unique voice allows modern detectors to catch AI content with a lot more sophistication.

The High Stakes of False Positives

Think about a security system that constantly flags you as an intruder in your own home. That’s exactly what happens when AI detectors produce a “false positive”—they accuse legitimate, human-written text of being generated by a machine. This isn't just a rare hiccup; it's a deep-seated flaw in the technology with very real consequences.

These tools are not impartial judges of truth. A human writer who favors simple, direct language can be flagged as AI, while a carefully prompted and edited AI draft might slide by undetected. Worse yet, the very patterns these systems are trained to find, like predictable sentence structures, are often present in the writing of non-native English speakers, creating a serious bias.

The stakes here are incredibly high. A student could suddenly face an academic dishonesty hearing, or a freelance writer could lose a client, all based on the faulty verdict of an algorithm. It fosters a weird, stressful environment where people might even avoid writing clearly just to avoid being misjudged by a machine.

The Problem of Unreliable Evidence

At the heart of the matter is how AI detection scores are used. They are often treated as concrete proof when, in reality, they are nothing more than a statistical guess. Time and again, research has shown that even the most popular detectors have significant error rates.

A landmark 2023 survey from MIT researchers delivered a blunt warning on this very topic. They concluded that institutions should absolutely not rely on these tools for making high-stakes decisions, pointing out that the systems are easily tricked and produce far too many false positives. You can discover more about the challenges of LLM-generated text detection and see for yourself why experts are urging caution.

The reality is that no AI detector is foolproof. The technology is in a constant state of catch-up, and its results should always be viewed as a suggestion, not a verdict. A high AI-generated score is a signal to look closer, not a reason to jump to conclusions.

This fundamental unreliability is why understanding what AI detectors look for is so crucial. When you know their methods, you can also see their limitations. To explore this further, you might be interested in our guide on whether AI detectors are accurate. The evidence is clear: for now, human judgment remains the most important tool for assessing the authenticity of any piece of writing.

Writing Authentic Content in the Age of AI

Forget trying to "beat" the algorithms. The best approach has always been to write for people, not for machines, and that hasn't changed. The goal isn't to trick a detector; it's to build a genuine connection with a human reader.

Think of AI as a brainstorming partner or an assistant for creating a rough outline. It can get the ball rolling, but it shouldn't be the one writing the final piece.

The real work starts once you have that first draft. Now it’s time to inject your own perspective, your unique voice, and your personal experiences. An AI can’t tap into your memories, share a relevant story from your past, or capture the specific emotional tone you want to set. Weaving these human elements into the text is the quickest way to make it truly yours.

This means you have to get your hands dirty with editing. Never just accept what the AI gives you. Push back on it. Break up the predictable sentence patterns by mixing short, punchy statements with longer, more detailed ones to create a rhythm that feels natural to read.

Adding Your Human Touch

Take a hard look at the vocabulary, too. AI models tend to play it safe, often falling back on generic, overused words. Ditch phrases like "it is important to note" for something more direct. Find those common AI-favored words and swap them out for more vivid, precise language that actually sounds like you.

The ultimate goal is to move beyond simply conveying information and instead craft a narrative. Adding a unique point of view, questioning assumptions, and ensuring the final text has a natural, conversational flow are what make content valuable and credible.

As AI becomes a standard part of the content creation toolkit, it’s worth getting familiar with the different AI tools for freelancers and understanding how using them can shape the perception of your work. Using them responsibly is everything.

Here are a few practical steps you can take to humanize any piece of text:

- Fact-Check Everything: AI models are notorious for "hallucinating"—in other words, making things up. You have to verify every single statistic, claim, and source before hitting publish. This isn't just about quality; it's about building trust.

- Inject Personal Stories: Share a short, relevant anecdote. Nothing adds a layer of personality and authenticity faster than a quick story that only you can tell.

- Read It Aloud: Your ears will catch what your eyes miss. Listen for clunky phrasing, awkward sentences, or a monotonous tone. If it sounds robotic when you say it, it will definitely feel robotic when someone reads it.

In the end, these steps do a lot more than just help your work pass an AI detector. They make your writing more engaging, more trustworthy, and genuinely more valuable to your readers.

Got Questions About AI Detection? We've Got Answers

You’ve got the basics down, but when the rubber meets the road, specific questions always pop up. Let's dig into some of the most common ones to get a real-world sense of how these tools operate.

Can Detectors Pinpoint Which AI Model I Used?

It's a common claim, but the reality is messy. While some tools might be trained on outputs from models like GPT-4, telling you exactly which AI wrote your text is more of a statistical guess than a certainty.

As these language models get more advanced, their unique "fingerprints" start to blur. They all begin to sound more alike, making it incredibly difficult for any detector to definitively point a finger at one specific source.

Does a Quick Paraphrase Fool AI Detectors?

Not anymore. If you're just running AI text through a basic word-swapper, you're not going to get very far. Most modern detectors are smart enough to look past simple vocabulary changes.

They’re analyzing deeper patterns—things like sentence rhythm, predictability, and the overall structural flow. A simple paraphrasing tool often leaves those core AI giveaways completely intact.

To truly humanize a piece of text, you have to do more than just swap out a few words. It’s about fundamentally changing the rhythm. You need to vary your sentence lengths, add a genuine voice, and break up that perfectly logical, almost sterile, flow that AI is so good at producing.
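Why does a word-swapper leave the giveaways intact? A one-for-one synonym swap changes vocabulary but not the number of words per sentence, so rhythm-based measures come out identical. The sketch below runs the AI-style passage from earlier in this article through a hypothetical five-entry synonym table standing in for a basic paraphrasing tool.

```python
import re

# Hypothetical synonym table standing in for a basic paraphrasing tool.
SYNONYMS = {
    "analyzed": "examined",
    "extensive": "large",
    "diligently": "hard",
    "eventually": "ultimately",
    "accumulated": "collected",
}


def word_swap(text: str) -> str:
    """Replace whole words found in SYNONYMS; everything else is left alone."""
    return re.sub(r"[A-Za-z]+", lambda m: SYNONYMS.get(m.group(0), m.group(0)), text)


def sentence_lengths(text: str) -> list[int]:
    """Words per sentence, splitting after ., !, or ? followed by whitespace."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [len(s.split()) for s in sentences if s]


ai = ("The team analyzed all the extensive data. They worked diligently on the "
      "project for many months. The initial hypothesis was eventually proven "
      "correct. Their conclusion was based on the accumulated evidence.")
swapped = word_swap(ai)
print(swapped)
print(sentence_lengths(ai), sentence_lengths(swapped))  # [7, 9, 7, 8] [7, 9, 7, 8]
```

The words changed, but the sentence-length profile, and with it the flat rhythm, did not.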

Are AI Detectors Biased Against Certain People?

Yes, unfortunately, and this is one of their biggest problems. Research has consistently shown that these tools are more likely to flag writing from non-native English speakers as AI-generated.

This happens because some writing from non-native speakers might have simpler sentence structures or a more limited vocabulary—patterns that detectors mistakenly associate with machines. It’s a serious flaw and a huge red flag for anyone thinking about using these tools for important decisions, like grading a student or evaluating a job applicant.

Ready to turn your AI drafts into content that sounds genuinely human? Natural Write is a free, privacy-first tool designed to humanize your text in a single click. Give it a try and start writing with confidence at https://naturalwrite.com.