Is This Written by AI? How to Spot the Signs

November 5, 2025

Ever get that feeling something you're reading is just… off? You're not the only one. The question "was this written by AI?" has gone from a niche tech concern to a daily reality. Advanced models are now so good that it's genuinely hard to tell their work apart from ours.

Why Everyone's Asking About AI Content

This isn't just a matter of curiosity. It's about a fundamental need for authenticity in a world absolutely flooded with information. From teachers worried about academic integrity to marketers trying to maintain a genuine brand voice, the stakes are real.

And this isn't some far-off problem. In 2025, AI-generated writing is everywhere. Some reports suggest that nearly 50% of new articles published online are created by AI, roughly on par with human output. This massive shift forces us to think hard about content quality and what human authorship even means anymore.

The New Digital Literacy

Learning to spot the tells of AI writing is no longer a skill just for tech geeks; it's essential for anyone who reads or creates content online. The challenge is that the old giveaways are gone. Today’s AI can mimic tone, personality, and structure with unnerving accuracy. For more on how we got here, see our explainer on what AI-generated content is.

This new reality means we have to do more than just read the words on the screen. We now have to weigh the source's credibility, question the depth of the insights, and look for those subtle human touches that machines still can't quite replicate.

The rise of strategies like Answer Engine Optimization (AEO)—which specifically formats content for AI platforms—only makes this skill more critical. Honing your critical eye is the best tool you have to make sure you can trust the information you depend on.

How to Use AI Detection Tools Wisely

AI detection tools feel like a silver bullet for a tricky question: "Is this written by a human?" You just paste the text, hit a button, and get a neat little score. But I've seen too many people treat that score as gospel, and that's a huge mistake.

These detectors aren't reading for meaning or intent. They're built to spot statistical patterns—things like word choice, sentence structure, and predictability. AI models often fall into very safe, uniform patterns that these tools are trained to catch. A piece of writing that’s a little too perfect or formal might get flagged, even if a human wrote every word.

First, Know Their Limits

The elephant in the room with these tools is their accuracy, or lack thereof. Even the best detectors reportedly top out at around 80% accuracy, which means they get roughly one in every five documents wrong. It gets a little ridiculous when you hear about them falsely flagging famous human-written texts like the US Constitution.

This problem gets even worse for non-native English speakers. I’ve seen data showing their writing can trigger false positive rates as high as 70%. Their natural writing patterns, often built on simpler and more predictable word choices, can accidentally mimic the very things detectors are trained to see as AI-generated.

A high 'AI-generated' score isn't proof. It's just a signal to dig deeper, not a verdict to hand down.

Relying on a single number is a recipe for false accusations and bad calls. The real value of these tools is as a first-pass filter: a way to flag text that needs a closer look from a real human. For a deeper dive on this, check out our guide, Are AI Detectors Accurate?

A More Practical Way to Use Detector Scores

Instead of treating a detector’s score as the final answer, think of it as a starting point for your own investigation. A low score might give you some confidence, but a high score just means it's time to put on your detective hat and start looking for other clues.

I’ve found it helpful to break down what the scores actually mean in practice. It helps you decide what to do next without jumping to conclusions.

Decoding AI Detector Scores

| AI Probability Score | What It Likely Means | Recommended Action |
|---|---|---|
| 0-30% | The writing shows human-like variation and complexity. | Probably safe to trust. A quick scan for other red flags is still a good habit, but it's likely human. |
| 31-70% | This is the gray zone. Could be formal human writing or edited AI text. | Time for a manual review. This score is a strong signal to look for other tell-tale signs of AI. |
| 71-100% | Strong statistical patterns match machine-generated text. | Doesn't confirm it's AI, but it's a major red flag. This requires a careful, in-depth manual analysis. |
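If you're triaging a stack of documents, it can help to write the table's bands down as a tiny helper so everyone on a team applies them the same way. Here is a minimal Python sketch. Several details are my own assumptions:

- the function name;
- the 0-100 input scale;
- how fractional scores that fall between bands are handled.

The cutoffs are this article's rules of thumb, not thresholds published by any detector vendor.

```python
def recommended_action(ai_score: float) -> str:
    """Map a detector's AI-probability score (0-100) to a next step.

    The bands mirror the article's table; they are rules of thumb,
    not thresholds published by any particular detector.
    """
    if not 0 <= ai_score <= 100:
        raise ValueError("ai_score must be between 0 and 100")
    if ai_score <= 30:
        # 0-30%: human-like variation; still glance for other red flags.
        return "likely human: quick scan for other red flags"
    if ai_score <= 70:
        # 31-70% (plus in-between values such as 30.5): the gray zone.
        return "gray zone: manual review"
    # 71-100%: a major red flag, but never proof on its own.
    return "red flag: careful, in-depth manual analysis"


for score in (12, 55, 88):
    print(score, recommended_action(score))
```

Even the top band returns a prompt for more review rather than a verdict. That matches the point above: a high score is a signal, not proof.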

Ultimately, that score is just one piece of data. It tells you something is unusual about the text, but it's up to you to figure out what that is.

Learning to Spot AI Writing With Your Own Eyes

AI detection tools are a good starting point, but the best detector you have is the one you’ve been training your whole life: your own intuition. Once you learn to spot the subtle giveaways of machine-generated text, you can trust your gut when a piece of writing just feels… off.

AI models are trained on massive datasets, which makes them incredible mimics. The problem is that no amount of training gives them genuine human experience. Their writing often feels hollow, missing the personal stories, emotional texture, and weird quirks that make our own writing feel real.

Think about it. An AI might write a perfectly structured post about the "best local coffee shops," but it won't mention the wobbly table by the window or the barista who remembers your order. It knows the facts, but it completely misses the feeling. That absence of a personal touch is a huge red flag.

Look for an Unnatural Polish

Let's be honest: human writing is beautifully imperfect. We use contractions, start sentences with "And" or "But," and bend the rules for effect. AI-generated text, on the other hand, often has a sterile perfection that just doesn't feel right.

Keep an eye out for these classic signs:

- Flawless Grammar and Punctuation: Good writing is the goal, of course. But AI text is often too perfect, lacking the natural rhythm and minor slip-ups a human might make.

- Overly Formal Tone: Is the writer using words like "henceforth" or "moreover" in a casual blog post? That can be a dead giveaway that a machine is trying too hard to sound authoritative.

- Consistent Sentence Structure: AI models often fall into repetitive patterns, churning out sentences of the same length and structure again and again.

The goal isn't to become a grammar detective. It's about noticing when writing feels so polished and predictable that it lacks a human soul.
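If you want to put a number on "consistent sentence structure," one rough proxy is how much sentence length varies across a passage (sometimes called burstiness). The Python sketch below is an illustration under stated assumptions, not how any commercial detector works. It uses a naive regex to split sentences, then reports the coefficient of variation of words per sentence (standard deviation divided by mean). Both choices are mine.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence.

    Naive split after '.', '!' or '?' followed by whitespace, so
    abbreviations such as 'Dr.' or 'e.g.' will be split incorrectly.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [len(s.split()) for s in sentences if s.strip()]


def length_variation(text: str) -> float:
    """Coefficient of variation (population stdev / mean) of sentence lengths.

    Lower values mean more uniform sentences. A rough heuristic only.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to say anything
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The tool is fast. The tool is cheap. The tool is simple."
varied = ("I tried it. Honestly, the first week was rough because nothing "
          "synced and I lost a whole draft. Then it clicked.")
print(round(length_variation(uniform), 2))  # 0.0
print(round(length_variation(varied), 2))   # 0.81
```

A low value only tells you the sentences are uniform. Plenty of careful human writers produce even, regular prose, so treat this number like a detector score: a reason to read more closely.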

This is something editors and academics are getting really good at spotting. In peer reviews, for example, experts watch for red flags like repetitive phrasing, over-explaining simple concepts, and generic language that feels like filler. An AI might restate a point multiple times or use empty phrases that signal it doesn't truly understand the topic. If you want to dive deeper, you can read about AI detection red flags that experts are watching for.

Spotting Repetitive Language and Vague Ideas

Another major tell is when the AI gets stuck in a loop. It might introduce a concept, explain it, and then re-introduce the same exact idea a few paragraphs later using slightly different words. This often happens because the model doesn't actually comprehend the topic; it's just fulfilling its prompt by hitting the same key points over and over.

AI also really struggles with specifics. Be wary of vague, generic statements that sound nice but offer zero real insight. A human expert gives you concrete examples, data, and personal observations. An AI, however, will just say something like, "It's important to consider all the factors before making a decision," without ever telling you what those factors are.

This mix of repetition and vagueness creates content that looks solid on the surface but is informationally empty. And that’s a classic sign you're reading something written by a machine.
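Verbatim looping is the easiest part of this to check mechanically. A crude approach is to count three-word sequences (trigrams) that show up more than once. This Python sketch assumes trigrams and a threshold of two occurrences, both arbitrary choices on my part. It only catches near-verbatim repeats. Restatements in "slightly different words," and vague filler, still need a human reader.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> list[tuple[str, int]]:
    """Word n-grams that occur at least `min_count` times, most frequent first.

    Text is lowercased and only ASCII letters and apostrophes are kept,
    so punctuation and accented characters are dropped.
    """
    words = re.findall(r"[a-z']+", text.lower())
    ngrams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    counts = Counter(ngrams)
    return [(phrase, c) for phrase, c in counts.most_common() if c >= min_count]


sample = ("It is important to consider all the factors. Teams should plan "
          "carefully. Again, it is important to consider all the factors "
          "before deciding.")
print(repeated_phrases(sample)[0])  # ('it is important', 2)
```

A single repeated phrase is normal in any writing. What matters is a whole cluster of overlapping repeats, like the six trigrams this sample produces, which signals a restated sentence.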

Investigating Content Beyond the Words

So, the writing feels human. But is it real?

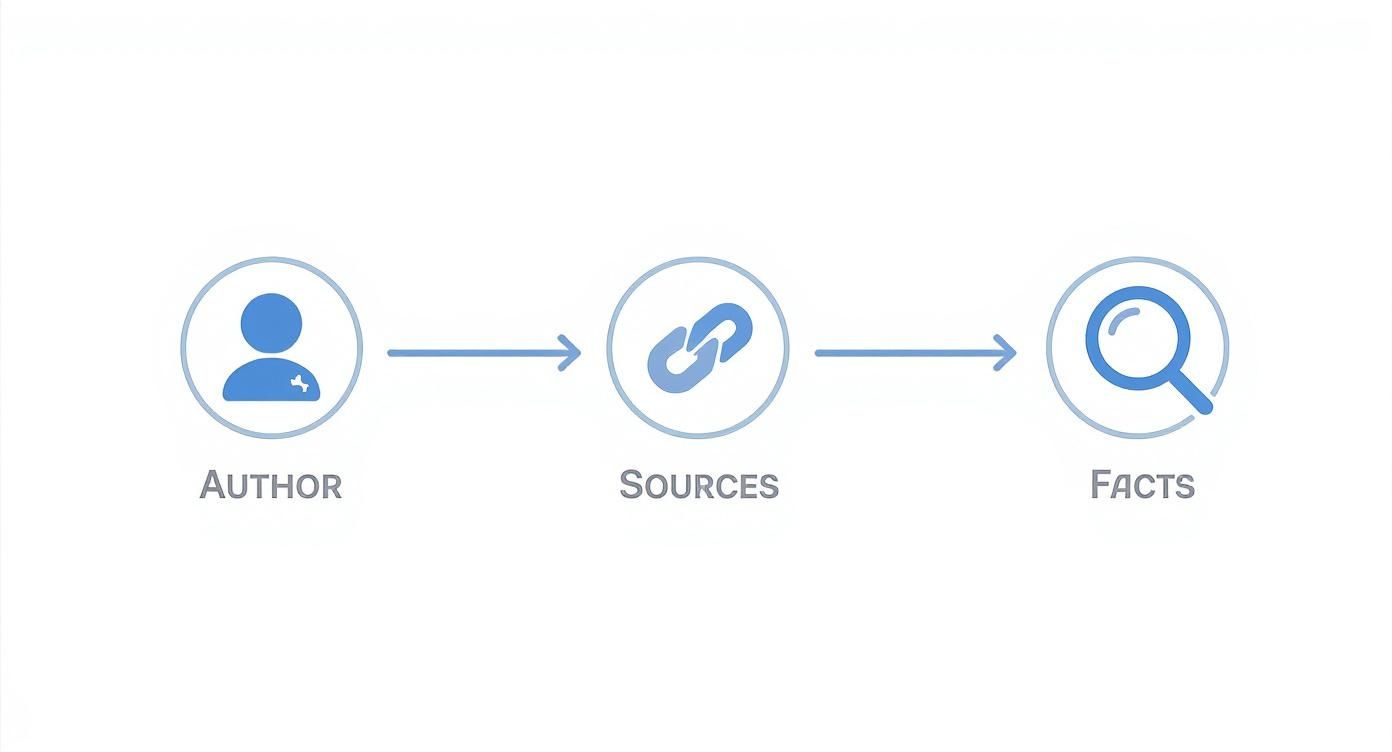

Authenticity runs deeper than just tone and flow. It's about whether the information is verifiable, credible, and comes from a legitimate source. This is where AI often gets tripped up, making it a crucial weak spot to check.

AI models are known for something called “AI hallucinations.” This is a fancy way of saying they confidently make things up. An AI might invent statistics, create quotes from non-existent experts, or even cite academic papers that were never written. It’s a sneaky kind of misinformation because it looks completely plausible on the surface.

A human expert might get a detail wrong, but they won’t invent a source out of thin air. An AI has no such conscience.

Putting the Facts on Trial

To catch these fabrications, you have to do a little detective work. Don't just read the words—investigate the world they describe. It’s a simple process of verification that only takes a few seconds.

For example, if an article claims a historical figure said something profound, a quick Google search should back that up. If it references a "2023 Stanford study," that study should be easy to find on the university's website or on Google Scholar.

When a source is vague or just plain impossible to locate, that’s a massive red flag.

The most reliable way to spot a sophisticated fake is to check its homework. If the sources are ghostly and the facts are phantoms, you’re likely dealing with AI-generated text.

This verification process is a lot like checking for other content integrity issues. In fact, our guide on how to check for plagiarism touches on some of the same principles of source verification.

Evaluating Author and Source Credibility

Finally, zoom out. Look beyond the article itself to the author and the platform it’s published on.

Does the author have a digital footprint? A real person usually has a LinkedIn profile, a professional bio, or a history of writing on the topic. An AI-generated author persona, on the other hand, often has a generic name and a background that evaporates under scrutiny.

Think about the publication, too. Is it a reputable site known for expert content, or a brand-new, anonymous blog with no clear editorial standards? A lack of transparency about who’s behind the content is a strong signal that it might not be trustworthy. This final step is a powerful piece of the puzzle.

Developing Your Content Authenticity Workflow

When it comes to figuring out "is this written by AI?", the most reliable answer won't come from a single tool. It comes from building a smart, repeatable process—a habit of informed skepticism that blends technology with your own critical eye.

Start with a quick scan using an AI detector. Think of the score it gives you as a preliminary flag, not the final verdict. It’s just the first data point.

If the score is high or your gut just tells you something is off, it's time to dig in with a manual review. This is where you look for the classic tells of AI: unnaturally perfect grammar, a noticeable lack of personal stories or anecdotes, and suspiciously repetitive phrasing. You're trying to feel out if the text has a human touch or if it just feels sterile and cranked out by a machine.

While your main focus here is detection, it's also helpful to understand how this content performs in the wild. Resources like a ChatGPT Rank Tracker Free Tool can give you a peek into the broader landscape of AI content online.

That leaves the final, and most crucial, step: checking the author, their sources, and the actual facts.

Honestly, this last part—investigating the substance and credibility of the content—is what usually gives you the clearest answer. By combining these three stages, you create a solid method for confidently figuring out if what you're reading is the real deal.

Got Questions About AI Content? We've Got Answers

Even when you're armed with the best tools and a sharp eye, things can get murky. When the line between human and machine writing starts to blur, it’s only natural to have a few questions. Here are some of the most common ones I hear.

Can I Really Trust a Free AI Detector?

Think of a free tool as a first pass—a quick gut check. They’re a great way to flag content that might need a closer look, but you shouldn't treat their verdict as the final word.

Why? They can have higher false positive rates and are often less sophisticated than their paid counterparts. So, use them as a signal, not as definitive proof.

What if I Think It's AI but Can't Prove It?

Honestly, this happens all the time. When you hit a wall, shift your focus from who wrote it to how well it's written.

Is the text vague and full of generic fluff? Does it lack credible sources or, worse, contain factual errors? If so, the content is untrustworthy, regardless of whether a human or a machine wrote it. Your judgment should always come down to quality first.

The goal isn't just to play "spot the AI." It's about making sure the information you use and share is solid, reliable, and authentic.

Are AI Detectors Biased?

Yes, and this is a huge deal. Some studies have shown that certain detectors are more likely to flag text from non-native English speakers as AI-generated.

This is a serious flaw. It's also a powerful reminder of why human judgment is non-negotiable. You have to consider the context, or you risk making an unfair call.

Will It Always Be This Easy to Spot AI Writing?

Definitely not. The game is constantly changing. As AI models get smarter and more sophisticated, the dead giveaways we look for today will become less and less common.

That’s why the best long-term strategy isn't about finding one perfect tool. It’s about building a solid process that combines detector scans, careful reading, and good old-fashioned fact-checking. That’s how you’ll stay ahead.

Ready to turn your AI drafts into natural, human-sounding content? Natural Write uses a one-click process to humanize your text, helping it bypass AI detectors while keeping your core ideas intact. Give it a try for free today.