Does Undetectable AI Work? A Practical Guide

September 24, 2025

So, does undetectable AI actually work? Yes, in many cases it does, but it’s not magic. Its effectiveness hinges on how well the AI content is “humanized.” The whole point is to mimic the subtle, often imperfect patterns of human writing so it can slip past detectors built to spot robotic text.

The High-Stakes Game of AI Content Creation

There’s a fascinating tug-of-war happening in the world of digital content. On one side, you have AI generators churning out articles, essays, and marketing copy at incredible speed. On the other, you have AI detection tools designed to sniff out that machine-made text.

This creates a high-stakes “cat-and-mouse game” for anyone using AI to scale up their work. For students, marketers, and writers, the challenge is pretty clear: how do you use the power of AI without getting flagged? The goal isn't to deceive, but to refine an AI-generated draft into something that’s genuinely valuable and reads like a person wrote it. This is exactly where "undetectable AI" comes in.

Balancing Efficiency with Authenticity

At its core, this isn't just about fooling a machine. It's about preserving the human touch that makes content engaging and trustworthy. Raw AI output often lacks the nuance, rhythm, and slight imperfections that make communication feel authentic. That’s why a simple copy-paste approach almost always falls flat.

Effective undetectable AI tools, like Natural Write, get to work on the key giveaways of machine writing:

- Predictable sentence structures: They mix things up, varying sentence length and complexity.

- Overly formal tone: They swap out vocabulary and adjust phrasing to sound more natural and conversational.

- Lack of unique voice: They help inject the text with a more distinct, human-like personality.

The real objective is to elevate AI-assisted content to a level where it not only bypasses detection but also genuinely connects with a human audience. Success means producing work that is both efficient to create and authentic to read.

Ultimately, understanding how undetectable AI works is about recognizing this balance. It’s about using technology not just to create text, but to polish it into something that feels real, provides value, and meets the standards of both algorithms and human readers.

To sum up, here’s a quick breakdown of what you can realistically expect from undetectable AI tools and where their limits lie.

Undetectable AI Performance at a Glance

| Aspect | Summary |
|---|---|
| Bypassing Detection | Highly effective. By rewriting text to vary sentence structure, word choice, and flow, these tools successfully mimic human writing patterns, often achieving scores of 90-99% human on major detectors. |
| Preserving Meaning | Mostly reliable. The core message of the original text is usually kept intact, but complex or highly technical information may require a quick manual review to ensure complete accuracy. |
| Adding Voice & Tone | Good, but needs guidance. Humanizers can shift the tone to be more casual, professional, or engaging. However, they can’t invent your unique voice. That final touch of personality still comes from you. |
| Limitations | Not a substitute for fact-checking. An undetectable AI tool won’t verify the accuracy of the original AI-generated text. It’s a polisher, not a fact-checker. You’re still responsible for the content’s truthfulness. |
| Best Use Case | Refining, not creating. These tools shine when used to transform a solid AI-generated draft into a polished, human-sounding final piece. They are the final 10% of the work, not the first 90%. |

Think of these tools as a finishing step. They’re fantastic for bridging the gap between a robotic first draft and a final piece that connects with people, but they work best when you’re still in the driver’s seat.

Inside the AI Detection Arms Race

To really get why "undetectable AI" is even a thing, you have to look at the battlefield it’s fighting on. It's a constant chess match between the AI that writes content and the AI that tries to catch it. Each one is always trying to guess the other's next move.

Think of AI detectors as digital detectives. They’re trained to hunt for specific clues that just scream "robot." These giveaways usually include things like unnaturally perfect grammar, super predictable sentence patterns, and a consistent, almost sterile tone from start to finish. We actually dive deeper into what they're looking for in our guide on how AI detectors work.

This back-and-forth has created a massive industry. In fact, the AI content detection market is expected to grow at an annual rate of about 24%. But here’s the thing: these tools are far from perfect. Studies have shown they can incorrectly flag human writing anywhere from 10% to 28% of the time, and they miss about 20% of AI-generated content completely. You can dig into more of the data on detector accuracy in this 2025 guide on undetectable AI tools.
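To see what those error rates mean in practice, here is a back-of-the-envelope sketch using Bayes' rule. It takes the false positive range (10% to 28%) and the miss rate (about 20%) quoted above and asks: if a detector flags a text, how likely is it that the text really came from an AI? The function name and the 50/50 split between human and AI texts are illustrative assumptions, not figures from any study.

```python
def flagged_is_ai_probability(prevalence, false_positive_rate, miss_rate):
    """Chance that a flagged text is really AI-generated (Bayes' rule).

    prevalence: share of all checked texts that are AI-generated (assumed).
    false_positive_rate: share of human texts wrongly flagged as AI.
    miss_rate: share of AI texts the detector fails to flag.
    """
    true_positive_rate = 1.0 - miss_rate
    flagged_ai = prevalence * true_positive_rate
    flagged_human = (1.0 - prevalence) * false_positive_rate
    return flagged_ai / (flagged_ai + flagged_human)


# Assumption: half of the texts being checked are AI-generated.
best_case = flagged_is_ai_probability(0.5, 0.10, 0.20)   # about 0.89
worst_case = flagged_is_ai_probability(0.5, 0.28, 0.20)  # about 0.74

print(f"Flag is correct: {worst_case:.0%} to {best_case:.0%} of the time")
```

Under that assumed split, somewhere between roughly one in ten and one in four flags would land on a human writer. If fewer of the checked texts are actually AI-generated, the share of wrong flags goes up.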

How Humanizers Outsmart the Detectives

This is where AI humanizers step in. They aren't just glorified paraphrasers; they're built to think like the detectors they're trying to beat. They know exactly which robotic traits to spot and how to strategically break them down.

A humanizer’s job is to introduce the beautiful, subtle messiness that makes human writing feel, well, human. It intentionally messes with sentence length, swaps out common AI words for ones with more nuance, and tweaks the rhythm so the text doesn't feel so monotonous.

The real strategy here isn’t about deception. It’s about transformation. It’s about taking a logically sound but soulless AI draft and breathing life into it, making it far more authentic and engaging for a real person to read.

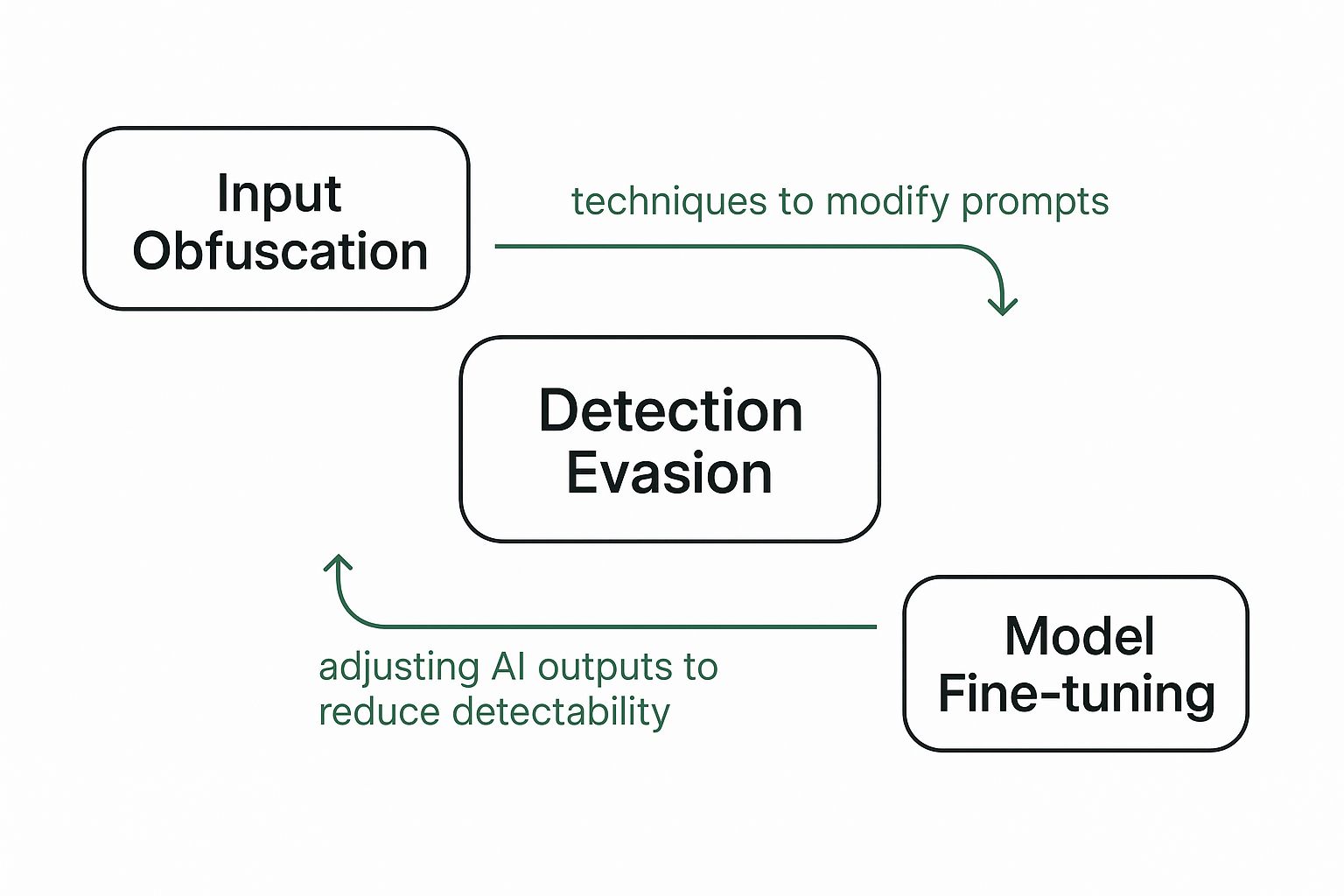

This image breaks down the core ways AI content is tweaked to fly under the radar.

As you can see, it’s a mix of adjusting the initial prompts, fine-tuning the AI model itself, and using specific evasion techniques—all working together to produce content that detectors will happily ignore.

The Two Key Metrics in Detection

To win this game, humanizers have to disrupt two key things that detectors are obsessed with:

- Perplexity: This measures how predictable a string of words is to a language model; the more predictable the text, the lower the perplexity. AI text often has low perplexity because it leans toward the most statistically likely next word, which sounds robotic. Humanizers dial up the perplexity by using less common words and more creative sentence structures.

- Burstiness: This is all about the variation in sentence length. Humans write in bursts. We’ll write a few short, punchy sentences and then follow up with a longer, more complex one. AI, on the other hand, tends to keep things uniform. Humanizers introduce that natural variance, breaking up the monotonous flow that detectors are trained to flag.

By zeroing in on these two factors, a good AI humanizer can make a piece of text much harder to tell apart, statistically, from something a person would have written.
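Both metrics can be approximated in a few lines. The sketch below is a toy illustration, not how any particular detector works: real tools typically score perplexity with a large language model, whereas this version assumes a simple word-frequency (unigram) model with add-one smoothing, built from a reference text you supply. Burstiness is approximated here as the standard deviation of sentence lengths divided by their mean. All function names are hypothetical.

```python
import math
import re
import statistics
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference):
    """Perplexity of `text` under a word-frequency model of `reference`.

    Toy stand-in for a real language model: add-one smoothing, with a
    single shared slot for words never seen in the reference.
    """
    ref_counts = Counter(tokenize(reference))
    vocab_size = len(ref_counts) + 1  # +1 for the unseen-word slot
    total = sum(ref_counts.values())
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no word tokens")
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Variation in sentence length: standard deviation divided by mean."""
    sentences = re.split(r"[.!?]+", text)
    lengths = [n for n in (len(tokenize(s)) for s in sentences) if n > 0]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "Stop. The dog ran across the wide green field today. Why?"
print(burstiness(uniform), burstiness(varied))
```

Text made of same-length sentences scores zero on this burstiness measure, while a mix of very short and long sentences scores higher. Likewise, text built from words that are common in the reference gets a lower perplexity than text full of words the reference never used.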

How AI Humanizers Make Content Sound Authentic

So, how does an AI humanizer actually work its magic? It’s less about trickery and more about smart editing. Think of it as a tool that reverse-engineers the robotic habits of AI-generated text, much like a seasoned editor who knows exactly what makes writing feel stiff and predictable.

A lot of creators use AI for content creation to get a first draft on the page. It's fast. But the raw output often lacks the natural rhythm and flow that actually connects with a reader. This is where a humanizer, like Natural Write, steps in to close that gap and make the final piece feel polished and genuine.

The whole process boils down to a few key techniques that work together to transform the text.

Restructuring for a Natural Flow

The first thing a humanizer tackles is sentence structure. AI models tend to fall into a rut, churning out sentences with similar lengths and patterns. It creates a monotonous, singsong rhythm that AI detectors can spot from a mile away.

A good humanizer acts like a composer, varying the cadence of your writing. It’ll shorten some sentences to make a point and lengthen others to add detail, creating a much more dynamic and interesting read. That intentional variation is a hallmark of human writing.

Elevating Word Choice

AI writers also lean on a very predictable vocabulary. They’re trained to favor statistically likely words, which often makes the writing feel generic and totally devoid of personality. A humanizer gets in there and performs intelligent synonym swaps.

But this is way more than a simple thesaurus. It considers context and nuance to replace common AI words with more specific, evocative alternatives. This little trick alone enriches the vocabulary and helps the text develop a more distinct voice.

Key Takeaway: The best humanizers don't just rephrase text; they re-engineer it at a structural and lexical level to introduce the subtle imperfections and variations that characterize human expression.

Mastering Tonal Adjustments

Finally, a humanizer refines the tone. Raw AI content can sound way too formal or sterile, lacking the warmth needed to connect with an audience. By tweaking phrasing and word choice, these tools can shift the text to be more conversational, professional, or persuasive—whatever you need.

This three-pronged approach is what makes the technology work.

- Sentence Variation: It breaks up predictable AI patterns by mixing short, direct sentences with longer, more descriptive ones.

- Lexical Diversity: It introduces a wider range of words to sidestep the repetitive choices common in AI text.

- Rhythmic Pacing: It adjusts the flow of paragraphs to create a reading experience that feels less robotic and more natural.

By making these changes, an AI text humanizer can seriously improve the quality of machine-generated content. The goal isn't just to bypass detectors, but to make the writing genuinely resonate with readers. It’s less about hiding the AI's involvement and more about elevating its output to meet human standards of quality and authenticity.
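Lexical diversity, one of the techniques listed above, is easy to put a rough number on. A minimal sketch, assuming the simplest common measure, the type-token ratio (unique words divided by total words), is shown below. The function name is hypothetical, and this is only a quick way to compare a draft before and after editing, not a description of how any humanizer scores text.

```python
import re


def type_token_ratio(text):
    """Share of distinct words in the text: unique words / total words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)


repetitive = "good good good good"
diverse = "swift brown fox jumps"
print(type_token_ratio(repetitive), type_token_ratio(diverse))
```

A ratio close to 1.0 means almost every word is different, while a low ratio points to heavy repetition. The measure tends to drop as texts get longer, so it is best used to compare passages of similar length.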

Putting AI Content Detectors to the Test

So, does undetectable AI actually work? To answer that, you have to look at the other side of the coin: the AI detectors themselves.

The truth is, they're all over the map. Some are incredibly easy to fool, while others are surprisingly good at sniffing out machine-generated text. It’s a confusing and inconsistent space for anyone trying to create content.

Why Detectors Are So Inconsistent

Independent studies have shown, time and again, that a detector’s underlying tech is what makes or breaks it. The tools that rely on a single, isolated algorithm are usually the least reliable. They’re trained on a narrow definition of what "AI writing" looks like, which causes two huge problems. They often miss AI-generated content entirely, and even more frustratingly, they flag human writing as AI. We’ve all seen those dreaded "false positives."

This inconsistency is a real headache. You can run the exact same article through three different checkers and get three wildly different scores. It leaves you wondering where you actually stand. These tools struggle the most with content that's been heavily edited—where a human has polished up an AI-generated first draft.

The Secret to Better Detection

The most effective detectors don't put all their eggs in one basket. Instead of relying on a single method, they use multiple algorithms that cross-reference each other to come to a more balanced conclusion. Think of it as getting a second, third, and fourth opinion all at once. This multi-layered approach cuts down on errors and gives you a much more reliable verdict on where the text came from.

For example, some of the best systems use a federated or consensus-based model, which pulls in results from several different detection engines. This approach has been put through its paces in a number of independent tests. Testing reported by ZDNet and studies indexed on PubMed Central found that one of the top tools, Undetectable AI, consistently hits a cumulative accuracy score between 85% and 90%. Why? Because it synthesizes results instead of trusting just one.
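The consensus idea can be sketched in a few lines. The example below is a simplified illustration, not the method of any named tool: it assumes each detector returns a score between 0 and 1 for "likely AI," then combines them with a median and a majority vote. The detector names, scores, and the 0.5 threshold are all made up for the example.

```python
from statistics import median


def consensus_verdict(scores, threshold=0.5):
    """Combine several detectors' AI-likelihood scores into one verdict.

    scores: dict mapping a detector name to a score in [0, 1].
    threshold: score at or above which a detector counts as voting "AI".
    """
    if not scores:
        raise ValueError("need at least one detector score")
    votes = sum(1 for s in scores.values() if s >= threshold)
    return {
        "median_score": median(scores.values()),
        "votes_for_ai": votes,
        # Flag only when a strict majority of detectors agree.
        "flagged": votes > len(scores) / 2,
    }


# Hypothetical scores from three detectors for the same article.
example_scores = {"detector_a": 0.92, "detector_b": 0.35, "detector_c": 0.41}
result = consensus_verdict(example_scores)
print(result)
```

Here a single detector is very confident the text is AI-written, but the other two disagree, so the combined verdict does not flag it. That is the practical benefit of a consensus: one outlier score cannot decide the outcome on its own.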

The table below breaks down how different approaches stack up, based on the findings from these kinds of studies.

AI Detector Performance Comparison

| Detection Model Type | Typical Accuracy Range | Key Weakness |
|---|---|---|
| Single Algorithm Models | 50% - 70% | High rate of false positives; easily fooled by simple edits. |
| Multi-Algorithm Models | 75% - 85% | Better, but can still be inconsistent with heavily mixed content. |
| Consensus-Based Models | 85% - 95% | Far more reliable, but no system is 100% foolproof. |

As you can see, the more perspectives a detector has, the harder it is to trick.

The key takeaway is simple: a detector’s strength comes from its ability to analyze text from multiple angles at once. A single viewpoint is just too easy to bypass. A consensus-based model is a much tougher nut to crack.

This is why the question "do AI detectors work?" doesn't have a simple yes or no answer. Their effectiveness is all in their design.

Ultimately, no detector is perfect. But the ones using advanced, multi-algorithm systems give you a much more realistic assessment. They’re better equipped to handle the way modern content is actually made—often with a mix of human and machine collaboration. And that makes them a far more trustworthy benchmark for testing whether your own humanized content truly flies under the radar.

Why Human-Like Quality Is the Real Goal

The whole conversation around "does undetectable AI work" often gets stuck on a technical cat-and-mouse game. While bypassing a detector seems like the point, it misses something much bigger. The real goal isn't just to trick a machine; it's to create content that genuinely connects with a human being.

Think about it from Google's side for a second. Its entire Helpful Content System is built to reward stuff that gives people a satisfying experience. It's looking for content that feels authentic and insightful, not robotic or spammy.

That simple fact shifts the entire focus from just evading detection to a much more valuable goal: quality.

Aligning with People and Algorithms

The best brands and publishers already get this. They aren't losing sleep over whether a detector flags their content. What they really care about is whether their audience trusts it. If your content feels fake or machine-generated, you erode that trust—and that’s way more damaging than a bad AI detection score.

The real win isn't being "undetectable" by a machine but being indispensable to a human. When your content is valuable, trustworthy, and compelling, it works for both audiences and algorithms.

This is why human-like quality is the true north star. When you use a tool like Natural Write to polish AI-generated text, you’re not just covering the AI’s tracks. You’re elevating the content to meet a human standard of excellence by improving its clarity, readability, and natural flow.

This approach matters across the board. AI's role in content now touches everything from marketing to academic integrity, so human-like output has become a priority in many fields. While a university might use a detector to check submissions for AI-generated text, Google’s algorithm rewards content that reads naturally. This has created a huge need for tools that can help preserve search rankings by ensuring that quality.

The Strategic Edge of Humanization

When you focus on quality, you gain a clear strategic advantage. Instead of constantly worrying about the next detector update, you can put your energy into creating assets that build real authority and actually resonate with the people you’re trying to reach.

Here’s what that looks like in practice:

- Better Engagement: People spend more time with content that flows well and speaks to them directly.

- More Trust and Credibility: Writing that sounds authentic builds a much stronger connection with your audience.

- Stronger SEO Performance: When you align with Google's "helpful content" principles, you set yourself up for better long-term visibility.

To make content truly indistinguishable from human writing, it helps to understand the principles of effective AI search engine optimization. At the end of the day, the question shifts from "does undetectable AI work?" to "does my content provide real value?"

When you can confidently answer "yes" to that second question, the first one starts to feel a lot less important.

Got Questions About Undetectable AI?

Even with a solid grasp of how undetectable AI works, a few common questions always seem to pop up. Let's clear the air and tackle the practical and ethical side of using these powerful new tools.

Is It Actually Legal and Ethical to Use an AI Humanizer?

Using an AI humanizer is generally legal. Think of it as a super-advanced writing assistant, not much different from a grammar checker or a professional editor.

The ethics, though? That comes down to how you use it.

It’s one thing to use a humanizer to polish an AI-assisted blog post or sharpen up your marketing copy to make it more engaging. That’s widely considered ethical. But the line gets crossed when these tools are used for academic dishonesty—like passing off an AI-generated essay as your own in a class that forbids it. The tool itself isn't the problem; it's the intent behind its use.

Will Google Flag My Humanized AI Content?

Here's the thing about Google: it cares less about who (or what) wrote the content and more about whether it's actually helpful and reliable. Their whole system is built to penalize spammy, low-effort articles that don't answer a user's question.

If you use an AI humanizer to produce a well-written, accurate, and genuinely valuable article that people want to read, you're unlikely to get penalized. In fact, well-humanized text often aligns better with Google’s quality standards than the raw, robotic output from a language model.

At the end of the day, the golden rule of SEO hasn't changed: write for people, not for algorithms. If your humanized text does that well, Google is far more likely to reward it.

What’s Next for AI Content Detection?

The world of AI detection is definitely not standing still. We're starting to see more complex methods on the horizon, like AI watermarking, which embeds invisible signals into AI-generated text or images to make their origin easier to trace.

But as AI writers get better and better at mimicking human nuance, this cat-and-mouse game will just keep going. It's highly unlikely that any detection tool will ever hit 100% accuracy. This constant back-and-forth will probably shift the focus away from pure detection and more toward encouraging responsible AI use, with overall content quality becoming the metric that matters most.

Does an AI Humanizer Guarantee My Content Is Undetectable?

Nope. No single tool can promise a 100% guarantee that your content will be undetectable forever. While a top-tier humanizer like Natural Write gives you a massive advantage against today's best detectors, the technology on both sides is always evolving.

Content that flies under the radar today might get flagged by a more advanced tool a year from now. The smartest approach is a simple, three-step workflow:

- Generate your first draft with AI.

- Humanize it with a powerful tool to smooth out the robotic patterns.

- Review it yourself for a final polish, adding your own insights and checking the facts.

This blend of machine speed and human oversight is what creates the most authentic and reliable results.

Ready to turn your AI drafts into polished, natural-sounding content that’s ready for the world? Natural Write offers a free, one-click way to humanize your text so it sails past detectors while keeping your core message intact. Give it a try and feel the difference.