Does Turnitin Check for AI? Explained

December 30, 2025

Yes, Turnitin absolutely checks for AI-generated text. But this isn't a simple add-on to its famous plagiarism checker. It’s a completely different beast, and its arrival has kicked up a lot of debate for both students and educators.

So, Does Turnitin Detect AI? Yes, But It’s Complicated.

Since early 2023, Turnitin has built a sophisticated AI writing detector right into its platform. This was a direct answer to the explosion of powerful tools like ChatGPT. But it's crucial to understand this new system isn't looking for copied-and-pasted text from a database of old papers or websites.

Instead, it acts more like a linguistic detective. It analyzes the style and structure of the writing itself, trained to spot the subtle, almost invisible fingerprints that AI models tend to leave behind.

Think of it this way: the classic plagiarism checker is looking for stolen goods. The new AI detector, on the other hand, is analyzing the handwriting to see if it looks a little too perfect, a little too robotic.

How Does This New Detection Work?

The AI detector is all about patterns. It zeroes in on specific linguistic traits that often differ between how a person writes and how a machine generates text.

It’s important to know that it doesn't "prove" misconduct on its own. What it does is give educators an indicator—a percentage score—suggesting how much of the submitted text was likely written by an AI. This shifts the focus from simple copy-paste plagiarism to the much murkier waters of authorship and originality.

The core function of the AI detector is to identify writing patterns, not to make a final judgment. It is designed as a tool to help educators start a conversation about academic integrity, not end one with an accusation.

Why Its Accuracy Is So Heavily Debated

While Turnitin claims its detector is highly accurate—boasting a 98% success rate on papers with significant AI content—the real-world performance is a subject of intense discussion. The tool’s effectiveness can swing wildly depending on a few key factors:

- Editing and Paraphrasing: Lightly edited AI text is much harder to spot than raw output from a chatbot.

- Hybrid Content: Papers that mix human writing with AI-generated sentences are notoriously tricky for any detector to analyze correctly.

- False Positives: There are very real, documented concerns about human-written text being incorrectly flagged as AI-generated.

Because things are so complex, just knowing that it checks for AI isn't enough. You need to understand how the detector works and what its results really mean.

To make things clearer, here’s a quick breakdown of what Turnitin's AI detection is all about.

Turnitin's AI Detection at a Glance

| Feature | Key Detail |
|---|---|
| Primary Function | Analyzes text for patterns characteristic of AI writing, not direct plagiarism. |
| Output | Provides a percentage score indicating the likelihood of AI generation. |
| Technology | Based on training with large language models (LLMs) to recognize stylistic "fingerprints." |
| Claimed Accuracy | Turnitin reports a 98% accuracy rate for papers with over 20% AI content. |
| Main Limitation | Prone to false positives and struggles with heavily edited or hybrid (human-AI) text. |
| Intended Use | A tool for educators to start a dialogue, not definitive proof of academic misconduct. |

Ultimately, this new technology has changed the game. Navigating it successfully means demystifying the tech, knowing its limits, and figuring out how to work in this new academic reality.

How Turnitin's AI Detector Actually Works

So, does Turnitin check for AI? The simple answer is yes, but how it does it is a whole different ballgame from its classic plagiarism checker. This isn’t some magic trick; it’s a sophisticated kind of pattern recognition that looks for clues hiding in plain sight.

Think of it like two different detectives on a case.

The first detective—the old-school plagiarism checker—heads to a massive warehouse filled with known stolen goods (i.e., a database of papers, articles, and websites). Its job is to find an exact match. It's looking for direct, verifiable copies.

The second detective—the new AI detector—doesn't even glance at the warehouse. Instead, it analyzes the suspect's handwriting, their tone of voice, and the weird little patterns in their speech. It’s looking for the subtle, almost invisible tells that suggest the words didn't come from a human. That's exactly how Turnitin's AI detector operates.

The Two Big Clues: Perplexity and Burstiness

The system is trained to spot the linguistic fingerprints that AI models tend to leave behind. Two of the most important concepts here are perplexity and burstiness. They might sound a bit academic, but the ideas are actually pretty straightforward.

Perplexity is just a fancy way of measuring how predictable text is. Human writing is often messy and full of surprises. We use weird word choices and creative phrasing. AI, on the other hand, is trained to pick the most statistically likely next word, which makes its writing incredibly smooth but also… predictable. A low perplexity score is a red flag that the text is too "perfect."
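To make the idea concrete, here is a minimal sketch of perplexity in Python. It is a toy: it assumes a simple word-frequency (unigram) model with add-one smoothing, fit on a tiny reference text, whereas real detectors rely on a large language model's token probabilities. Turnitin does not publish its model, so the function name `unigram_perplexity` and the sample sentences are purely illustrative.

```python
import math
from collections import Counter


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model fit on `reference`.

    Toy stand-in for a language model; not how any commercial detector works.
    """
    ref_tokens = reference.lower().split()
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")

    counts = Counter(ref_tokens)
    vocab = set(ref_tokens) | set(tokens)
    total = len(ref_tokens) + len(vocab)  # add-one smoothing denominator

    # Average negative log-probability per token, then exponentiate.
    log_prob = sum(math.log((counts[t] + 1) / total) for t in tokens)
    return math.exp(-log_prob / len(tokens))


reference = "the cat sat on the mat the dog sat on the rug"
predictable = "the cat sat on the mat"
surprising = "purple thunder devoured seventeen umbrellas yesterday"

print(unigram_perplexity(predictable, reference))  # lower: words the model expects
print(unigram_perplexity(surprising, reference))   # higher: words the model never saw
```

The more expected each word is to the model, the lower the number. That is the sense in which a very low score suggests text that is too "perfect."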

Burstiness is all about rhythm. Humans write in fits and starts. We’ll write a long, winding sentence and follow it up with a short, punchy one. This creates a natural, uneven flow. AI models often spit out sentences that are eerily similar in length and structure, creating a monotonous, flat rhythm that just doesn't feel human.

The AI detector scans a paper for these signals, sentence by sentence, building a profile of the writing style.

It’s kind of like the detector is constantly asking itself, "What are the odds a human would have written this exact sequence of words?" If the answer keeps coming back "not very likely," the AI score starts to creep up.

From Signals to a Score: What the Percentage Means

After its analysis, Turnitin gives you a percentage score. This number shows how much of the document the model is confident was written by AI. But here’s the crucial part: this score is an indicator, not a guilty verdict.
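As a rough mental model of how sentence-level signals could roll up into a single percentage, consider the sketch below. Turnitin does not publish how it aggregates its results, so everything here is hypothetical: the per-sentence scores, the `threshold` of 0.5, and the `ai_percentage` function are invented to illustrate the general idea, not to reproduce the real calculation.

```python
def ai_percentage(sentence_scores: list[float], threshold: float = 0.5) -> float:
    """Share of sentences (as a percentage) whose score meets the threshold.

    `sentence_scores` are hypothetical per-sentence "likely AI" scores in [0, 1].
    """
    if not sentence_scores:
        return 0.0
    flagged = sum(1 for score in sentence_scores if score >= threshold)
    return 100.0 * flagged / len(sentence_scores)


# Four sentences, two of which score above the (assumed) threshold.
print(ai_percentage([0.9, 0.1, 0.8, 0.2]))  # 50.0
```

Notice that moving the threshold changes the final number even though the writing has not changed, which is one reason the score works better as an indicator than as a verdict.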

When a professor sees a high score, they're meant to use it as a conversation starter, not as the final word on academic misconduct. They’ll likely look for other signs, like a sudden shift in writing style from a student's past work or if the student can't explain their own paper's ideas.

When Turnitin first rolled out this feature in April 2023, it boasted a 98% accuracy rate for identifying AI-generated text, with a false positive rate under 1% on papers with over 20% AI writing. The scale of this is massive. By July 2023, it had already scanned 65 million papers and found that over 10% contained significant AI writing. You can dig into some of the early data on Turnitin's AI detection capabilities to see just how quickly this has changed the academic world.
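It helps to run the arithmetic on what those figures would mean for a flagged paper. The sketch below uses Bayes' rule with three inputs taken loosely from the numbers above: roughly 10% of papers containing significant AI writing, a 1% false positive rate, and the 98% figure treated as a detection rate. That last step is an assumption, since "accuracy" and "detection rate" are not the same thing, and the function name is just for illustration.

```python
def positive_predictive_value(
    prevalence: float, detection_rate: float, false_positive_rate: float
) -> float:
    """Probability that a flagged paper really contains AI writing (Bayes' rule)."""
    true_flags = prevalence * detection_rate
    false_flags = (1 - prevalence) * false_positive_rate
    if true_flags + false_flags == 0:
        raise ValueError("no papers would be flagged with these inputs")
    return true_flags / (true_flags + false_flags)


# Assumed inputs: 10% prevalence, 98% detection rate, 1% false positive rate.
ppv = positive_predictive_value(0.10, 0.98, 0.01)
print(f"{ppv:.3f}")  # 0.916

# At a 1% false positive rate, every 1,000,000 human-written papers
# would still produce about 10,000 wrongly flagged submissions.
print(round(1_000_000 * 0.01))  # 10000
```

Under these assumptions, about 1 in 12 flagged papers would be human-written, which is why the score needs a person's judgment behind it.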

This context is everything. The tool is there to provide data that supports an educator’s judgment, not replace it. It’s designed to flag statistical oddities in the writing, leaving the final call up to a human.

Accuracy Claims Versus Real-World Performance

Turnitin proudly advertises a 98% accuracy rate for its AI detector, a number that sounds almost bulletproof. But that figure comes from a clean, controlled lab setting. Out here in the real world—with messy drafts, creative edits, and students blending their own writing with AI suggestions—the story gets a lot more complicated.

The detector is at its best when it's analyzing raw, unedited AI output. If a student just copies a block of text straight from ChatGPT and drops it into their paper, Turnitin is pretty good at catching it. That kind of writing has all the classic AI tells: predictable word choices, a monotonous rhythm, and an almost unnatural consistency.

But that’s not how most people actually use AI.

The Achilles' Heel: Edited and Hybrid Content

The real test comes when AI-generated text gets edited, paraphrased, or woven into a student's own writing. This is the detector's Achilles' heel.

Even small tweaks, like swapping a few words or rephrasing a couple of sentences, can start to confuse the algorithm. The more a piece is revised and refined by a human, the less it looks like a machine's work.

This is where that impressive 98% claim starts to fall apart. Independent studies have found that Turnitin’s real-world performance often doesn’t live up to the hype. For example, one analysis found its detection rate for pure AI-generated text was just 77%. For "disguised" AI—like ChatGPT text run through a basic paraphraser—it dropped to 63%. And for papers mixing human and AI writing, the success rate plummeted to between 23% and 57%. To see the full breakdown, check out the detailed AI detector accuracy test from BestColleges.

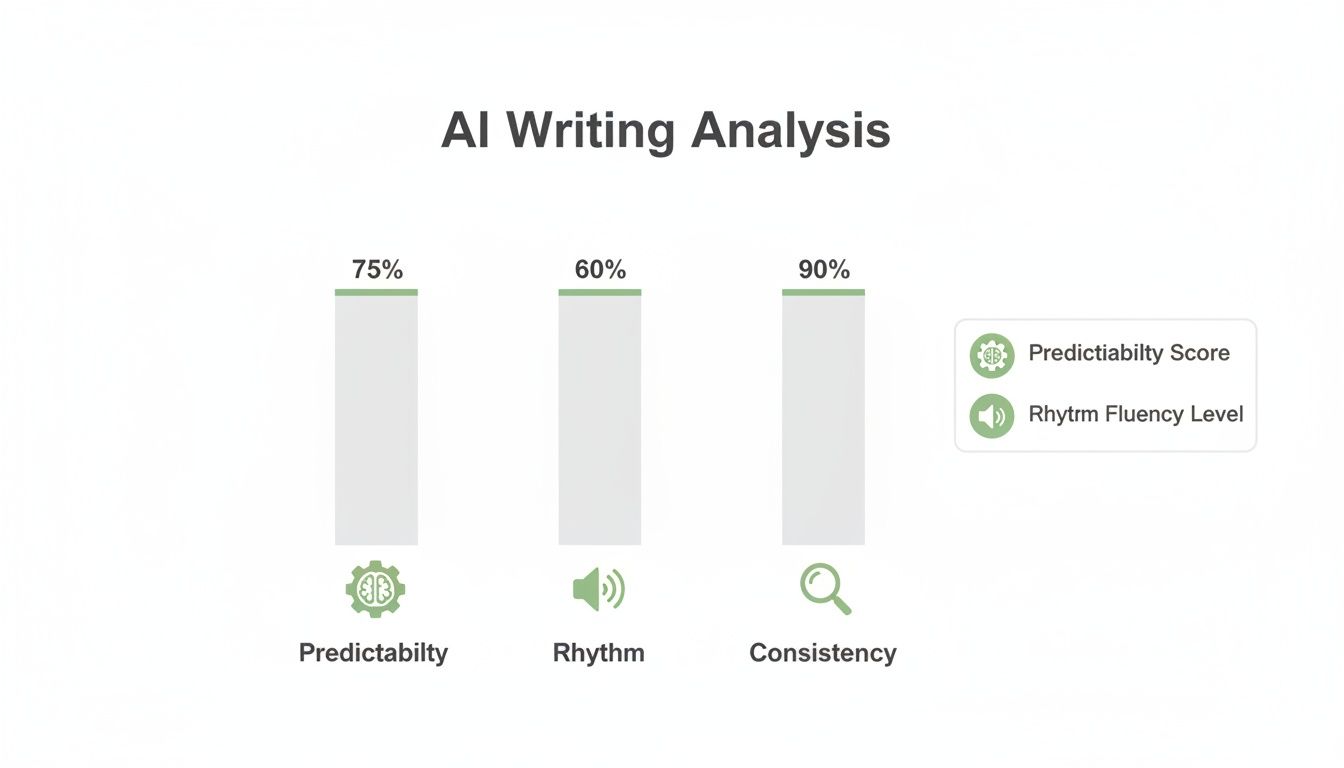

The AI writing analysis above shows some of the linguistic signals that these detectors look for to spot robotic patterns.

As you can see, the overly consistent rhythm and predictable sentence structures are dead giveaways—and they’re also the first things a good human editor would fix.

Turnitin's detection accuracy can vary quite a bit depending on what kind of content it's scanning. Here’s a quick look at how it fares with different types of writing.

Turnitin AI Detection Accuracy by Content Type

| Content Type | Reported Detection Accuracy | Why It's Harder/Easier to Detect |
|---|---|---|
| Raw AI-Generated Text | High (often 70-85%) | Easiest to detect. Unedited output has a distinct, predictable statistical pattern that stands out clearly to the algorithm. |
| Paraphrased AI Text | Moderate (often 40-65%) | Harder. Simple paraphrasing tools can alter sentence structure and vocabulary just enough to confuse the detector, but often leave some AI fingerprints behind. |
| Mixed Human-AI Text | Low (often 20-55%) | Very hard to detect. When human writing is blended with AI suggestions, the overall text loses its robotic consistency, making it difficult for the algorithm to separate the two. |
| Technical/Formal Writing | Variable (Higher false positive risk) | Can be tricky. This style often uses straightforward language and structured formats, which can accidentally mimic the low "perplexity" of AI writing, even if 100% human-written. |

This shows us that as soon as a human hand gets involved in editing, the AI's signature begins to fade, making reliable detection much tougher.

The Serious Risk of False Positives

Maybe even more worrying than missing AI-written text is the risk of false positives. This is when a student's completely original, human-written work gets incorrectly flagged as AI-generated. It’s not just a technical glitch; it's a real problem that causes incredible stress for students who are wrongly accused of cheating.

A few things can trigger a false positive:

- Formulaic Writing: Students who stick too rigidly to a specific essay structure (like the classic five-paragraph format) can produce text that feels predictable enough to fool the detector.

- Technical or Formal Language: Straightforward, unembellished writing, which is common in science and technical fields, can sometimes look like the low-complexity text an AI would generate.

- Non-Native English Speakers: Writers who learned English as a second language sometimes use simpler sentence structures or more common words, which an algorithm can misinterpret as AI writing.

A high AI score isn't a guilty verdict. It's just a statistical assessment from an algorithm, and algorithms make mistakes. It should be the start of a conversation, not the final word.

This gray area gets to the heart of the problem. As AI gets better and writers learn to use it as a smart assistant, the line between human and machine writing is only going to get blurrier. To understand this challenge better, you might want to read our guide on how accurate AI detectors truly are. Relying on a percentage score alone is a risky game, and it makes human judgment more critical than ever.

The Evolving Arms Race of AI Bypassing Tools

The moment AI detectors hit the scene, a whole new category of software popped up right alongside them: AI bypassing tools. This kicked off a constant, back-and-forth technological cat-and-mouse game. On one side, you have Turnitin, constantly tweaking its algorithms to spot the signature of robotic writing. On the other, you have tools designed specifically to make AI text sound human enough to fly under the radar.

And we're not just talking about simple paraphrasers that swap out a few words. While those might get you past a basic plagiarism check, they usually leave the core AI sentence structure intact—a dead giveaway for a sophisticated detector like Turnitin. The real players in this game are the more advanced "AI humanizers."

From Simple Rewrites to Sophisticated Humanization

Think of a basic paraphrasing tool as a digital thesaurus on autopilot. It might change "The experiment was conducted successfully" to "The test was carried out effectively," but the underlying rhythm and predictability don't really change. Turnitin's system is built to look right past those surface-level edits and analyze the deeper linguistic patterns.

Advanced humanizers, though, are playing a totally different sport. They don't just swap words; they fundamentally restructure the text to mimic the natural, slightly chaotic flow of human writing. This often involves:

- Varying Sentence Length: Deliberately mixing long, complex sentences with short, punchy ones to break up the monotonous flow that AI is known for.

- Improving Word Choice: Replacing common, high-probability words with more nuanced or creative alternatives—the kinds of words a human writer would choose.

- Injecting "Burstiness": Recreating the uneven, stop-and-start rhythm of human thought, which helps the text feel more natural to an analytical tool.

These tools are essentially reverse-engineering what AI detectors look for and then systematically scrubbing those tells from the text. As detectors get smarter, so does the technology designed to refine AI-generated drafts, like specialized AI Content Humanizer tools.

The Role of Ethical AI Editors

This is where platforms like Natural Write come into the picture. They aren't positioned as tools for academic cheating, but rather as advanced editors for an AI-assisted workflow. The idea isn't to help a student pass off a 100% AI-generated paper as their own. It’s to take a first draft—where a user has already provided the core ideas and critical thinking—and polish it to a higher standard of quality and authenticity.

An ethical AI humanizer acts as a final editing layer. It helps ensure that a writer’s unique ideas are communicated with the clarity, style, and natural flow of a professional human writer, removing any lingering robotic artifacts from the text.

This ongoing arms race is pushing technology forward at an incredible pace. By 2025, Turnitin's battle against AI bypassers is expected to mark a major shift in academic tech. Forecasts suggest future updates will specifically target tools that mimic human writing styles, yet false positives are still predicted to linger at around 1-2%. This reality has sped up the development of platforms like Natural Write, which helps users ethically refine AI drafts for all sorts of needs. You can get a much deeper look into this process in our article on bypassing AI detection.

By focusing on enhancing a user's original work, these tools aim for a responsible middle ground. They help writers use AI for efficiency while making sure the final product reflects genuine human insight. It makes the question of "does Turnitin check for AI" part of a much bigger conversation about the future of writing itself.

What to Do If Your Work Is Flagged for AI

Seeing a high AI score next to your name can send a jolt of panic through any student. But before you spiral into a worst-case scenario, take a breath. An AI flag isn’t a guilty verdict; it's the start of a conversation, and you can absolutely prepare for it.

Remember, the score is just an indicator. As we’ve covered, these detectors are imperfect tools prone to false positives, especially with technical writing or text from non-native English speakers. Your first move is to stay calm and approach the situation methodically, not defensively.

Think of it as a chance to prove your academic integrity and walk someone through the real work you put into your assignment.

Step 1: Understand Your Institution's Rules

Before you do anything else, you need to know the rules of the game. Every school has its own academic integrity policy, and most are scrambling to update them with guidelines on artificial intelligence.

Find your university's or even your instructor's specific policy on AI use. Does it permit AI for brainstorming? For fixing grammar? Or is there a zero-tolerance policy? Knowing the official stance will frame your entire conversation and help you understand what, if any, line was crossed.

Step 2: Gather Evidence of Your Writing Process

This is where you build your case. The best defense against an AI flag is a well-documented paper trail that proves you are the author. Your goal is to pull together concrete evidence showing how your ideas grew from a spark to a finished paper.

Your evidence file should include things like:

- Outlines and Brainstorming Notes: Any rough sketches, mind maps, or jotted-down notes show the real, messy start of your ideas.

- Rough Drafts: Having multiple versions of your paper is powerful. It demonstrates a clear progression of work over time, not a single, perfect output.

- Research Materials: Keep a log of the articles, books, and websites you consulted, along with any notes you took. Your browser history can even act as a timeline of your research efforts.

- Version History: This is your silver bullet. If you use Google Docs or Microsoft Word online, the version history provides a time-stamped, uneditable log of your writing process, showing every single change you made.

Think of your version history as a digital alibi. It provides a time-stamped record of your work, showing the text taking shape through your own effort, word by word, over hours or days. That pattern is very hard to fake after the fact.

Step 3: Prepare for a Constructive Conversation

With your evidence in hand, you're ready to talk to your professor. Schedule a meeting and go into it with the mindset of having a discussion, not a confrontation. You’re there to transparently show your work and articulate your understanding of the material.

During the meeting, walk your instructor through your process. Explain your thesis, how you developed your arguments, and why you structured the paper the way you did. If you can confidently discuss the nuances of your own work, it becomes much harder for anyone to believe it was written by a machine.

By staying calm, gathering your proof, and preparing for a mature conversation, you can turn a stressful AI flag into a moment that actually reinforces your integrity as a student.

Using AI Ethically to Future-Proof Your Writing

Instead of getting bogged down in how to avoid detection, the smarter move is to completely reframe your relationship with AI. It’s not a cheating device. Think of it as a powerful creative partner that can make you a more efficient and thoughtful writer.

The conversation is finally shifting from "does Turnitin check for AI?" to "how can I use AI responsibly?"

AI tools are fantastic for smashing through common writing barriers. They can be a tireless assistant for brainstorming, structuring a tough argument, or just getting past that intimidating blank page. The key is to treat them as a launchpad, not a crutch.

This kind of ethical workflow lets you stay in full control of your work while tapping into AI’s speed and power.

A Responsible AI Writing Workflow

A responsible approach puts you firmly in the driver’s seat. The process should always start and end with your own brain and your own voice.

1. Brainstorm and Outline with AI: Use a model to spitball ideas, explore potential arguments, or map out different ways to structure your paper. It’s a great way to see angles you might have missed.

2. Generate a Rough First Draft: Once you have your outline and core ideas, ask the AI to produce a basic draft. Treat this output as raw material: a block of clay waiting to be shaped, not a finished sculpture.

3. Inject Your Voice and Analysis: This is the most important step. Take over completely. Rewrite the draft from your perspective, infusing it with your personal analysis, unique insights, and distinct writing style. This is where the real academic work happens and what makes the paper yours.

By taking an AI-generated draft and fundamentally reshaping it with your own intellect, you are not committing academic dishonesty. You are using a tool to assist your process while upholding the core principles of originality and intellectual ownership.

Polishing Your Work Ethically

After you’ve woven in your own analysis and voice, a final, ethical polishing step can make all the difference. This is where an advanced editing tool comes in handy. Think of it not as a way to hide anything, but as a sophisticated proofreader designed to sharpen clarity, improve flow, and ensure your final paper is impeccably written.

This step guarantees your AI-assisted work is not just original in thought but also meets the highest standards of quality.

By developing strong AI literacy, you can use these powerful tools to augment your own skills. This approach doesn't just help you produce better work now—it also future-proofs your abilities for a world where AI collaboration is becoming the norm. For a deeper dive, check out our guide on what is academic integrity in the age of AI.

Turnitin and AI: Your Questions Answered

When you're trying to figure out exactly how Turnitin handles AI-written text, a lot of questions pop up. Let's walk through some of the most common ones to clear the air and give you some straight answers.

Can Turnitin Detect ChatGPT-4o and Other New Models?

Yep. Turnitin is constantly playing catch-up, updating its system to spot the tell-tale signs of the latest AI models, including GPT-4o and its peers. The core of its detection method hasn't really changed—it's still looking for that unnatural, statistically predictable flow that even the most advanced models produce.

But here's the thing: the fundamental tell of AI generation is still there. Even though new models can generate incredibly sophisticated text, they're built to do one thing: predict the next most likely word. That process, by its very nature, leaves behind subtle machine fingerprints.

So, whether the text came from an older model or the latest and greatest, your best defense is still the same: significant human editing. The real challenge for Turnitin isn't how new the AI is, but how much you have reshaped the content with your own thinking.

Is It Possible to Get a 0 Percent AI Score?

A 0% AI score is totally achievable—and frankly, it's what you should expect for anything you've written entirely yourself. If you're using AI as a starting point, getting that score down to zero takes real work. It's much more than just swapping out a few words.

To hit that zero, you need to tear the text apart and rebuild it with your own voice, your own perspective, and your own analysis. Break up the monotonous rhythm of AI-generated sentences. Ditch the predictable, safe vocabulary and bring in language that’s specific to your topic and style. For a deeper dive into how this process works, this guide to AI-powered content creation offers some great insights.

Getting to a 0% score means transforming the text so completely that it reflects your thought process, not the AI's. The goal is to make the final work an authentic extension of your own ideas and writing style.

Does Turnitin Work for Other Languages?

Turnitin's AI detector was built and trained primarily on English. While the company says it works for other languages, its accuracy is definitely highest on English-language papers.

Reports from users and independent tests show that its performance can get a bit shaky with languages like Spanish, French, or German. This can lead to more false positives (flagging human work) and false negatives (missing AI work). So, if you're submitting a paper that isn't in English, be extra cautious. The linguistic patterns it's designed to hunt for just don't always translate.

Is Using an AI Humanizer Academic Dishonesty?

This is the big one, and the answer isn't a simple yes or no. It all comes down to your school's specific academic integrity policy and, more importantly, how you're using the tool.

It is absolutely academic dishonesty if you:

- Have an AI generate an entire essay for you.

- Run it through a humanizer just to hide the AI's tracks.

- Pass that work off as your own original thought.

However, it can be a perfectly ethical part of your writing process if you:

- Use AI as a brainstorming partner or to knock out a rough first draft.

- Personally rewrite the text, adding your own critical analysis and ideas.

- Use a humanizer as a final editing step to polish the flow and clarity.

The line is drawn at intent and ownership. If the core ideas, research, and critical thinking are yours, using a tool to refine the language is just an advanced form of editing. But always, always check your school's guidelines first.

When used ethically, a tool like Natural Write can be an invaluable partner in your writing process. It helps refine your AI-assisted drafts to ensure they are clear, natural, and reflect your unique voice, all while bypassing AI detectors. Transform your writing today at https://naturalwrite.com.