Check Essay for AI: Easy Ways to Ensure Academic Integrity

August 11, 2025

When you need to check an essay for AI, a two-pronged attack works best. First, run it through a good AI detector like Natural Write to get a baseline score. Then, do your own manual review, looking for the classic giveaways—things like stiff, overly formal language, perfect grammar that lacks any real personality, and sentences that all sound the same. Relying on just one of these methods is a gamble; using both gives you a much clearer picture.

The New Academic Reality of AI Writing

The rise of powerful tools like ChatGPT Plus has completely changed the game for academic writing. Trying to ban these tools outright isn't working. Instead, educators and students are having to figure out a new normal where the line between original work and automated text is getting harder and harder to see.

This has created a brand new challenge: how do you tell the difference between authentic student writing, a paper that got a little help from AI, and one that was cranked out entirely by a machine? Getting good at spotting the unique fingerprints of AI text is the first step toward keeping things fair and honest.

Understanding the Scale of AI Use

Just how common is this? Turnitin recently looked at over 200 million student papers and the findings were pretty revealing. They discovered that about 11% of assignments had at least 20% AI-generated text. A smaller group, around 3%, submitted work that was 80% or more AI-written.

What this tells us is that while wholesale cheating is a problem, the more common scenario is students using AI as an assistant. This requires a more thoughtful approach to detection, one that goes beyond a simple "yes" or "no" verdict.

Key Insight: The real challenge isn't just about catching essays written entirely by AI. It's about figuring out where human effort stops and AI assistance starts, a task that demands both smart technology and sharp human judgment.

This guide will walk you through the practical skills you need to check an essay for AI with confidence. Before we dive into the specific steps, here's a quick look at the methods we'll be covering.

Quick Guide to AI Essay Checking Methods

To get started, it helps to understand the main tools and techniques at your disposal. This table breaks down the primary methods for checking an essay, what they're best for, and why they're useful.

| Method | Primary Use | Key Benefit |
|---|---|---|
| AI Detection Tools | Getting a quick, data-driven probability score of AI use. | Fast, objective, and great for initial screening of many documents. |
| Manual Review | Identifying the subtle, stylistic signs of AI that tools might miss. | Catches nuances in tone, voice, and reasoning that software can't. |
| Source & Fact-Checking | Verifying claims, citations, and data points within the essay. | Exposes "hallucinations" or fabricated information common in AI output. |
| Plagiarism Checkers | Comparing the text against a vast database of existing content. | Identifies copied-and-pasted content, a common low-effort tactic. |

Think of these as layers of investigation. Using them together provides a much more reliable and fair assessment than relying on any single one alone. Now, let's get into the step-by-step process.

Getting the Most Out of an AI Detection Tool

Using a tool to check an essay for AI isn't just about copying and pasting text. The real art is in knowing how to read the results with a critical eye. When you get a report back, it's rarely a clean "yes" or "no" answer. What you’ll probably see is an overall probability score, along with some color-coded highlighting that points you to the iffy spots.

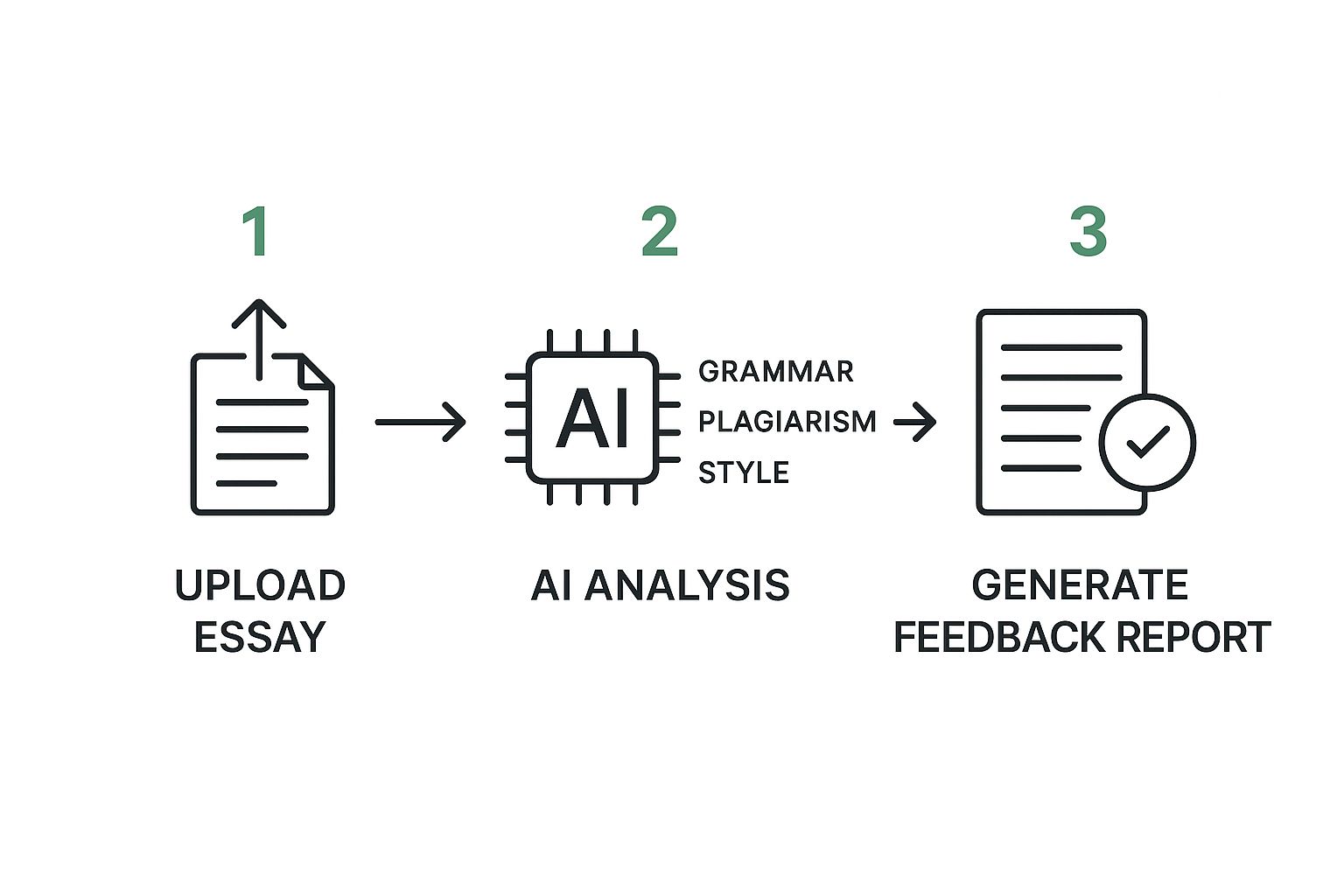

The process itself is usually simple: upload the text, let the tool analyze it, and then review the feedback.

This visual gives you a peek behind the curtain. The tool isn't just looking for one red flag; it's weighing multiple characteristics of the writing before it gives you a final score.

Understanding the Results

Your first move should be to look beyond that big percentage number. A score of 75% AI-generated doesn't automatically mean three-quarters of the essay was spat out by a machine. Instead, it reflects the tool's confidence level based on the patterns it's been trained to spot.

Here’s what I focus on when I get a report back:

- Highlighted Sentences: Pay extra attention to these. Any section flagged as "likely AI" usually has tell-tale signs, like predictable phrasing (low perplexity) or an unnaturally uniform rhythm (low burstiness).

- The Confidence Score: Think of this score as a probability, not a final verdict. A high score is your cue to dig deeper and inspect the text manually. A moderate score might just mean the writing is a bit formulaic, which is common in academic work.

The goal isn't to treat the AI score as the absolute truth. It's a starting point—a data point that tells you where to focus your own human judgment.
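If you want a feel for what "burstiness" means in practice, here is a minimal Python sketch. It assumes a very rough proxy: the variation in sentence length (standard deviation divided by the mean). This is not the formula any particular detector uses, and the function names are made up for illustration. Values near zero mean every sentence is about the same length, which is the uniform rhythm described above.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., !, ? and return the word count of each non-empty sentence."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Rough burstiness proxy: std dev of sentence length / mean sentence length.

    Assumption: this is an illustrative stand-in, not a real detector's metric.
    Returns 0.0 when there are fewer than two sentences to compare.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Three sentences of identical length versus a mix of short and long ones.
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The dog ran off across the wet field before anyone could react. Why?"

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

The uniform sample scores 0.00 because all three sentences have four words, while the varied sample scores well above 1. A number like this is only a hint about rhythm; plenty of careful human writers produce even sentence lengths too.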

Putting It All Together

At the end of the day, these tools are powerful allies in maintaining fairness. If you’re interested in the bigger picture, it’s also smart to understand how institutions are adapting. You can get a sense of this by exploring some common academic integrity guidelines that address these new technologies.

This kind of automated analysis isn’t just for the classroom, either. Professionals are using AI-driven tools for legal document review, showing how text analysis can boost accuracy in other fields, too. By blending a tool’s output with your own expertise, you create a review process that's both balanced and fair.

Be Skeptical of AI Detector Scores

While it's a good idea to check an essay for AI with a detection tool, it’s critical to remember these tools aren't foolproof. They give you a probability score, not a definitive verdict. Treating a high AI score as concrete proof can easily lead to false accusations against students who did the work themselves.

The biggest trap is the 'false positive'—when a tool flags human writing as AI-generated. And it happens a lot more than you'd think. One recent analysis found that popular AI detectors are only accurate about 63% of the time. Even more concerning was the false positive rate, which hovered around 25%.

That means one in every four completely original essays could be incorrectly flagged.
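To see how quickly false positives pile up, here is a small worked example in Python. The 25% false positive rate comes from the figure above. Everything else is an assumption chosen purely for illustration: a batch of 100 essays, 10% of them actually AI-written, and a detector that catches 75% of the AI-written ones.

```python
def flagged_breakdown(n_essays, ai_share, false_positive_rate, detection_rate):
    """Return (false_flags, true_flags, share_of_flags_that_are_really_ai).

    ai_share and detection_rate are illustrative assumptions, not measured values.
    """
    n_ai = n_essays * ai_share
    n_human = n_essays - n_ai
    false_flags = n_human * false_positive_rate  # human essays wrongly flagged
    true_flags = n_ai * detection_rate           # AI essays correctly flagged
    total_flags = false_flags + true_flags
    share_really_ai = true_flags / total_flags if total_flags else 0.0
    return false_flags, true_flags, share_really_ai


false_flags, true_flags, share_really_ai = flagged_breakdown(
    n_essays=100,
    ai_share=0.10,             # assumption for illustration
    false_positive_rate=0.25,  # figure quoted in the text
    detection_rate=0.75,       # assumption for illustration
)

print(f"Human essays wrongly flagged: {false_flags}")       # 22.5
print(f"AI essays correctly flagged:  {true_flags}")        # 7.5
print(f"Flagged essays that are AI:   {share_really_ai:.0%}")  # 25%
```

Under these assumptions, three out of every four flagged essays were written by the student. Change the inputs and the numbers shift, but the lesson holds: when most essays are honest work, even a modest false positive rate produces a lot of wrongly flagged papers.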

The Never-Ending Game of Cat and Mouse

AI writing models are getting smarter every day, which makes spotting their output a constant challenge. On top of that, users are getting more sophisticated at blurring the lines between human and machine-generated text.

Here are a few common ways this plays out:

- Advanced Paraphrasing: People use "humanizer" tools to rephrase AI text, specifically to trick detectors. Running a piece of text through a rewriter multiple times—a technique called recursive paraphrasing—can drop detection accuracy by as much as 55%.

- Mixed Authorship: A student might use an AI for brainstorming ideas or structuring an outline but then write the entire essay in their own words. The final piece ends up with a blend of human and AI fingerprints, which can easily confuse detection software.

- Simple Writing Styles: Ironically, human writing that is clear, concise, and follows a standard formula, the way many students are taught to write academic essays, can sometimes be mistaken for AI.

Key Takeaway: Think of an AI detection score as a starting point for your investigation, not the final word. It’s a signal to dig deeper, not a guilty verdict.

At the end of the day, fair assessment is what matters. Understanding how some users try to fool these systems can actually help you become a better judge of authenticity. Knowing the common tricks, like those detailed in guides on how to bypass AI detection, gives you the context to spot signs of manipulation and rely more on your own judgment.

Looking Beyond the Score: How to Spot AI Writing in an Essay

When you check an essay for AI, the score you get from a tool is a great starting point, but it's not the final word. Your own judgment is still the most powerful tool in your arsenal. Learning to spot the tell-tale signs of automated writing is a skill that complements any software, giving you a much clearer picture of what you're looking at.

So, what should you keep an eye out for? AI-generated text has a few quirks that tend to jump out once you know what to look for.

- Overly Formal Language: The tone often feels a bit...off. AI loves using complex words and rigid sentence structures that just don't match a student's typical voice.

- Repetitive Sentence Structures: You might start to notice a pattern. Many sentences might follow the exact same subject-verb-object format, creating a monotonous, robotic rhythm.

- Perfect Yet Lifeless Prose: The grammar and spelling will likely be flawless. But the writing often lacks personality, unique phrasing, or the small imperfections that make a human voice feel authentic. It's technically correct but has no soul.

Watch Out for Factual Glitches

One of the biggest giveaways is the presence of subtle factual errors, what some people call "hallucinations." An AI can confidently state incorrect dates, misattribute quotes, or even invent sources that look totally legitimate but don't actually exist.

For instance, an essay might cite a groundbreaking study from "The Journal of Applied Psychology, 2023," but a quick Google search shows no such article was ever published.

This is where your gut instinct comes into play. If a claim or a source feels just a little too convenient or slightly off, it’s worth a quick fact-check. This kind of manual review is more important than ever. Recent surveys show that 68% of educators are now using AI detection software. This has led to a sharp increase in disciplinary actions for AI misuse, with rates jumping from 48% to 64% in just two years. You can dig deeper into these trends in AI plagiarism statistics to see the bigger picture.

Key Takeaway: The most reliable way to assess an essay is to combine a technology check with your own expert review. Be on the lookout for perfect grammar that feels flat, repetitive sentence patterns, and facts that seem just a little too good to be true. These human insights are often more telling than any detection score.

Your Final Verdict Checklist for AI Detection

Alright, you’ve run the essay through an AI detector. Now what? You're probably looking at a score, maybe some highlighted sentences, and a whole lot of questions. A high AI detection score is definitely a major red flag, but it’s rarely the whole story.

Before you jump to any conclusions, you need to pull all the evidence together. This isn't about one number; it's about building a case by combining the tool's output with your own expert judgment and the context you have on the student. Rushing this part can lead to unfair or incorrect assessments.

The Holistic Review

A fair verdict comes from looking at the big picture. Think of this as your final gut check—a way to make sure your decision is balanced, defensible, and considers all the moving parts. This helps you avoid putting too much weight on any single clue.

Run through these questions:

- Is the AI score genuinely high? A consistent score over 70% is where I start paying very close attention. But even then, it needs backup evidence.

- Do you see the classic AI fingerprints? Look for that tell-tale robotic tone, strangely repetitive sentence patterns, or prose that’s grammatically perfect but completely devoid of life.

- Does the writing style feel right? Compare the essay's voice and complexity with the student's past work. A sudden, dramatic shift is one of the most compelling signs.

- Are there any factual errors or "hallucinations"? This is a big one. AI is notorious for inventing sources, getting dates wrong, or making confident claims that just fall apart under a quick fact-check.

Final Takeaway: The strongest case for AI use is a combination of a high detection score, multiple stylistic red flags you've spotted yourself, and a clear mismatch with the student's known writing style.

To help structure your thoughts, use this simple checklist. It forces you to document each piece of evidence, which is crucial for making a fair and well-supported decision.

Final Assessment Checklist

| Checklist Item | Yes/No | Notes/Observations |
|---|---|---|
| Is the overall AI score over 70%? | | |
| Are specific passages flagged as 100% AI? | | |
| Does the tone feel robotic or generic? | | |
| Is the sentence structure repetitive? | | |
| Is the writing style consistent with past work? | | |
| Are there factual errors or fake sources? | | |
| Does the essay lack personal insight or opinion? | | |

By the time you fill this out, your final verdict should feel less like a guess and more like a logical conclusion based on solid evidence.
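If you review a lot of essays, it can help to record the checklist in a consistent shape. The Python sketch below is one hypothetical way to do that. The 70% threshold comes from the checklist above. Treating "multiple" red flags as two or more, and the wording of the three outcomes, are assumptions made for this example. It is a note-taking aid, not a verdict machine.

```python
from dataclasses import dataclass


@dataclass
class EssayEvidence:
    ai_score: float           # detector score, 0-100
    stylistic_red_flags: int  # red flags you spotted yourself (tone, repetition, lifeless prose)
    style_mismatch: bool      # clear departure from the student's past work
    factual_errors: bool      # fabricated sources or claims that failed a fact-check


def recommend(e: EssayEvidence, score_threshold: float = 70.0) -> str:
    """Suggest a next step. The thresholds here are illustrative assumptions."""
    high_score = e.ai_score > score_threshold
    many_flags = e.stylistic_red_flags >= 2  # assumption: "multiple" means two or more
    signals = sum([high_score, many_flags, e.style_mismatch, e.factual_errors])

    # Strongest case: high score + multiple red flags + mismatch with past work.
    if high_score and many_flags and e.style_mismatch:
        return "strong case: document the evidence and talk to the student"
    if signals >= 2:
        return "mixed evidence: ask the student about their process"
    return "insufficient evidence: no action"


print(recommend(EssayEvidence(85, 3, True, False)))
print(recommend(EssayEvidence(85, 0, False, False)))
print(recommend(EssayEvidence(40, 2, False, True)))
```

Notice that the second example, a high score with nothing else to back it up, comes out as insufficient evidence. That is deliberate: the score alone should never carry the decision.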

This methodical approach ensures you're being fair. And for those interested in the other side of the coin, understanding how to ethically humanize AI text can offer powerful insights into the editing patterns that distinguish human writing from machine output.

Common Questions About Checking Essays for AI

Let's be honest: navigating the gray areas of AI detection can feel like walking a tightrope. The results from an AI checker aren't always a simple "yes" or "no." So, let's unpack some of the most common and tricky questions that pop up when you're reviewing a student's essay.

What Should I Do with 'Mixed' Results?

A "mixed" result is probably one of the most frequent outcomes you'll see. This is where a tool flags some parts of an essay as AI-generated and others as human-written. It often means a student used AI to brainstorm or build an outline but then wrote the main body of the work themselves.

Instead of jumping to conclusions, this is your cue to start a conversation. A mixed result isn't a red card; it's more like a yellow one. It signals that you need to dig a little deeper, not pass immediate judgment.

Approach the student and ask them about their process for the sections that were flagged. This turns a potentially disciplinary moment into a teaching opportunity about proper citation, academic integrity, and the ethical lines of using AI for schoolwork.

A Quick Tip: When a paper shows mixed authorship, it's the perfect chance to clarify your school's specific policies. Make sure students know exactly what counts as acceptable AI assistance versus what crosses into academic dishonesty. Clarity here is everything.

Where Is the Ethical Line for Using AI?

This is the big one, and the answer almost always comes down to your institution's official policy. As a general rule, most educators agree that using AI for brainstorming, checking grammar, or structuring an outline is fine. Think of it like a modern thesaurus or a spell-checker.

The line gets crossed when the AI is doing the heavy lifting—writing entire paragraphs or the bulk of the essay. The student's own critical thought, analysis, and voice should be the engine driving the work. When AI starts replacing that core effort, that's typically when it veers into a breach of academic integrity.

Do AI Detectors Violate Student Privacy?

That's a completely valid concern. Reputable AI detection tools are built with privacy as a top priority. A quality checker, for instance, will analyze the text's patterns and structure in real time without ever storing the actual content of the essay on its servers.

Before you commit to a tool, always take a look at its privacy policy. An ethical service will be transparent about how it handles data, giving you peace of mind that student work stays confidential and isn't being used for anything else.

Ready to check essays with confidence and clarity? Natural Write provides an integrated AI checker that flags robotic text, helping you review and revise with precision. Try it for free and ensure your assessments are fair and informed.