Can Turnitin Detect ChatGPT? Find Out the Truth Today

September 8, 2025

So, can Turnitin actually detect ChatGPT?

The short answer is yes, but it’s not that simple. Think of Turnitin's AI detection as a powerful new feature, not some foolproof lie detector. This is the start of a complicated cat-and-mouse game between AI writing tools and the software trying to spot them.

The Reality of AI Detection Technology

The real question isn't just a simple yes or no. It's about understanding the constant back-and-forth between AI's explosive growth and the detection tools scrambling to keep up.

This creates a real tension for students and educators. How do you responsibly use AI to be more efficient without crossing the line into academic dishonesty? It’s a gray area where technology, ethics, and school policies all collide. The core challenge is telling the difference between AI used as a helpful sidekick—like a grammar checker on steroids—and AI used to ghostwrite an entire assignment.

And that's where things get tricky.

A High-Stakes Balancing Act

Turnitin's approach is to provide data, not a final verdict. The platform gives instructors an AI score, but it’s meant to be a conversation starter, not an automatic accusation.

This score is based on analyzing tiny linguistic patterns that are often different in human versus machine-generated text. Our guide on how AI detectors work dives deeper into the mechanics behind this process.

The screenshot below shows you the main dashboard educators see. It's where they manage submissions and check originality reports.

This is basically the command center where instructors can view the AI detection report right alongside the classic plagiarism check, showing how integrated these tools have become.

"AI detection, like Turnitin’s AI writing detection feature, are resources, not deciders. Educators should always make final determinations based on all of the information available to them."

Accuracy Claims and Real-World Performance

So, how accurate is it? In 2025, Turnitin claims its AI detection system is about 98% accurate when it flags a text as AI-written. They say it has a false positive rate of less than 1% for documents with over 20% AI writing.

Turnitin attributes this accuracy to its own algorithms, which analyze writing patterns and compare them against massive databases of both human and AI-authored content. You can find more insights on this at detecting-ai.com.

But remember, no system is perfect. Things like heavy editing, paraphrasing, or mixing different tools can definitely muddy the waters and affect the results.

To give you a clearer picture, here’s a quick rundown of how Turnitin handles different types of AI-assisted content.

Turnitin's ChatGPT Detection At a Glance

This table summarizes how likely Turnitin is to flag content depending on how AI was used to create it.

| Content Type | Detection Likelihood | Key Factors |
|---|---|---|
| Raw AI Output | Very High | Unedited text copied directly from models like ChatGPT. It has clear AI linguistic markers. |
| Lightly Edited AI | High | Minor changes to wording or grammar. The core structure and sentence patterns remain AI-like. |
| Heavily Edited AI | Moderate | Significant rewrites, added personal insights, and structural changes. Blends human and AI patterns. |
| Paraphrased AI | Low to Moderate | Content rewritten by a paraphrasing tool. Success depends on the tool's sophistication. |
| AI as an Idea Generator | Very Low | AI was used for brainstorming or outlines, but the final text is written by a human. |
| Humanized AI | Very Low | Text processed by a humanization tool to mimic human writing styles and remove AI giveaways. |

As you can see, detection isn't a simple on-or-off switch. The more a student edits, rewrites, and infuses their own voice into an AI-generated draft, the less likely it is to trigger a high AI score.

How Turnitin's AI Detector Works

So, how can Turnitin tell if ChatGPT had a hand in your paper? It’s important to understand that its AI detector isn't looking for plagiarism in the traditional sense. It's not scanning the web for copied-and-pasted text.

Instead, think of it as a linguistic detective. It’s trained to spot the subtle, almost invisible fingerprints that AI writing leaves behind. It analyzes a document piece by piece, hunting for statistical patterns that separate human writing from machine-generated text.

The system was built by feeding it a massive library of both human and AI-written examples, allowing it to learn the signature style of each. When a paper is submitted, the detector breaks it down and compares the writing characteristics of each section against what it’s learned. It’s less about what you said and more about how you said it.

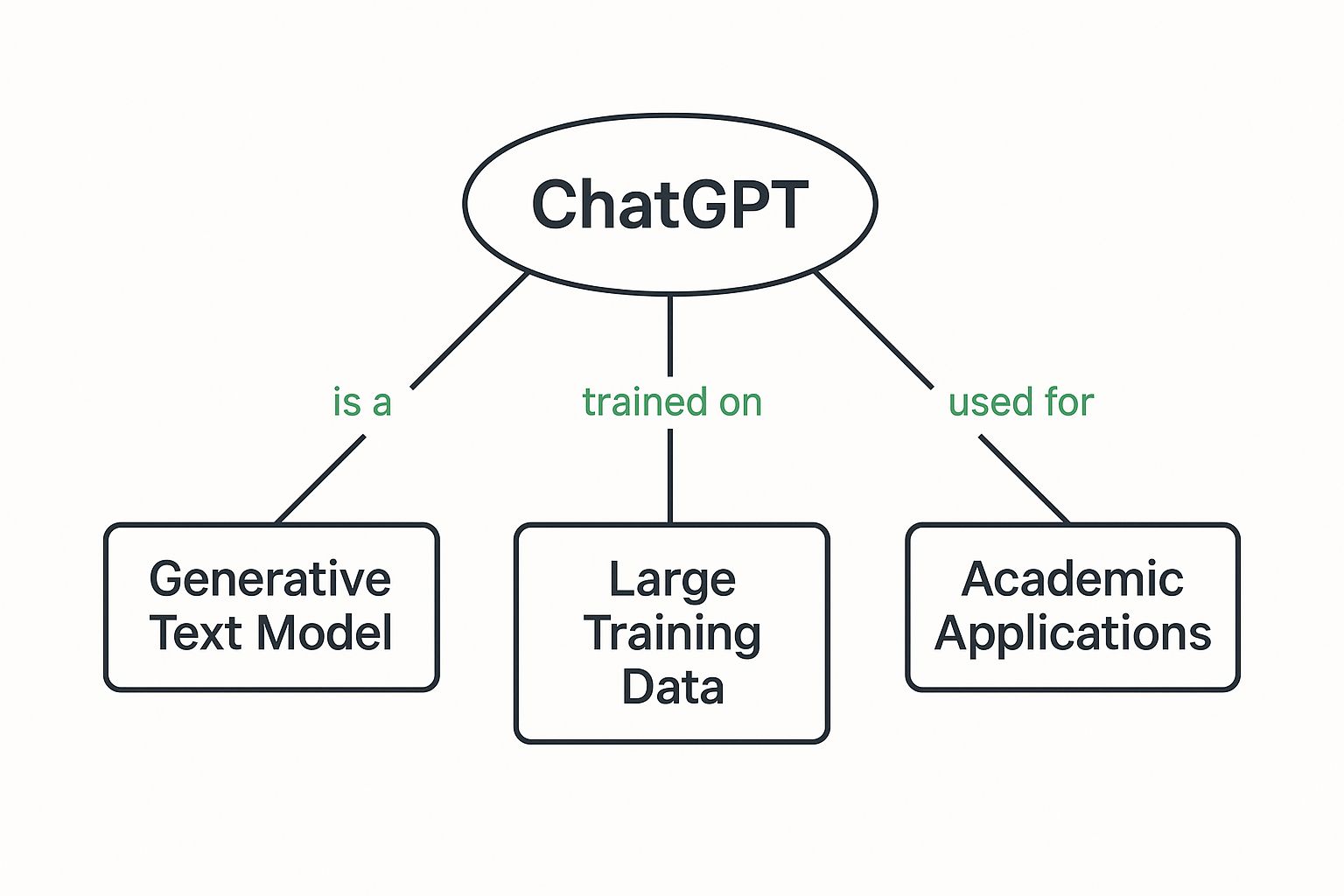

This image gives a simplified look at how language models like ChatGPT work, which is exactly what creates the patterns these detectors are built to find.

The model’s reliance on its training data is its greatest strength and its biggest tell. It’s what helps it write coherent sentences, but it’s also what creates the predictable rhythms that detectors can pick up on.

Key Metrics in AI Detection

What clues is this detective actually looking for? While Turnitin's exact formula is a company secret, it’s built on principles common across the industry. It all comes down to a few key ideas that measure the "humanness" of a piece of writing.

Two of the most important metrics are perplexity and burstiness.

Perplexity: This is just a fancy term for how predictable the writing is. Think of it like a guessing game. If I say, "The dog chased the...", you can probably guess the next word is "ball" or "cat." AI text tends to have low perplexity because language models are built to favor statistically likely next words. Human writing, on the other hand, is full of surprises. We make less obvious word choices, which gives our writing higher perplexity.

Burstiness: This is all about rhythm and flow. Humans write in bursts. We’ll fire off a few short, punchy sentences, then follow up with a longer, more complex one. This creates a natural, uneven tempo. AI models often struggle with this, producing sentences of very similar length and structure. The result is a flat, monotonous rhythm with low burstiness.
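To make these two ideas concrete, here is a toy Python sketch. It is not Turnitin's method, which is not public. The `perplexity` function assumes you already have per-token probabilities from some language model (the numbers below are made up), and `burstiness` is approximated here as the variation in sentence length relative to the average, which is just one simple way to measure it.

```python
import math
import re
import statistics


def perplexity(token_probs):
    """Perplexity from the probability a language model gave each token.

    Lower values mean the text was more predictable to that model.
    """
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(text):
    """Sentence-length variation: standard deviation divided by the mean.

    This is an illustrative stand-in, not an official definition.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Made-up probabilities: a "predictable" text versus a "surprising" one.
predictable = [0.9, 0.8, 0.9, 0.85]
surprising = [0.2, 0.05, 0.4, 0.1]
print(perplexity(predictable) < perplexity(surprising))  # True

even = "The cat sat down. The dog ran off. The bird flew away."
uneven = "Stop. I never expected the results to change this quickly. Why?"
print(burstiness(even) < burstiness(uneven))  # True
```

Real detectors work with far richer signals, but the direction is the same: predictable word choices and evenly sized sentences push a text toward the "machine" end of the scale.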

The AI score you see in a Turnitin report isn't a plagiarism score. It's a statistical prediction—a number representing how likely it is that a machine wrote a given section, based on these linguistic patterns.

How the AI Score Is Calculated

Turnitin's model doesn't just look at the whole document. It assigns a probability score to every single sentence, highlighting the parts it suspects are AI-generated.

The final percentage you see is simply the proportion of the document that the model confidently flagged as AI. So, a score of 60% means the detector found AI-like patterns in about three-fifths of the text.
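As a rough illustration of that arithmetic, the sketch below turns per-sentence probabilities into a document-level percentage. The `ai_score` function, the 0.5 threshold, and the sample probabilities are all assumptions made for this example. Turnitin does not publish its cutoff or whether it weights sentences by length.

```python
def ai_score(sentence_probs, threshold=0.5):
    """Percentage of sentences whose AI probability meets the threshold.

    Both the threshold and the equal weighting of sentences are
    hypothetical choices for illustration only.
    """
    if not sentence_probs:
        return 0.0
    flagged = sum(1 for p in sentence_probs if p >= threshold)
    return 100.0 * flagged / len(sentence_probs)


# Five sentences, three of them above the hypothetical cutoff.
probs = [0.9, 0.8, 0.7, 0.2, 0.1]
print(ai_score(probs))  # 60.0
```

Three of the five sentences clear the cutoff, so the score is 60%, which matches the three-fifths example above.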

It's absolutely critical to remember that this score is just a data point, not a final verdict. Turnitin itself advises instructors to use it as a conversation starter, not as concrete proof of academic misconduct. There are plenty of things that can influence the score, which is why understanding its flaws is just as important as knowing how it works.

How Accurate Is Turnitin, Really?

An AI detection score isn't a simple "guilty" or "not guilty" verdict. It's more like a probability gauge, and its accuracy really depends on how a student used AI in the first place. When someone just copies and pastes text straight from ChatGPT without making any changes, Turnitin is at its most effective.

In these clear-cut cases, the writing is packed with the predictable, low-complexity sentences that the detector is trained to spot. This kind of "raw" AI output is the easiest for the system to flag because the machine's linguistic fingerprints are all over it.

But the moment a human starts to heavily edit that text, the detector’s confidence begins to waver.

Where the Accuracy Starts to Drop

The real gray area for AI detection is in "hybrid" writing. This is what happens when a student uses ChatGPT to create a first draft but then dives in to rewrite, restructure, and inject their own voice and ideas into the text.

This editing process essentially scrambles the signals the detector is looking for by blending human and machine patterns. The more a writer tweaks the text, the more they disrupt the AI's predictable rhythm and word choice, muddying the waters for the algorithm.

Turnitin’s model isn't just spitting out a single score; it's evaluating the statistical probability of AI authorship sentence by sentence. Heavy human editing introduces the kind of unpredictability that drives this probability down, creating ambiguity in the final report.

This is where the accuracy takes a noticeable hit. For these heavily edited hybrid texts, detection rates can fall to around 60-80%. That’s a pretty big drop from its near-perfect performance on raw AI, and it points to a major limitation in the technology.

The Problem with Paraphrasing and Heavy Editing

The system struggles even more with skillfully paraphrased AI content. When AI-generated text is completely rephrased—not just edited—its core linguistic structure changes, making it much harder to flag.

In these situations, Turnitin's detection accuracy can plummet to somewhere between 40% and 70%. This is exactly why the company advises that AI scores should only be used as a starting point for a conversation, never as conclusive proof. You can dig deeper into these rates and the need for human review in recent AI detection findings.

Here are the scenarios where Turnitin’s accuracy is most likely to be challenged:

- Hybrid Drafts: A mix of AI-generated sentences and original human writing.

- Skillful Paraphrasing: Content that has been manually rewritten to alter sentence structure and vocabulary.

- 'Humanizer' Tools: Services specifically designed to rephrase AI text to fly under the radar.

- Atypical Writing Styles: Highly technical, formulaic, or even some non-native English writing can sometimes mimic the patterns of AI.

Because of all these variables, the context behind a high AI score is everything. It's a signal to ask more questions, not to jump to conclusions.

With the buzz around "can Turnitin detect ChatGPT" getting louder, a whole world of myths and shaky strategies has popped up. Everyone's looking for a way to outsmart AI detectors, but most of these tricks are based on a misunderstanding of how the tech actually works.

Let's bust a few of the most common—and ineffective—tactics.

One of the oldest tricks in the book is swapping out words with synonyms. This "thesaurus method" might have fooled old-school plagiarism checkers, but AI detection is a whole different ballgame. It doesn't really care about individual words. It’s looking at the statistical relationships between them and the overall predictability of your sentences. Changing "important" to "significant" won't hide the robotic rhythm underneath.

Along the same lines, running your text through a basic online paraphraser is usually a waste of time. Most of these tools are just fancy synonym-swappers, and Turnitin’s model is specifically trained to spot the awkward patterns they leave behind.

The Rise of So-Called 'Humanizer' Tools

Then you have the "AI humanizer" services that promise to rewrite your text until it's undetectable. While some are definitely better than others, they're not a magic bullet. In fact, many spit out awkward or unnatural phrasing that can be a dead giveaway on its own. It's a constant arms race: as the humanizers get smarter, so do the detectors.

The real problem with these methods is they only tackle surface-level changes. Genuine human writing is messy. It has a unique voice and an unpredictable flow—qualities that are incredibly hard to fake with simple software tricks.

If you're curious about the deeper mechanics, this detailed guide on how to bypass AI detection breaks down what actually works and what doesn't. At the end of the day, the best "humanizer" is a human editor who can completely rethink and restructure the content.

The Flip Side of Detection: False Positives

It's also important to talk about the other side of the coin: false positives. Even though Turnitin claims its false positive rate is very low, no system is perfect. Certain human writing styles can sometimes get flagged as AI-generated by mistake.

Here are a few situations where that might happen:

- Non-Native English Speakers: Someone who learned English as a second language might naturally use more structured, textbook-style sentences. This can accidentally mimic the predictability that AI detectors are trained to find.

- Highly Technical Writing: Think about scientific papers or legal documents. They often use formulaic language and a very direct tone, which lacks the creative "burstiness" of other writing styles.

- Over-relying on Grammar Checkers: Tools like Grammarly are great, but their advanced sentence-rewriting features can smooth out your writing so much that it loses its human variation. This can sometimes trigger a flag.

This is exactly why Turnitin insists that a high AI score isn't an automatic accusation. It's supposed to be the start of a conversation between the instructor and the student.
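A quick back-of-the-envelope calculation shows why even a low false positive rate matters at scale. The sketch below uses 1% as an upper bound, taken from Turnitin's "less than 1%" claim. The number of papers is a made-up figure, and the function name is just for illustration.

```python
def expected_false_flags(human_written_papers, false_positive_rate):
    """Expected number of genuinely human-written papers flagged as AI."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return human_written_papers * false_positive_rate


# Hypothetical: 1,000 honest submissions at a 1% false positive rate.
print(expected_false_flags(1000, 0.01))
```

Under those assumptions, roughly ten honest students out of a thousand would see a flag they did not earn, which is why the score needs human review.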

How to Use ChatGPT Ethically in Your Studies

The fear of Turnitin catching AI-generated text often pushes students toward one question: "How do I get around it?" But that’s the wrong question. A much better approach is to figure out how to use tools like ChatGPT as a powerful—and ethical—study partner.

When you use it right, AI doesn’t have to violate academic integrity. Think of it like a calculator. You wouldn't use it to solve a basic math problem you’re supposed to do in your head, but you'd absolutely use it to check your work on a complex equation. One replaces your thinking; the other supports it. The goal is to keep your own critical analysis and unique voice front and center.

Brainstorming and Idea Generation

One of the best ways to ethically use ChatGPT is as a brainstorming partner. We’ve all been there—staring at a blank page, stuck on a complex topic with no idea where to start. AI can get the gears turning without writing a single word of your final paper for you.

You could ask it to generate potential arguments for a debate, suggest subtopics for a broad research question, or create a mind map of related concepts. This is just a launchpad. The real intellectual work of developing those ideas into a coherent essay is still all on you.

Crucial Tip: Never copy AI-generated ideas or phrases directly. Use them as inspiration to fuel your own original thinking and guide your research.

Outlining and Structuring Your Paper

Getting your thoughts organized into a logical structure can be a real struggle. ChatGPT is an excellent tool for building a solid framework for your paper. You can feed it your main thesis and key points, then ask it to propose a coherent outline.

This helps you see the flow of your argument before you even start writing, making sure each section connects logically to the next. It’s like creating a blueprint before you start building.

- Do: Ask for different structural models, like a chronological outline versus a thematic one, to see which fits your topic best.

- Don't: Let the AI fill in the content for each section. The outline is just the skeleton; you have to provide the substance.

Simplifying Complex Topics and Refining Your Work

Let’s be honest, some academic sources are dense and nearly impossible to read. Pasting a complicated paragraph into ChatGPT and asking it to "explain this in simple terms" is a fantastic way to actually understand what you're reading. It's no different than looking up a word in a dictionary or asking a tutor for help.

AI can also act as a pretty good grammar and style checker. It’s a fast way to polish your work. Some educators are even exploring chatbots for education and AI tutors to integrate this kind of assistance directly into learning.

But be careful. Relying too heavily on AI to rephrase your sentences can strip the writing of its authentic voice. If you want to keep your style intact, our guide on how to humanize AI text is a great resource. It helps ensure your unique personality shines through—something no detector can replicate.

The line between using AI as an assistant and letting it do the work for you can get blurry. Here’s a quick breakdown to keep you on the right side of academic integrity.

Ethical vs. Unethical Use of ChatGPT for Assignments

| Task | Ethical Use (AI as a Tool) | Unethical Use (Academic Misconduct) |
|---|---|---|
| Brainstorming | Generating a list of potential essay topics or research questions. | Copying an AI-generated topic and outline without changes. |
| Research | Asking for summaries of complex theories to aid understanding. | Citing AI-generated summaries as if they were original sources. |
| Outlining | Requesting a structural template based on your thesis and key points. | Using an AI-generated outline and having it write the content for each section. |
| Drafting | Using AI to rephrase a clumsy sentence you wrote for better clarity. | Generating entire paragraphs or the full essay and submitting it as your own. |
| Editing | Checking for grammar, spelling, and punctuation errors. | Asking the AI to rewrite your entire paper to "sound more academic." |
| Feedback | Getting suggestions on how to strengthen a specific argument you've already written. | Submitting a draft that is more than 20% AI-generated content. |

Ultimately, the goal is to enhance your learning, not replace it. As long as the final work reflects your own ideas, research, and critical thinking, you're using AI as the powerful tool it was meant to be.

Your Top Questions About Turnitin and AI, Answered

Let's be honest: navigating AI tools in an academic setting can feel like walking through a minefield. The rules are changing fast, and it’s totally normal to have questions about how tools like Turnitin fit into the picture.

This section cuts through the noise to give you direct, clear answers to the most common questions students and educators are asking. We’ll cover everything from false positives to the future of AI detection, so you know exactly where things stand.

Can Turnitin Catch Older Versions of ChatGPT?

Yep. Turnitin can spot text from older models like GPT-3.5 just as well as it can from newer ones like GPT-4. The reason is simple: the detector isn’t looking for a specific AI model’s "signature."

Instead, it's trained to recognize the underlying patterns of machine-generated text. Things like suspiciously predictable word choices (low perplexity) and an unnaturally even sentence structure (low burstiness) are dead giveaways. While newer AI models are getting more sophisticated, they still build sentences based on statistical probabilities. That process leaves behind digital fingerprints, and Turnitin’s job is to find them, regardless of which GPT version was used.

What Should I Do If Turnitin Falsely Flags My Work?

Getting a false positive—where your own writing gets flagged as AI-generated—is incredibly stressful. First things first: don't panic. That AI score isn't a final verdict; it's just one piece of information for your instructor.

An AI score is meant to start a conversation, not end one. Your instructor is expected to use their own judgment and look at the score in context with your past work and writing style.

If this happens to you, here’s a simple, step-by-step game plan:

- Look at the Report: Ask your instructor if you can see the report. You need to know which specific parts of your paper were flagged. Sometimes, highly technical or structured writing can trigger the detector.

- Gather Your Proof: Pull together any evidence of your writing process. This could be anything from research notes and outlines to early drafts or even the version history in Google Docs. This isn't about being defensive; it's about showing your work.

- Have a Calm Conversation: Set up a time to talk with your instructor. Calmly explain your process and show them the evidence you collected. The goal is to demonstrate that the work is yours, not just to argue against the score.

Remember, most educators know these systems aren't perfect. A respectful, transparent chat is usually all it takes to clear things up.

How Are Universities Changing Their AI Policies?

Universities are definitely scrambling to adapt, but there’s no universal rulebook yet. The approaches vary—a lot. Some schools have put a hard ban on using generative AI for any graded work, treating it just like any other form of plagiarism.

Others are taking a more measured approach. They're creating guidelines that distinguish between acceptable and unethical uses. For instance, you might be allowed to use ChatGPT for brainstorming ideas but forbidden from using it to write entire paragraphs. Many now require a disclosure statement if you've used AI, asking you to explain exactly how you used it.

The bottom line? Always check your school's and your professor's specific policies. Don't assume anything. Your course syllabus is the final word on what's okay and what's not.

Will AI Detectors Ever Be 100 Percent Accurate?

It's highly unlikely. The core challenge is that AI writing and human writing are both moving targets. As AI models get better at sounding less predictable and more "human," the detectors have to constantly play catch-up.

Then there's the whole "hybrid" text problem—writing that's a mix of human and AI. Trying to untangle that with perfect accuracy is nearly impossible. Because of this, there will always be a margin of error. That's why these tools will always need a human in the loop to apply critical thinking and good judgment.
