A Practical Guide: AI Checker for Teachers in the Classroom

February 2, 2026

An AI checker is a tool that helps teachers figure out if a student's writing might have been generated by AI. It’s less like a typical plagiarism scanner and more like a linguistic analyst, trained to spot the subtle, almost-too-perfect patterns that tools like ChatGPT tend to leave behind. This guide is your practical roadmap for using this new technology well.

Why AI Checkers Are a New Reality for Teachers

The sudden explosion of generative AI has completely changed the game in education. It's left a lot of teachers searching for dependable ways to know if student work is truly their own. An AI checker for teachers is a direct answer to that problem, going beyond the old copy-and-paste checks to look at the actual style and structure of the writing.

Instead of just comparing text to a massive database of existing content, these tools look at qualities that are tough for a person to catch consistently. They're scanning for things like predictable word choices and sentence structures that are just a little too uniform—the kind of tell-tale signs that a piece of writing didn't come from the messy, creative process of a human brain.

The Growing Need for Verification

This isn't just a hypothetical problem; it’s happening in classrooms right now. More and more, educators are using these tools to open up conversations about what academic honesty even means in the age of AI.

The rush to adopt AI detection tools shows just how urgent this feels for schools. In the 2023-24 school year, 68% of secondary teachers said they used one, a huge jump from only 38% the year before. You can get more details on AI detection tool trends and their impact.

This trend makes it clear that we need practical solutions. But just having a tool isn't enough—bringing an AI checker into your classroom has to be done thoughtfully. It’s not about playing "gotcha" with students; it’s about fostering a learning environment that’s fair, transparent, and focused on genuine learning.

Key Considerations Before You Start

Before you jump in and pick a tool, it's really important to pause and think through a few things. A careful approach makes sure the technology actually supports your teaching goals instead of just creating an atmosphere of mistrust.

Here is a quick summary of the essential factors to weigh before integrating an AI checker into your assessment process.

Key Considerations for Using an AI Checker

| Consideration | Why It Matters for Teachers |
|---|---|
| Accuracy & False Positives | No detector is flawless. You need a clear plan for what to do when the tool flags something incorrectly to ensure fairness. |
| Student Privacy | You have to know where your students' data is going. Choosing a FERPA-compliant tool that respects privacy isn't just a good idea; it's a requirement. |
| Pedagogical Purpose | Is your goal to catch and punish, or to teach? An AI checker for teachers works best as a conversation starter about authorship and using AI ethically. |

Ultimately, these aren't just technical details; they're the foundation for using these tools responsibly and effectively in your classroom.

How AI Detection Tools Actually Work

So, how does an AI checker for teachers actually figure out if a student's essay was written by a machine? It's not magic, but it is pretty clever. Think of it less like a plagiarism scanner and more like a linguistic detective. These tools have been trained on mountains of text—some written by humans, some by AI—learning to spot the subtle giveaways that each one leaves behind.

Instead of just checking for copied-and-pasted content, AI detectors analyze the very fabric of the writing itself. They're looking for statistical patterns and quirks that are incredibly common in machine-generated text but feel a bit off when compared to how people naturally write.

The Clues AI Checkers Look For

At the heart of it, these tools are primarily looking at two things: perplexity and burstiness. Getting a feel for these two concepts is key to understanding where that AI-generated probability score actually comes from.

Perplexity: This is really just a fancy way of measuring how predictable the writing is to a language model. Human writing tends to be full of surprises. We use unusual words, craft odd phrases, and jump around in ways that an algorithm might not expect. This gives our writing high perplexity. AI, on the other hand, generates text by favoring statistically likely next words, which makes its prose smooth and predictable and gives it low perplexity.

Burstiness: This one is all about rhythm. Think about how you talk or write. You might use a few short, punchy sentences and then follow them up with a long, winding one that explains a complex idea. That's burstiness. AI models often struggle with this, producing text where the sentences are all roughly the same length and structure. It feels a little too uniform, a little too perfect.
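
To make these two ideas concrete, here is a minimal Python sketch. It is a toy stand-in, not how any particular checker works: real detectors score text with large language models, while this example assumes a simple word-frequency (unigram) model for perplexity and uses the spread of sentence lengths as a rough proxy for burstiness. The function names `unigram_perplexity` and `burstiness` are made up for illustration.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text, reference_text):
    """Perplexity of `text` under a word-frequency model built from `reference_text`.

    Add-one smoothing gives unseen words a small nonzero probability.
    Lower values mean the text is more predictable to this toy model.
    """
    ref_counts = Counter(tokenize(reference_text))
    vocab_size = len(ref_counts) + 1  # one extra slot for unseen words
    total = sum(ref_counts.values())
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no word tokens")
    log_prob = sum(
        math.log((ref_counts[t] + 1) / (total + vocab_size)) for t in tokens
    )
    return math.exp(-log_prob / len(tokens))


def burstiness(text):
    """Rough burstiness proxy: standard deviation of sentence lengths
    (in words) divided by the mean sentence length."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(tokenize(s)) for s in sentences]
    if len(lengths) < 2 or mean(lengths) == 0:
        return 0.0
    return pstdev(lengths) / mean(lengths)
```

With this sketch, three sentences of identical length score a burstiness of 0, while a mix of one-word and thirteen-word sentences scores much higher. Likewise, a phrase made of words the model has seen often gets a lower perplexity than a phrase full of words it has never seen.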

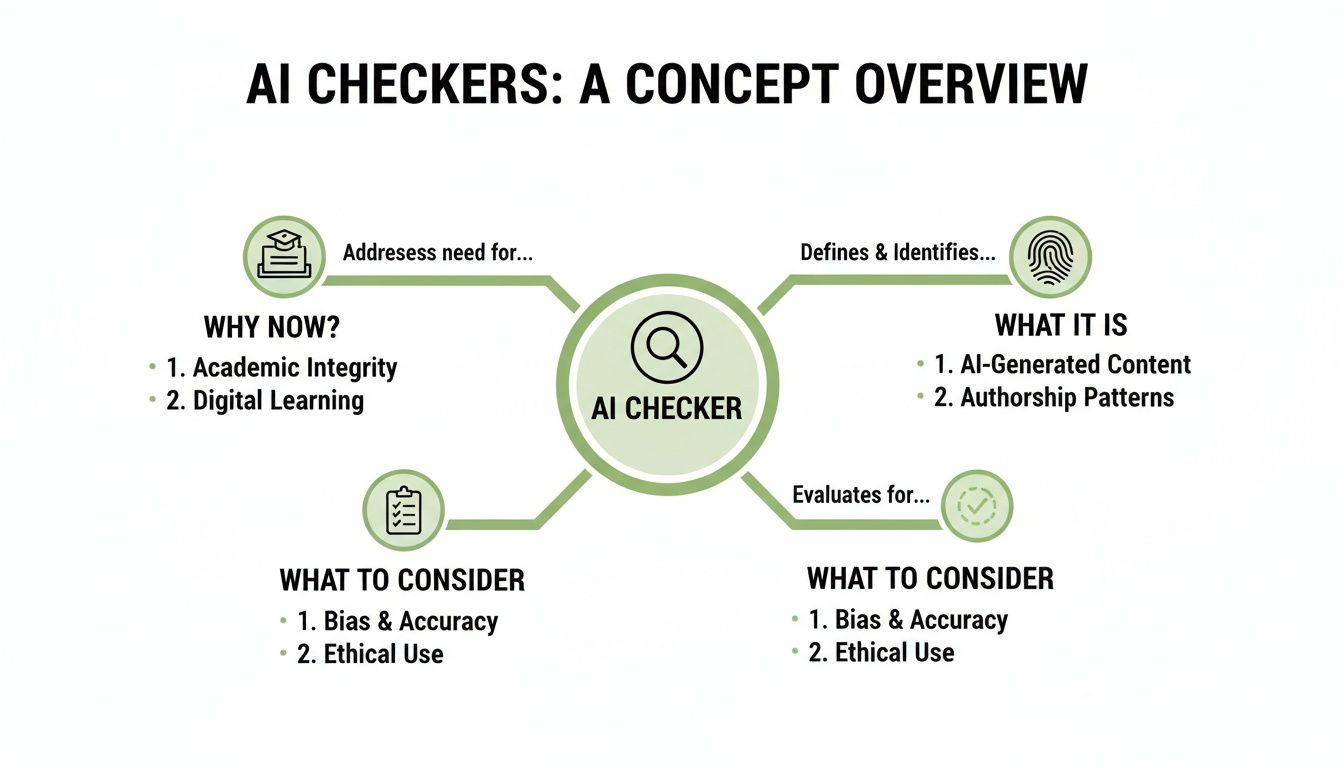

This quick overview shows how an AI checker fits into the bigger picture for educators, touching on everything from academic integrity to the ethical questions we need to ask.

As you can see, it’s not just about catching students. It’s about starting a much larger conversation in our schools. If you want to get into the nitty-gritty, we break it down even further in our guide on how AI checkers work.

Probabilistic, Not Definitive

This is probably the most important thing to remember: AI checkers give you a probability, not a certainty. They're making an educated guess.

An AI checker's result is a statistical assessment, not a final verdict. It might say there’s a 90% chance a text is AI-generated, but it can never be 100% sure. This is a critical distinction for treating students fairly.

This is also why you can paste the same essay into three different tools and get three wildly different scores. Each tool uses its own secret sauce—a unique algorithm trained on a different set of data—so they all interpret the clues differently. An AI score should always be the start of a conversation with a student, never the final, damning piece of evidence.
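
A quick worked example shows why a flag is not proof. The sketch below applies Bayes' rule to made-up numbers: it assumes 10% of submitted essays are AI-written, that the detector catches 90% of those, and that it wrongly flags 5% of human-written essays. None of these figures come from a real tool; they are placeholders you can swap for your own estimates.

```python
def probability_ai_given_flag(base_rate, true_positive_rate, false_positive_rate):
    """Bayes' rule: probability an essay is AI-written given that it was flagged."""
    flagged_ai = true_positive_rate * base_rate
    flagged_human = false_positive_rate * (1 - base_rate)
    if flagged_ai + flagged_human == 0:
        raise ValueError("detector never flags anything with these rates")
    return flagged_ai / (flagged_ai + flagged_human)


# Illustrative assumptions only: 10% of essays are AI-written, the detector
# catches 90% of them, and it wrongly flags 5% of human-written essays.
posterior = probability_ai_given_flag(0.10, 0.90, 0.05)
print(f"{posterior:.0%}")  # prints "67%"
```

Under these assumptions, roughly one in three flagged essays was written by the student. The lower the share of AI-written work in your class, the more of the flags are false alarms.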

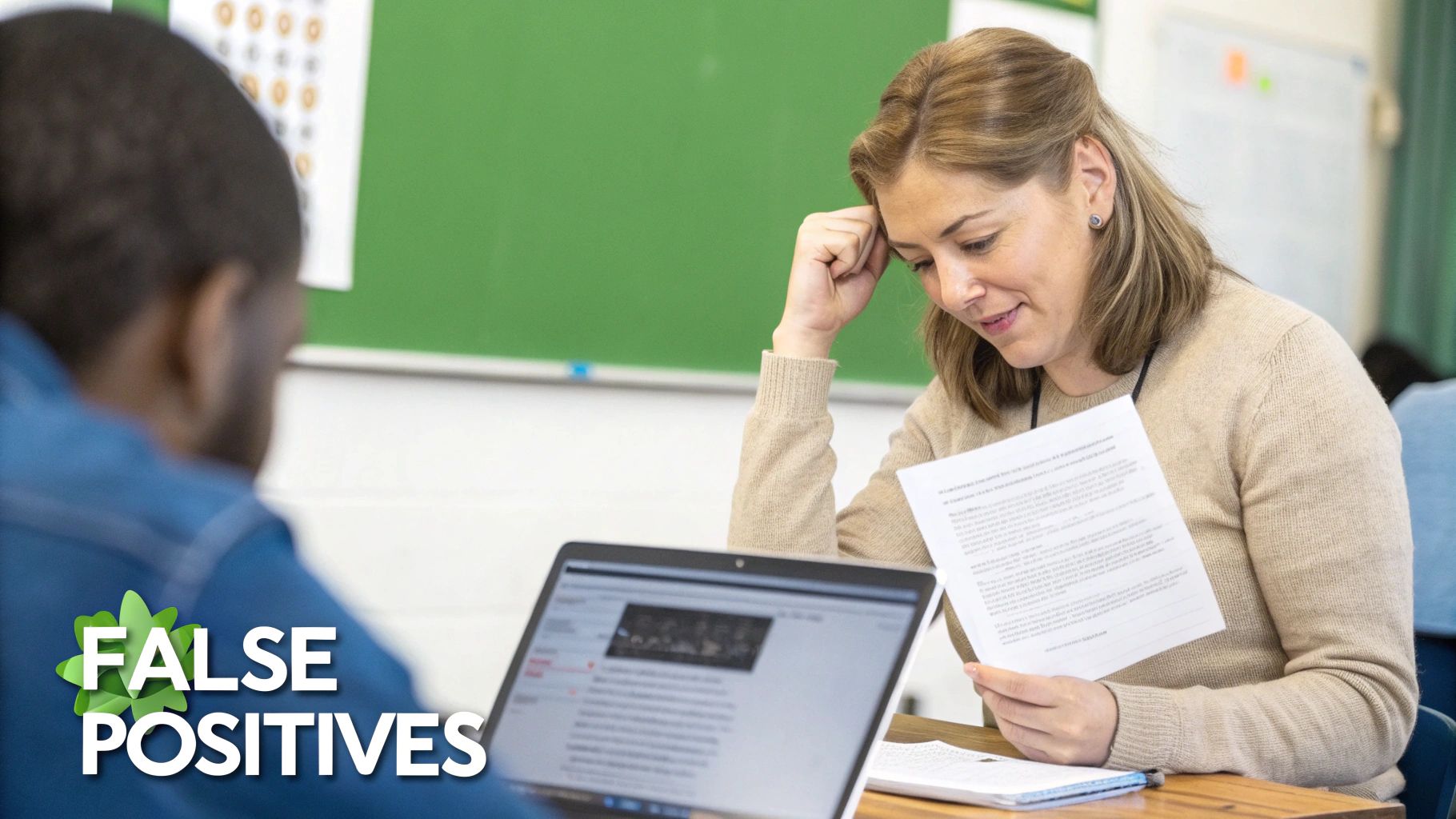

Understanding the Hidden Flaws of AI Checkers

While an AI checker for teachers feels like a modern solution for protecting academic integrity, it's essential to pull back the curtain and see these tools for what they are: imperfect. Their most significant and troubling weakness is the risk of false positives—when the software mistakenly flags a student's original writing as AI-generated. This single issue can create serious, and entirely unfair, problems in your classroom.

A high "AI score" should never be the final word. Think of it as a single data point, not a verdict. It’s a signal to look closer, a conversation starter, but never grounds for an accusation on its own. Understanding these limitations is the first step to using any AI checker ethically and effectively.

Why False Positives Are a Major Concern

False positives don't just happen randomly. They often target specific writing styles, which means certain students are far more likely to be flagged, raising real equity concerns that can damage the learning environment.

Students who are more vulnerable to being misidentified include:

- Non-native English speakers who might naturally use more structured or formulaic sentences while mastering the language.

- Neurodivergent students who may prefer to write in very logical, predictable patterns that can mimic an AI's output.

- Students who diligently follow a rubric or essay template, producing highly organized text that lacks the "burstiness" or variation the tool expects from a human.

When a tool penalizes a student for developing clear, structured writing skills—the very things we often teach—it's working against our educational goals. This is precisely why a teacher's careful, human-centered judgment is irreplaceable.

The core message for every teacher is this: An AI checker's score is a piece of data, not a definitive judgment. Its value lies in guiding your professional intuition, not replacing it.

The Accuracy Dilemma

Even the developers behind these tools will tell you they aren't foolproof. One contributing factor is the speed-accuracy trade-off: in the world of AI, processing things faster often means sacrificing precision. Add the fact that detection is a statistical guess in the first place, and no detector can be right 100% of the time.

Just how inaccurate can they be? The numbers might surprise you. When OpenAI evaluated its own AI text classifier back in 2023, it found the tool correctly identified only 26% of AI-written text. To put it another way, its own system missed AI-generated content nearly three out of every four times.

This reality check underscores why an AI checker should only be a small part of your overall assessment strategy. It might point you in a direction, but it's your expertise, your relationship with the student, and your analysis of their work over time that truly matters.

Creating a Fair and Effective Classroom AI Policy

Now that we know AI checkers aren't a silver bullet, it's time to shift our focus. Instead of getting stuck in a cat-and-mouse game of detection and punishment, we can use this moment to teach and guide. A clear, proactive classroom AI policy turns a potential problem into a real learning opportunity.

The goal isn't just to catch cheaters. It's to set transparent expectations that open the door for honest conversations about digital literacy and ethical scholarship. A good policy doesn't just list what's forbidden; it clearly shows students what appropriate AI use looks like.

Let's face it: AI is a tool our students will use for the rest of their lives. It's our job to teach them how to use it responsibly. This changes the conversation from a confrontational "Did you cheat?" to a more productive "How did you use this tool to support your original thinking?"

Building Your Classroom Policy

A strong policy starts by drawing clear lines in the sand. You need to spell out what’s encouraged, what's allowed (with proper citation), and what’s completely off-limits. This removes the guesswork for students and gives you a fair, consistent standard for grading.

Here’s what every good policy should cover:

- Define Acceptable Uses: Be specific about how students can use AI tools to help them learn. This could be anything from brainstorming initial ideas and generating a rough outline to checking the grammar on a final draft.

- Define Unacceptable Uses: Be just as clear about what crosses the line into academic dishonesty. This usually means generating entire essays, using AI to answer questions without understanding the material, or passing off AI text as their own work.

- Establish Citation Standards: If you allow students to use AI for certain tasks, you have to show them how to cite it. Just like they’d cite a book or a website, they need a standard way to credit the role AI played. This teaches them a crucial lesson: give credit where it's due.

- Outline Clear Consequences: Your policy needs teeth. State the consequences for breaking the rules clearly and make sure they align with your school's existing academic integrity policies. This ensures everyone is treated fairly.

To help you get started, here's a checklist of key components to consider when building your policy.

AI Classroom Policy Checklist

This table breaks down the essential parts of a comprehensive AI policy. Use it as a guide to start conversations at your school and ensure your own classroom rules are clear, fair, and educational.

| Policy Component | Key Question to Address |
|---|---|
| Philosophy & Rationale | Why does this policy exist? How does it support learning goals? |
| Definition of AI | What specific tools are we talking about (e.g., ChatGPT, Gemini, etc.)? |
| Permitted Uses | What specific tasks are students allowed to use AI for? (e.g., brainstorming, outlining) |
| Prohibited Uses | What actions are considered academic misconduct? (e.g., submitting AI work as original) |
| Citation Requirements | How should students acknowledge and cite their use of AI tools? |
| Disclosure & Transparency | Do students need to declare their AI usage on assignments? If so, how? |
| Consequences for Misuse | What happens if a student violates the policy? (Link to school-wide policies) |
| Teacher's Role | How will the instructor support students in using AI ethically? |
| Focus on Learning | How does the policy reinforce the value of original thought and critical thinking? |

A well-crafted policy based on these components helps create a predictable and fair environment for everyone.

Fostering a Culture of Integrity

Ultimately, your policy is a teaching tool. It's your chance to have an ongoing conversation about what authentic intellectual work really means in the 21st century.

An AI policy should be a living document, something you discuss at the start of the year and bring up again before major assignments. This constant reinforcement shows students the focus isn't on policing them, but on guiding their learning process and helping them find their own voice.

This proactive approach turns a potential classroom headache into a foundational lesson on ethics and critical thinking. By setting clear, fair rules, you give students the confidence to navigate this new technology with integrity. While the right AI checker for teachers can support your efforts, it can never replace a thoughtful policy and an open classroom dialogue.

Choosing the Right AI Checker for Your School

When it comes to picking an AI checker for teachers, it’s tempting to just grab the one with the highest accuracy score. But for any school or district, that’s just one piece of the puzzle. The real priorities need to be student safety, data privacy, and whether the tool actually works in a real classroom.

Think of it like following a detailed AI vendor due diligence checklist; you have to look under the hood. The first and most critical question should always be about student data. Where does a student's essay go once it's been scanned? Is the vendor compliant with strict privacy laws like FERPA? These are deal-breakers, and you need clear answers before you go any further.

Key Features Beyond Accuracy

Even if a tool is secure, it has to be practical. A high-powered detector that's a nightmare to use will sit on a shelf, collecting digital dust. The goal is to find a platform that feels intuitive for both teachers and students, without a steep learning curve.

Here are a few must-have features to look for:

- LMS Integration: Does it play well with your existing systems? A tool that plugs directly into Canvas, Schoology, or Google Classroom is a huge win. It saves teachers precious time and keeps their workflow in one place.

- Transparent Reporting: A simple percentage score isn't enough. The best tools show their work, highlighting the specific sentences or patterns that raised a flag. This gives you a starting point for a real conversation with a student, not just an accusation.

- A Focus on Pedagogy: The right tool should feel like an educational partner, not a digital hall monitor. Does the platform offer resources to help you talk about academic integrity and responsible AI use with your students?

Evaluating Your Options

As you start comparing different platforms, build a scorecard based on what truly matters to your school. Don't get fixated on a single accuracy test you saw online—every detector has its blind spots. The real question is which tool will best support your teachers and protect your students day in and day out.
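
If it helps to see a scorecard in action, here is one possible sketch in Python. Everything in it is an assumption for illustration: the criteria names, the weights, and the 1-to-5 ratings for two placeholder tools are invented, so replace them with whatever your school actually prioritizes.

```python
# Hypothetical criteria and weights; adjust them to your school's priorities.
WEIGHTS = {
    "privacy_compliance": 0.35,
    "transparent_reporting": 0.25,
    "lms_integration": 0.20,
    "pedagogy_resources": 0.10,
    "reported_accuracy": 0.10,
}


def weighted_score(ratings, weights=WEIGHTS):
    """Combine 1-5 ratings into one weighted score on the same 1-5 scale."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(ratings[name] * w for name, w in weights.items()) / total_weight


# Made-up ratings for two placeholder tools.
tool_a = {
    "privacy_compliance": 5,
    "transparent_reporting": 4,
    "lms_integration": 3,
    "pedagogy_resources": 4,
    "reported_accuracy": 3,
}
tool_b = {
    "privacy_compliance": 2,
    "transparent_reporting": 3,
    "lms_integration": 5,
    "pedagogy_resources": 2,
    "reported_accuracy": 5,
}

score_a = weighted_score(tool_a)
score_b = weighted_score(tool_b)
print(f"Tool A: {score_a:.2f}, Tool B: {score_b:.2f}")
```

In this made-up comparison, the tool with the weaker accuracy rating still comes out ahead because privacy and transparent reporting carry more weight. That is the point of writing the weights down before you look at any vendor's numbers.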

The most effective AI checker is one that supports a culture of academic integrity without creating an atmosphere of suspicion. Its primary role should be to provide data that empowers educators to make informed, human-centered decisions.

Ultimately, choosing the right tool is about finding a balance. You need a reliable AI checker for teachers that performs well, but also respects student privacy and fits into the demanding reality of the classroom. To see how different platforms stack up, check out our guide to the 12 best AI detectors available today.

Integrating AI Checkers Into Your Workflow

An AI checker for teachers is most powerful when it’s treated as a teaching instrument, not just a high-tech "gotcha" tool. Its true value isn't in catching students after the fact, but in being woven into your assessment process from the very beginning. When you do that, it stops being an accuser and starts being a guide.

The best way to handle a high AI score is to see it as a signal to start a conversation. Rather than jumping to conclusions, a flag from the checker should simply prompt a closer look at that student’s entire writing process. This moves the focus away from a single, often unreliable score and toward a more complete understanding of their work.

From Detection To Discussion

Adopting this mindset completely changes the purpose of the tool. It’s no longer about proving a student did something wrong. It's about spotting those moments where they might be struggling to form their own ideas or are leaning too heavily on AI to get started.

Here’s what a more proactive and supportive workflow could look like:

- Analyze Drafts Early: Don't wait for the final paper. Run early drafts through the checker to catch potential over-reliance on AI for brainstorming or outlining. This gives you a chance to step in and help.

- Pair Scores with Evidence: A score on its own means very little. The real work starts when you compare the flagged text to that student’s past assignments. Do you see jarring shifts in writing style, voice, or vocabulary?

- Initiate a Process-Based Conversation: The most important step. Sit down with the student one-on-one and ask them about their process. "How did you get started? What was your research like? Talk me through your outline." This kind of chat reveals more about authorship than any percentage score ever could.

An AI checker should serve as one data point among many. The goal is to gather a complete picture of a student's effort, turning a moment of potential conflict into a valuable opportunity for learning and growth.

This approach shifts the classroom dynamic from adversarial to supportive. It becomes a teachable moment about what authorship really means, how to think critically, and how to use technology responsibly. By focusing on the process over the final product, you end up reinforcing the very skills that matter most.

If you're looking for more strategies, you might be interested in our article on how teachers can detect AI writing.

Common Questions About AI Checkers in Education

As you start to bring AI detection tools into your classroom, it's completely normal for a bunch of questions—both practical and ethical—to pop up. Getting a handle on these new challenges means finding clear answers and, above all, staying committed to fairness for your students.

Let's tackle some of the most common concerns we hear from educators. The main thing to remember is that an AI checker for teachers is just a guide, not a final verdict. Its findings are a starting point, and they should always be viewed through the lens of your own professional experience and followed up with a supportive chat with your student.

What Should I Do if a Student's Work Is Flagged?

First, don't panic. A flag from an AI checker is a signal to look closer, not a reason to jump to conclusions. Think of it as the start of a conversation, not the end of one.

A good first step is to pull up some of the student's previous assignments. Are there any jarring or out-of-character changes in their writing style, tone, or even their vocabulary? A sudden shift can be a clue.

After that, the most crucial part is to sit down with the student for a private, non-confrontational chat. Ask open-ended questions about how they approached the assignment. "Can you walk me through your process for writing this?" or "What part of this did you find most challenging?" This conversation will give you a real sense of their understanding, which is a far better indicator of authorship than any software can provide.

Can Students Be Falsely Accused by an AI Checker?

Yes, they absolutely can. This is one of the biggest limitations of the technology right now. False positives are a known issue, and these tools can sometimes mistakenly flag text written entirely by a human. This is especially true for non-native English speakers or students who naturally write in a very formal or structured way.

Because the risk of a false positive is real, a score from an AI checker should never be the only piece of evidence in an academic integrity case. It's a data point, not a verdict. It needs to be backed up by your own professional judgment and a direct conversation with the student.

How Can I Teach Students to Use AI Tools Ethically?

The best strategy is to get ahead of the problem with education, not just react to it with punishment. It all starts with creating a crystal-clear AI policy for your classroom. This policy should spell out the difference between acceptable uses (like brainstorming ideas or checking grammar) and unacceptable ones (like having an AI write the entire essay).

Teach students how to properly cite AI if they use it as a research assistant, just as you'd teach them to cite a book or a website. By talking openly about what AI can and can't do, you position it as another tool in their academic toolbox—one that requires critical thinking and responsible use. This approach helps build genuine digital literacy instead of just policing for misuse.
