How Can Teachers Detect AI? A Practical Guide for Modern Educators

January 18, 2026

Detecting AI-generated content isn't about just one magic bullet. It's a combination of knowing your students' work, using technology wisely, and rethinking how we assign projects in the first place. The most obvious signs are often the most telling: perfectly structured but oddly lifeless writing, generic ideas, or a sudden leap in quality that just doesn't match the student's previous work. A balanced approach that puts critical thinking first will always be more effective than relying on a single tool.

The Educator's Dilemma: Understanding AI in Student Work

The rise of generative AI has thrown a real curveball at educators. We're all trying to uphold academic integrity and encourage genuine learning, but it’s tough when students have tools that can write an essay in seconds. The problem isn’t just about catching cheaters; it’s about figuring out where to draw the line between a helpful tool and academic dishonesty in this new reality.

To navigate this, we first have to understand what we're looking at. If you need a primer, our guide to what AI-generated content is (https://naturalwrite.com/blog/what-is-ai-generated-content) is a great place to build that foundational knowledge.

Our role is evolving. We have to maintain a fair classroom while adapting to technology that can replicate human writing with startling accuracy. This guide is designed to give you practical strategies that go beyond a simple "gotcha" approach. It's about fostering a classroom culture that values critical thinking, personal voice, and real skill development over just turning something in.

The Scale of AI in Academics

This isn't just a hypothetical problem—it's happening in classrooms every day. The numbers paint a clear picture. During the 2023-24 school year, a staggering 68% of K-12 teachers reported using AI detection tools regularly.

The need is obvious. Out of over 200 million papers scanned by Turnitin, about 11% had at least 20% AI-generated writing. That shows just how common this has become.

This shift forces us to be more than just graders; we have to be investigators of the learning process itself. The real goal isn't just to catch dishonesty but to guide students toward ethical AI use, ensuring their work genuinely reflects their own understanding.

For a deeper dive into how AI is shaping education, the SmartSolve AI blog offers some great perspectives on the challenges and opportunities we're facing.

To give you a clearer picture of the strategies available, here’s a quick summary of the main detection methods we'll be exploring.

At-a-Glance AI Detection Strategies for Teachers

This table breaks down the different approaches you can take, highlighting what each involves and where they shine—or fall short.

| Strategy | What It Involves | Best For | Limitations |
|---|---|---|---|
| Manual Observation | Knowing your students' writing style, voice, and typical errors. Looking for sudden, unnatural shifts in quality or tone. | Spotting inconsistencies in work from students you know well. Identifying papers that just "feel" off. | Subjective and time-consuming. Can be difficult in large classes or with new students. |
| AI Detection Tools | Uploading student text to software like Turnitin or GPTZero that analyzes patterns to estimate the probability of AI generation. | Quickly scanning a large number of assignments for potential red flags. Providing an initial data point for investigation. | Prone to false positives and negatives. Can be fooled by paraphrasing. Should never be used as sole proof. |
| Assignment Design | Creating "AI-resistant" tasks that require personal reflection, in-class work, analysis of current events, or multimedia components. | Proactively reducing the incentive and ability to misuse AI. Promoting higher-order thinking and genuine engagement. | Requires more creative planning and may not be suitable for all types of assessments. |
| Student Dialogue | Having structured conversations with students about their writing process, sources, and understanding of the material. | Investigating suspected cases in a supportive, non-accusatory way. Gauging a student's actual knowledge. | Relies on the student being honest. Can be intimidating for both the teacher and the student if not handled carefully. |

Ultimately, a mix of these strategies will give you the most reliable and fair framework for navigating AI in your classroom.

A Preview of Detection Strategies

In the rest of this guide, we'll dig deeper into a multi-layered strategy for identifying AI-generated work. Relying on any single method is a recipe for frustration and false accusations. Instead, a thoughtful combination of human insight, technology, and smart pedagogy is the only way forward.

Here’s a quick look at what’s coming up:

- Manual Red Flags: We’ll explore the tell-tale signs of AI—like that overly formal tone, the strange lack of personal stories, or the use of unnecessarily complex vocabulary.

- Using AI Detectors: You'll learn how to use these tools effectively while understanding their very real limitations and biases.

- Proactive Assignment Design: Get practical ideas for creating assignments that are much harder to cheat on, like those requiring personal reflection or in-class presentations.

- Fair Investigation: We’ll walk through a humane process for talking to a student when you suspect AI misuse, ensuring you handle it fairly and without jumping to conclusions.

Recognizing the Digital Fingerprints: Manual AI Detection Techniques

Before you reach for any detection software, remember that your most powerful tool is your own professional judgment. You know your students. You know their voices, their typical mistakes, and how their thinking has evolved throughout the semester. AI-generated text, for all its sophistication, often leaves behind subtle clues—digital fingerprints that just feel wrong to an experienced educator.

So, where do you start? Trust your instincts. If a paper feels "off" or doesn't sound at all like the student you've been working with, that’s your first and most important red flag. That gut feeling is often the most accurate sign that you need to take a closer look.

The Absence of a Human Voice

The classic tell of AI writing is its sterile, generic tone. It often reads like a perfectly competent summary from a textbook, but it’s missing the spark—the genuine curiosity, personal opinion, or unique insight that makes a student's work their own. Real student writing, even when it’s flawed, has a distinct voice.

Think about it. A student grappling with a tough idea might use a clunky but heartfelt metaphor, share a quick personal story to connect with the material, or even express frustration with a source. AI doesn't do that. Its goal is to be correct and comprehensive, not personal and reflective.

A key giveaway is writing that is technically perfect but emotionally empty. It hits every point on the rubric but makes no real argument or connection to the material. This emotional flatness is a hallmark of machine-generated text.

When you're reading a paper and you can't sense the person behind the words, there’s a good chance an AI was the author. For a deeper dive into these textual giveaways, our article on spotting AI writing provides more context and examples: https://naturalwrite.com/blog/is-this-written-by-ai

Unnatural Polish and Inconsistent Quality

Students' writing skills develop over time, and their work shows that journey. A paper that is suddenly flawless—completely free of the typos, grammatical quirks, or run-on sentences you’re used to seeing from a particular student—is immediately suspicious. This is especially true if their past work showed a consistent pattern of specific writing challenges.

Keep an eye out for these common red flags:

- Sudden Vocabulary Surge: The writing is peppered with sophisticated or overly formal words that are totally out of character. For instance, a student who usually writes in a clear, direct style is suddenly using words like "henceforth," "juxtaposition," or "myriad."

- Perfectly Uniform Structure: Every paragraph is roughly the same length. Each one follows a rigid topic-sentence-support-conclusion formula, and the transitions are almost too perfect. Human writing is messier and has a more natural, varied rhythm.

- Lack of Specifics: The text is full of broad generalizations but lacks concrete examples, especially ones drawn from class discussions, specific assigned readings, or personal experiences requested in the prompt.

A sudden leap from C-grade mechanics to A-grade perfection, without a matching improvement in critical thinking, is a massive indicator of AI assistance.

Questionable and Fabricated Sources

Citations are another area where AI frequently messes up. While the models are improving, they are still notorious for "hallucination"—inventing sources that sound plausible but are completely fake.

You might see a citation for an article that seems highly relevant, complete with authors and a journal title. But when you do a quick search, you discover the article, the journal, or even the authors don't exist. That's a smoking gun for AI misuse.

Even when the sources are real, the AI might misrepresent their arguments or just drop them in without any real analysis. It might list a dozen impressive sources in the bibliography, but the essay itself fails to engage deeply with any of them. If a student can’t explain the core argument of a source they cited, it's a good bet they didn't actually read it. That kind of shallow engagement is a clear digital fingerprint left by an AI tool.

Navigating AI Detection Tools: Promises and Pitfalls

When you suspect a student has used AI, the instinct is to reach for a quick technological fix. Tools like Turnitin, GPTZero, and Copyleaks have quickly become the go-to for many educators, offering what seems like a simple button to press for a definitive answer.

But let's be clear: these tools are not the silver bullet they appear to be.

Relying solely on an AI detector is a massive gamble. While they can offer a helpful starting point, their results should never be treated as concrete evidence of academic dishonesty. Think of a detector's score as a single, often unreliable, data point—a sign that you might need to dig a little deeper, not a final verdict.

Understanding the Tech's Very Real Limits

At their core, AI detection tools are pattern-finders. They scan text for signals like perplexity (how surprising the word choices are to a language model; predictable text scores low) and burstiness (how much sentence length and rhythm vary). Human writing is messy and inconsistent, so it tends to score higher on both; AI writing, on the other hand, often has a strange, uniform smoothness to it.
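To make those two signals concrete, here is a minimal Python sketch. It is not how Turnitin, GPTZero, or any commercial detector actually works: burstiness is approximated as the variation in sentence length, and perplexity is computed against a toy word-frequency model rather than a large language model. The function names are hypothetical, chosen only for illustration.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Split on ., !, ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (std dev / mean).

    Higher values mean sentence lengths vary more from one to the next.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def _tokens(text: str) -> list[str]:
    """Lowercase word tokens (letters and apostrophes only)."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`.

    Toy stand-in (an assumption of this sketch): real detectors score text
    with a large language model, not a word-frequency table.
    """
    ref_counts = Counter(_tokens(reference))
    tokens = _tokens(text)
    if not tokens:
        return float("inf")
    vocab = len(ref_counts) + 1  # one extra slot for unseen words
    total = sum(ref_counts.values())
    log_prob = sum(math.log((ref_counts[t] + 1) / (total + vocab)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


uniform = "The cat sat down. The dog ran off. The bird flew up."
varied = "No. I really did not expect the experiment to fail so badly on the first try. Odd."
print(burstiness(uniform) < burstiness(varied))  # uniform sentences are less "bursty"
```

Even in this toy form, the weakness is visible: a careful human writer who favors even, predictable sentences would score just like the "uniform" sample.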

The problem is, AI models are getting frighteningly good at mimicking human chaos, making their output harder and harder to flag. It's a constant cat-and-mouse game where the detectors are always a step behind the generators. If you want to get into the weeds, our guide on the best AI detectors unpacks the technology in more detail.

Worse yet, these tools are riddled with biases. Research has shown that these detectors can systematically penalize non-native English speakers. One study found that Black teenagers are 2.86 times more likely than their White peers to have their writing incorrectly flagged as AI-generated. Meanwhile, only 19% of teachers report having clear AI policies at their schools, leaving most to rely on these flawed systems.

Take the Turnitin interface, which many educators are familiar with. The clean, simple dashboard makes it seem easy to get a quick read on a paper's originality. But that simplicity hides the deep-seated unreliability of the AI scores it produces.

A Practical Guide to AI Detection Tools

To help you make sense of the options out there, here’s a look at some of the most common tools teachers are using. Remember, none of these are foolproof, and each comes with its own set of strengths and weaknesses.

Comparing Popular AI Detection Tools

| Tool Name | How It Works | Pros | Cons & Known Biases |
|---|---|---|---|
| GPTZero | Analyzes text for low perplexity and burstiness, which are common traits of AI-generated content. | Offers a simple interface, browser extensions, and detailed sentence-by-sentence highlighting. Often considered one of the more accurate options. | Can still be fooled by edited AI text or human writing that is very formulaic. Struggles with text from newer, more advanced AI models. |
| Copyleaks | Uses a multi-layered approach, analyzing both statistical patterns and semantic meaning to identify machine-generated text. | Integrates with many Learning Management Systems (LMS) and checks for both plagiarism and AI. Provides a clear percentage score. | Has been shown to have a bias against non-native English speakers, whose writing patterns can sometimes mimic AI. False positives are a known issue. |
| Turnitin | Integrated directly into their plagiarism checker, it analyzes writing consistency and other machine-like patterns. | Convenience is its biggest plus: it’s already part of the workflow for many schools. The "all-in-one" report is easy to access. | The detector's accuracy has been widely questioned by educators. The score can be influenced by paraphrasing tools and highly structured academic writing. |
| Originality.AI | Marketed towards content creators but used by educators, it's trained to detect patterns from popular models like GPT-4. | Claims a higher accuracy rate (99%) on newer AI models and can detect paraphrased content effectively. | It's a paid tool with a steeper cost. Like others, it can misinterpret human writing that lacks stylistic flair, leading to false accusations. |

Ultimately, no tool can replace your professional judgment. They can provide a signal, but you are the one who has to interpret it and decide what to do next.

How to Interpret the Results (Without Jumping to Conclusions)

So, a paper comes back with a 95% AI-generated score. What now? The most important first step is to take a breath and resist the urge to fire off an email. A high score is not proof of cheating; it's a signal to start a quiet, human-centered investigation.

Keep these critical points in mind:

- False Positives Happen. A Lot. Students who write in a very formal or structured style are prime candidates for being wrongly flagged. The same goes for non-native English speakers, whose writing might naturally have the lower "burstiness" that triggers the algorithm.

- Students Edit AI Output. A student could use an AI to get a rough draft and then edit it heavily, which can often fool a detector. On the flip side, using a simple paraphrasing tool on their own work can sometimes make it look more like AI text.

- Your Knowledge of the Student is Key. Pull up their past assignments. Does the flagged paper sound like them? Are the vocabulary, tone, and sentence structure a complete departure from their previous work? A drastic change is a far more reliable red flag than any percentage spit out by a machine.

Never, ever confront a student based only on a detector’s score. This can shatter the trust you've built and lead to incredibly stressful—and potentially false—accusations. The score is a reason to ask questions, not to level an accusation.
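A quick calculation shows why a flag alone is weak evidence. The sketch below applies Bayes' rule to a detector's result. All three input rates are hypothetical numbers picked for illustration, not measured figures for any real tool, and the function name is made up for this example.

```python
def probability_ai_given_flag(
    prior: float,
    true_positive_rate: float,
    false_positive_rate: float,
) -> float:
    """Bayes' rule: chance a flagged paper really involved AI.

    prior               -- share of all papers that involve AI (assumed)
    true_positive_rate  -- share of AI papers the detector flags (assumed)
    false_positive_rate -- share of human papers wrongly flagged (assumed)
    """
    flagged_ai = prior * true_positive_rate
    flagged_human = (1 - prior) * false_positive_rate
    denominator = flagged_ai + flagged_human
    if denominator == 0:
        return 0.0
    return flagged_ai / denominator


# Hypothetical scenario: 10% of papers involve AI, the detector catches 90%
# of them, and it wrongly flags 5% of honest papers.
p = probability_ai_given_flag(prior=0.10, true_positive_rate=0.90, false_positive_rate=0.05)
print(round(p, 2))  # 0.67
```

Under those assumed rates, roughly one flagged paper in three would belong to a student who did nothing wrong, which is why the score can only ever be a reason to look closer.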

A Smarter, More Humane Approach

Instead of treating these tools as a verdict-delivery service, think of them as a simple screener. A high score should be the trigger for you to go back and perform the manual checks we discussed earlier—looking for that missing personal voice, checking for bizarrely fabricated sources, and comparing writing styles.

The real evidence will always come from your expertise as an educator, not from an algorithm. Navigating this new world requires a healthy dose of skepticism. These tools offer a tempting shortcut, but they are no substitute for your professional judgment and your relationship with your students. Use them sparingly, question their findings, and always choose a fair, humane investigation over a quick, automated judgment.

Designing Assignments That AI Can't Ace

While playing detective with student work is sometimes necessary, the best defense is a good offense. If we want to move beyond the cat-and-mouse game of AI detection, we need to get proactive. That means rethinking our assignments from the ground up to create tasks where AI simply isn't a useful tool.

The trick is to design work that genuinely requires a human touch. We need to lean into the things that large language models, for all their power, just can't do well: personal reflection, critical analysis of lived experiences, and direct engagement with what's happening right here, in our classroom.

Make It Personal

The fastest way to short-circuit an AI is to require a personal connection. A generic prompt about the themes in The Great Gatsby is practically begging for a chatbot to write it. The AI has read every analysis ever published online.

But what an AI doesn't have is your student's life.

Anchor your assignments in their world. Instead of asking for a bland summary of a historical event, ask them how a primary source from that event makes them feel, or how it connects to a current issue they see in their own community.

For instance, you could have students:

- Connect a key concept from psychology to a conflict they've seen in a movie or a personal relationship.

- Analyze a character’s decision in a novel through the lens of a personal dilemma they've faced.

- Write a reflection on how a recent scientific discovery might realistically impact their own neighborhood or family.

This simple pivot makes the student the primary source. The AI can't fake a memory or a genuine opinion.

Use the Classroom to Your Advantage

The physical classroom is still one of our best tools. Anything done in person, under your supervision, and on the clock is naturally resistant to AI assistance.

This doesn't mean we have to go back to a world of nothing but high-stakes, timed exams. Think smaller and more frequent.

A low-stakes, five-minute handwritten response to a reading at the start of class is invaluable. It gives you a real, unfiltered sample of that student's writing and thinking. This becomes your baseline.

When you have a collection of these small, in-class artifacts, it becomes glaringly obvious if a formal paper submitted later sounds like it was written by a completely different person. That discrepancy is your entry point for a conversation.

Grade the Process, Not Just the Paper

AI is fantastic at generating a polished final draft. What it’s terrible at is faking the messy, frustrating, and ultimately rewarding journey of getting there. So, let’s start grading that journey.

By making the process a significant part of the grade, you build a structure that’s incredibly difficult for an AI to replicate.

Instead of just waiting for the final paper to land in your inbox, require students to submit the evidence of their work along the way.

Here are a few ideas:

- The Brainstorm: Have them turn in a mind map, a messy outline, or even just a list of questions they have about the topic.

- The Annotated Bibliography: Don't just ask for a list of sources. Require a short paragraph for each one explaining why they chose it and how they plan to use it.

- Multiple Drafts: Ask for a rough draft and a final version. The real key? Make them write a short "revision memo" explaining the top three changes they made and why.

- Peer Review: Build in structured peer feedback. This forces them to engage critically with another human's work, a skill AI can't teach.

When we assess these steps, we're rewarding the actual intellectual labor, not just the finished product. It gives us checkpoints to see the student's thinking evolve and makes the entire learning process more transparent for everyone.

Creating a Fair and Effective Investigation Process

Few things are more challenging for an educator than suspecting a student of academic dishonesty. When AI enters the picture, it gets even murkier. But the goal here isn't just to "catch" a student; it's about upholding academic integrity and, hopefully, turning a tough situation into a real learning moment. That requires a process that’s fair, well-documented, and starts with conversation, not accusation.

It's tempting to jump to conclusions based on a detector score or a gut feeling, but doing so can break the trust you've worked hard to build. The first move should always be to pause and gather more meaningful evidence. A single suspicious paper isn't enough to go on—you need context.

First, Gather Comprehensive Evidence

Before you even think about talking to the student, put on your investigator hat. The objective is to build a complete picture of that student's work, looking far beyond what one tool can tell you. A solid case is never built on a single data point; it's about connecting several dots that tell a consistent story.

Your evidence-gathering checklist should look something like this:

- Past Assignments: Pull up their previous essays, in-class writing, or even short discussion posts. Compare the writing style, voice, vocabulary, and the typical grammatical mistakes they make. A sudden, dramatic shift is one of the most powerful pieces of evidence you can have.

- In-Class Performance: How does the student talk in class? Do their verbal contributions and questions reflect the sophisticated analysis you see in the paper? A major disconnect is a huge red flag.

- Document History: If the assignment was turned in on a platform like Google Docs, check the version history. A paper that appears almost fully formed in a single copy-paste, with little to no evidence of drafting or revision, is highly suspicious.

- The Assignment Itself: Go back and re-read the submission with a critical eye. Look for those manual tells we talked about earlier—are there fake sources, a bland and generic tone, or a total lack of personal insight?
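The document-history check in particular can be made a little more systematic. The sketch below assumes you have jotted down the document's character count at each saved version, by hand, from the version history view. It does not call any Google Docs API, and the function name and sample numbers are hypothetical.

```python
def largest_jump_fraction(char_counts: list[int]) -> float:
    """Fraction of the final length that arrived in the single largest revision step.

    `char_counts` lists the document's character count at each saved version,
    oldest first. The first version is compared against an empty document.
    """
    if not char_counts or char_counts[-1] <= 0:
        return 0.0
    previous = [0] + char_counts[:-1]
    biggest = max(cur - prev for prev, cur in zip(previous, char_counts))
    return max(biggest, 0) / char_counts[-1]


drafted = [200, 900, 1500, 2400, 3000]  # grew steadily over several sessions
pasted = [2950, 3000]                   # nearly everything appeared at once

print(largest_jump_fraction(drafted))  # 0.3
print(round(largest_jump_fraction(pasted), 2))  # 0.98
```

A high fraction is not proof on its own, since a student may have drafted elsewhere and pasted the result in, so treat it as one more dot to connect with the rest of the checklist.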

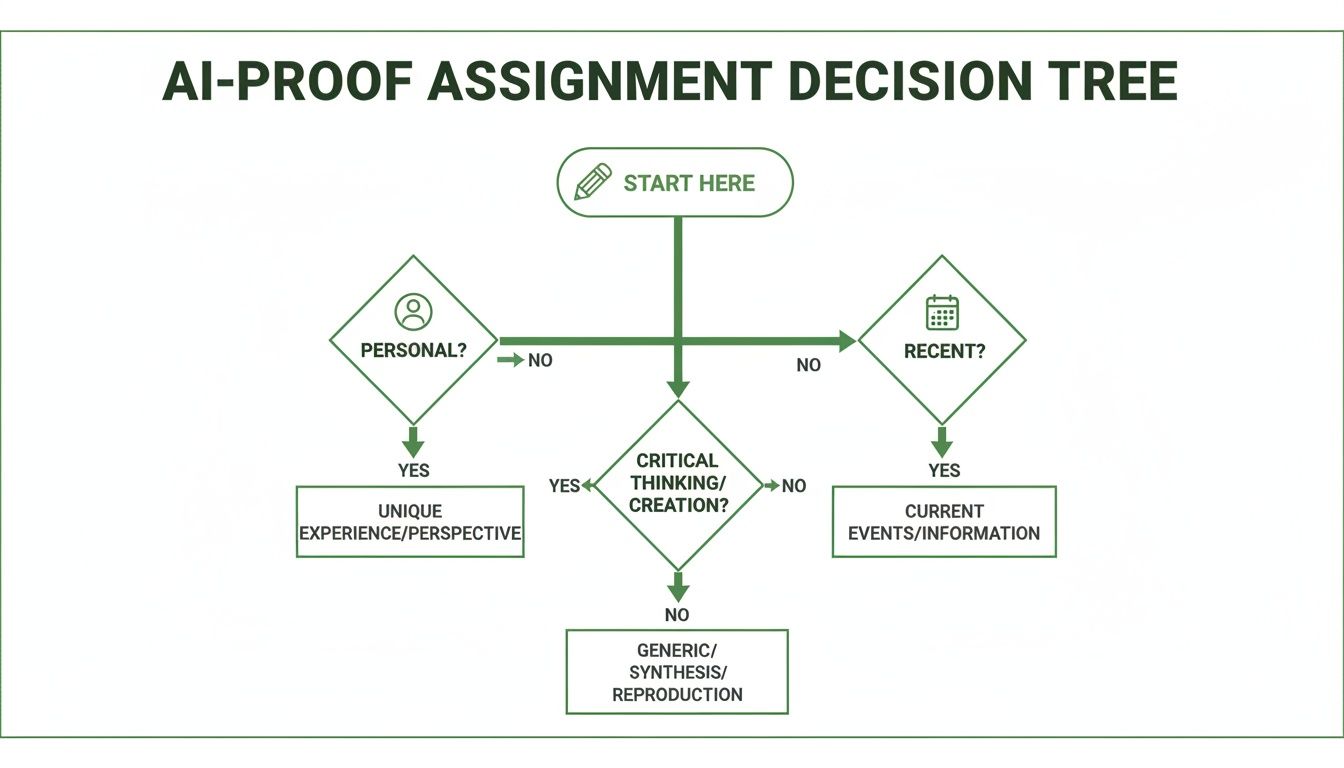

This infographic offers a great decision-making framework for designing assignments that naturally produce this kind of evidence from the start.

As the decision tree shows, assignments that lean into personal experiences or very recent events are much harder for AI to handle, which in turn helps you establish a clearer baseline of a student's authentic work.

Next, Initiate a Non-Accusatory Conversation

Once you have your evidence organized, it’s time for a private chat. The tone of this meeting is absolutely critical. You want to frame it as a discussion about their writing process, not an interrogation. For some great general tips that can be adapted to the classroom, check out a practical guide on how to conduct investigations.

Kick things off with open-ended questions driven by curiosity. This invites the student to open up about their work and gives them a chance to prove they actually understand it.

Conversation Starters to Try

- "This was a really complex paper. Could you walk me through your thought process for the main argument?"

- "I was interested in the sources you cited. Can you tell me more about what you learned from the one by [Author]?"

- "I noticed a different style in this essay compared to your last one. Can you tell me about what you did differently in your writing process this time?"

A student’s ability—or inability—to talk about their own work in detail is often the most telling part of the whole process. If they can't explain their own argument or define a key term they used, it creates a natural opening to discuss authorship and academic honesty.

Finally, Document Everything and Follow School Policy

Throughout this entire process, meticulous documentation is your best friend. Keep a log of every step you take, from the initial suspicion and the evidence you gathered to the notes from your conversation with the student. This record protects both you and the student by keeping the process transparent and fair.

Lastly, make sure you are in lockstep with your school or district's academic integrity policy. Know the official procedures for reporting a suspected case and what the potential consequences are. Following established policy ensures any action taken is consistent, fair, and defensible, turning what could be a punitive situation into a structured, educational one.

Answering Your Questions About AI in the Classroom

As educators, we're all grappling with how to handle AI in our work. It's a new frontier, and it’s natural to have questions. Let's tackle some of the most common ones I hear from colleagues, focusing on practical advice you can use right away.

What’s the Single Most Reliable Red Flag for AI Writing?

Honestly, there's no magic bullet. These AI models are getting smarter by the day. But if I had to pick one thing, it's a sudden, jarring disconnect between the work a student turns in and what you know they're capable of.

Think about that student whose writing has a distinct voice, a particular way of phrasing things, or even common grammatical quirks. If they suddenly hand in an essay with a flawless, sophisticated vocabulary and a perfectly sterile tone that feels nothing like them, that’s your biggest clue. It’s that "Spidey-sense" we develop as teachers. Trust your gut when a paper just doesn't feel like your student.

Can I Just Rely on an AI Detector's Score?

I wouldn't. Please don't. Think of AI detection tools as a first-pass filter, not a final verdict. They can be a helpful signal to look a little closer, but they are far from perfect.

These tools are notorious for false positives, especially with students who are English language learners or those who have a very formal, structured writing style. I've seen them flag perfectly legitimate work simply because the writing was clear and concise.

A high AI score isn't proof of cheating. It's a prompt for you to do a bit more digging. It's the start of the conversation, not the end of it.

Relying solely on a percentage from a machine can erode the trust you've built with your students. Always back it up with your own professional judgment.

How Should I Approach a Student I Suspect of Using AI?

The key here is to go in with curiosity, not an accusation. The goal is to open a dialogue, not to start a fight.

Set up a time to chat with the student one-on-one. I find it’s best to frame it as a check-in about their writing process. Start with open-ended questions that invite them to talk about their work.

- "This was a fascinating point you made here. Could you tell me a little more about what led you to that conclusion?"

- "I was curious about your research process for this paper. Which sources did you find the most compelling?"

- "This paragraph is really well-structured. Can you walk me through how you drafted it?"

If they wrote the paper, they can talk about it. If they can’t elaborate on their own ideas or process, the conversation naturally shifts toward authorship and what academic integrity looks like in our class. It becomes a teaching moment instead of an interrogation.

So, Should I Ban AI Entirely, or Teach Students How to Use It?

A total ban feels like the easy answer, but in the long run, it's a losing battle. AI tools are here to stay, and our students will absolutely need to know how to work with them in college and their careers. Banning them just pushes the use underground and misses a huge opportunity.

A much better approach is to teach responsible, ethical use. Show them how AI can be a powerful tool for brainstorming ideas, creating an outline, or even checking for clunky sentences.

The key is to build your classroom policies around transparency and accountability. If a student uses an AI tool, they should cite it, just like any other source. The focus should always be on their own critical thinking and analysis driving the final product. This way, you’re not just policing them; you’re preparing them for the future.
